TL;DR:

- Stanford University released the “Foundation Model Transparency Index” to assess AI companies.

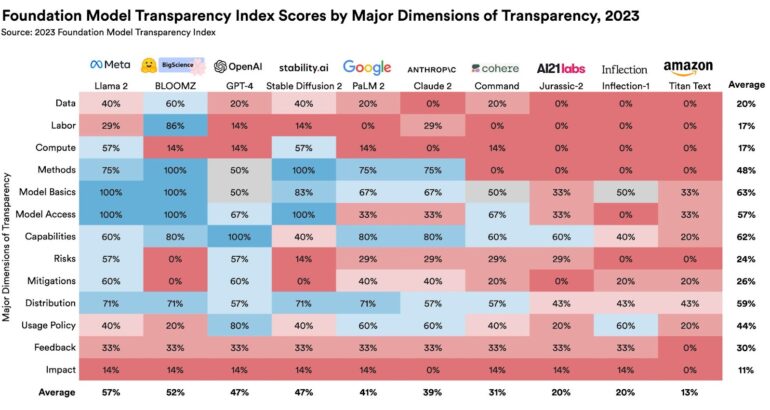

- Llama 2 by Meta earns the highest transparency score at 54%.

- Microsoft-backed OpenAI’s GPT-4 ranks third with a 48% transparency score.

- Google’s PaLM 2 and Amazon-backed Claude 2 secure fifth and sixth positions.

- Lack of transparency hinders policymaking, business confidence, research, and consumer understanding.

- Many AI companies don’t disclose user numbers or the use of copyrighted material.

- Transparency in AI is a global policy priority, with the EU leading the charge.

- The G7 summit and an upcoming UK international summit emphasize the importance of AI regulation.

Main AI News:

A study released on Wednesday finds that the artificial intelligence industry discloses little about how its models are built and used, strengthening the case for regulatory guidance as the technology grows rapidly. Researchers at Stanford University have introduced the “Foundation Model Transparency Index,” which assesses and ranks ten prominent AI companies on their transparency.

The top spot, with a score of 54 percent, goes to Llama 2, developed by Meta, the parent company of Facebook and Instagram. The result puts the social media company ahead of its peers on transparency. GPT-4, the flagship model of Microsoft-backed OpenAI, the maker of ChatGPT, places third with a score of 48 percent.

Google’s PaLM 2 takes fifth place with 40 percent, while Claude 2, from the Amazon-backed firm Anthropic, follows in sixth with 36 percent. According to Rishi Bommasani, a researcher at Stanford’s Center for Research on Foundation Models, companies should aim for transparency scores between 80 and 100 percent.

The Stanford study finds that inadequate transparency in AI causes problems for several groups. Policymakers struggle to shape effective regulations for the technology, and businesses cannot tell whether it is dependable enough for their own applications. Academics face hurdles in conducting research, and consumers find it hard to understand the limitations of these AI models.

“If you don’t have transparency, regulators can’t even pose the right questions, let alone take action in these areas,” Bommasani said. AI draws both anticipation for its technological potential and apprehension about its societal impact, which adds urgency to the issue.

One gap identified by the Stanford study is that companies do not disclose how many users rely on their AI models or where those users are located. Most AI firms also do not disclose how much copyrighted material their models draw on.

Transparency has become a major policy concern for governments around the world, including the European Union, the United States, the United Kingdom, China, Canada, and the Group of Seven (G7). The EU, at the forefront of AI regulation, is poised to adopt the world’s first law covering AI technology by the end of the year. Against this backdrop, Britain is set to host an international summit on AI later this year, following the G7 summit held in Japan earlier in the year, which called for decisive action on AI.

Conclusion:

Stanford’s “Foundation Model Transparency Index” shows that transparency in the AI sector is insufficient and that the industry needs to become more open and accountable. The shortfall obstructs effective policymaking, leaves businesses uncertain, and hampers academic research. As governments worldwide prioritize transparency in AI, businesses that champion it may be better placed to succeed in a market increasingly shaped by responsible AI practices.