TL;DR:

- SuperContext integrates large language models (LLMs) with supervised language models (SLMs) to enhance text generation.

- It addresses LLMs’ weaknesses in generalizability and factuality by supplying them with task-focused predictions from SLMs.

- Empirical studies show that SuperContext outperforms traditional methods in generalizability and factual accuracy.

- It significantly improves LLM performance across diverse tasks, including natural language understanding and question answering.

- SuperContext performs especially well on out-of-distribution data, which makes it well suited to real-world applications.

Main AI News:

Large language models (LLMs) have transformed natural language processing with their ability to generate human-like text. However, their performance degrades on data and queries that differ significantly from their training material. The resulting inconsistent outputs, often hallucinations, limit their reliability and applicability across contexts.

Traditional methods refine LLMs through extensive training and prompt engineering, but they still struggle with generalizability and factuality and raise questions about efficiency. To address these challenges, researchers from Westlake University, Peking University, and Microsoft have proposed an approach called “SuperContext.”

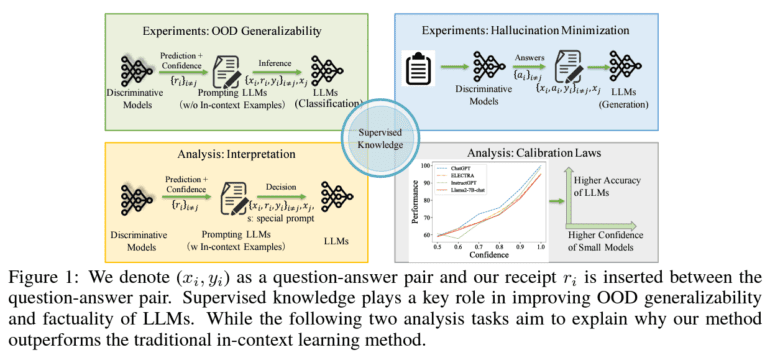

SuperContext is a framework that combines the strengths of large language models with those of smaller, task-specific supervised language models (SLMs). Its core idea is to insert the outputs of SLMs into the prompts given to LLMs, so that the LLM’s broad pre-trained knowledge is combined with the knowledge the SLM learned from task-specific data.

This integration is what addresses the generalizability and factuality issues. By incorporating an SLM’s predictions and confidence levels into the LLM’s inference process, SuperContext lets the LLM draw on precise, task-focused signals. Bridging broad, general-purpose learning and nuanced, task-specific knowledge in this way yields more balanced and effective model performance.
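To make the idea of putting an SLM’s prediction and confidence into an LLM prompt more concrete, here is a minimal sketch. It assumes the SLM is a classifier whose confidence is taken as its highest softmax probability; the names `SLMOutput`, `slm_output_from_logits`, and `build_supercontext_prompt`, as well as the prompt wording, are hypothetical illustrations and not the researchers’ actual implementation or template.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class SLMOutput:
    """Hypothetical container for a task-specific model's prediction."""
    label: str
    confidence: float  # assumed to be a probability in [0, 1]


def slm_output_from_logits(labels: List[str], logits: List[float]) -> SLMOutput:
    """Turn classifier logits into (label, confidence) via a numerically stable softmax.

    Using the top softmax probability as "confidence" is an assumption for illustration.
    """
    if not labels or len(labels) != len(logits):
        raise ValueError("labels and logits must be non-empty and the same length")
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]  # subtract max to avoid overflow
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return SLMOutput(label=labels[best], confidence=probs[best])


def build_supercontext_prompt(task_instruction: str, test_input: str, slm: SLMOutput) -> str:
    """Assemble an LLM prompt that carries the SLM's prediction and its confidence.

    The template text is a hypothetical example, not the paper's exact prompt.
    """
    if not 0.0 <= slm.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    return (
        f"{task_instruction}\n\n"
        f"Input: {test_input}\n"
        f"A task-specific model predicts: {slm.label} "
        f"(confidence: {slm.confidence:.2f}).\n"
        "Taking this prediction and its confidence into account, give your final answer."
    )


if __name__ == "__main__":
    slm = slm_output_from_logits(["negative", "positive"], [0.0, 2.0])
    prompt = build_supercontext_prompt(
        "Classify the sentiment of the input as positive or negative.",
        "The plot was thin, but the acting was superb.",
        slm,
    )
    print(prompt)  # this string would then be sent to whichever LLM is in use
```

Passing the confidence alongside the label gives the LLM a cue for how much to trust the SLM: it can defer to a highly confident prediction and lean on its own knowledge when the SLM is uncertain, which matches the balance between broad and task-specific knowledge described above.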

Empirical studies support SuperContext’s potential. Compared with traditional methods, it consistently improves on the performance of both SLMs and LLMs used alone, particularly in generalizability and factual accuracy. Across tasks ranging from natural language understanding to question answering, SuperContext outperforms its predecessors, and its advantage is most pronounced on out-of-distribution data, which reinforces its effectiveness in real-world applications.

In a rapidly evolving landscape where language models are central to many industries, SuperContext offers a way to make LLMs more reliable and adaptable. As demand for accurate, context-aware language generation grows, SuperContext points toward more robust and effective AI-powered text generation.

Conclusion:

SuperContext represents a significant advance in natural language processing. By bridging LLMs and SLMs, it improves the reliability and adaptability of language models in business applications. It promises more accurate and context-aware AI-powered text generation, opening doors to better customer service, content creation, and data analysis for businesses.