- SynthEval, a new open-source framework, targets a long-standing gap: the systematic evaluation of synthetic tabular data.

- It responds to the need for comprehensive evaluation tools as privacy concerns around real personal data continue to grow.

- SynthEval offers a wide range of metrics and a simple interface for running predefined benchmarks or customizing evaluations.

- Its benchmark module can evaluate several synthetic versions of the same dataset at once.

- By making evaluation easy to apply and extend, SynthEval helps researchers connect theoretical advances in synthetic data evaluation with practical use.

Main AI News:

As synthetic data is used more widely in computer vision, machine learning, and data analytics, comprehensive frameworks for evaluating it become increasingly important. Synthetic data, generated to mimic the structure of real-world data, is especially valuable where collecting authentic data is difficult or infeasible. For tabular data containing personal information, such as patient records, citizen demographics, or customer attributes, synthetic data can support knowledge discovery and the development of predictive models used for decision-making and product innovation.

However, the growing volume of tabular data about individuals raises significant privacy concerns, and data protection regulations exist to shield people from harms such as privacy breaches, fraud, or discrimination. These regulations can slow scientific progress, but they are necessary to limit those harms. Traditional anonymization methods often fail to provide strong privacy protection, which makes synthetic data an attractive alternative. That alternative is only trustworthy, however, if there are sound methods for measuring how useful and how private a synthetic dataset actually is.

Enter SynthEval, an open-source framework introduced by researchers at the University of Southern Denmark. SynthEval aims to provide a standardized, easy-to-use platform for comprehensively evaluating synthetic tabular data. It is motivated by the recognition that evaluation frameworks shape research practice and determine how credible reported findings are in the data science community.

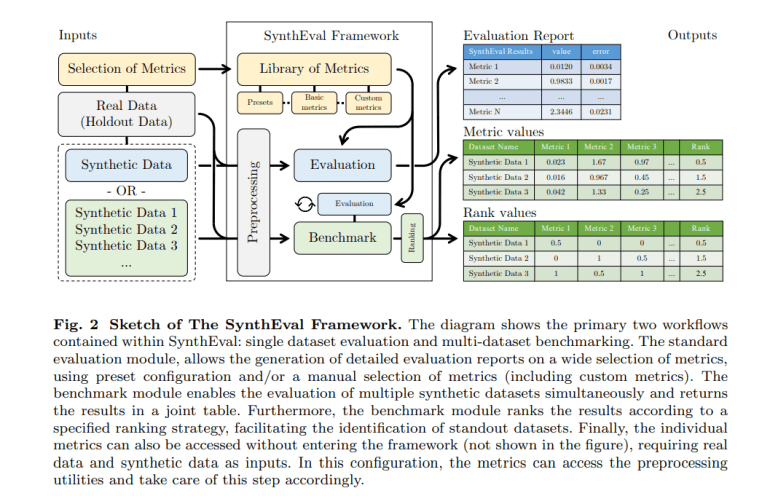

SynthEval ships with a broad set of metrics that users can combine into benchmarks tailored to their own requirements. Its interface lets users either run a predefined benchmark or configure their own evaluation with little effort. Users can also add custom metrics without extensive changes to the framework's code.
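To make the idea of plugging in a custom metric concrete, here is a minimal Python sketch of a name-based metric registry. It illustrates the general pattern under our own assumptions and is not SynthEval's actual extension mechanism: `register_metric`, `METRIC_REGISTRY`, `run_metrics`, and the `mean_abs_diff` metric are hypothetical names, and both datasets are assumed to be numeric NumPy arrays with matching columns.

```python
from typing import Callable, Dict, Iterable

import numpy as np

# Hypothetical registry mapping metric names to functions (not SynthEval's API).
MetricFn = Callable[[np.ndarray, np.ndarray], float]
METRIC_REGISTRY: Dict[str, MetricFn] = {}


def register_metric(name: str):
    """Decorator that adds a metric function to the registry under `name`."""
    def decorator(fn: MetricFn) -> MetricFn:
        if name in METRIC_REGISTRY:
            raise ValueError(f"metric {name!r} is already registered")
        METRIC_REGISTRY[name] = fn
        return fn
    return decorator


@register_metric("mean_abs_diff")
def mean_abs_diff(real: np.ndarray, synth: np.ndarray) -> float:
    """Average absolute difference between real and synthetic column means."""
    return float(np.mean(np.abs(real.mean(axis=0) - synth.mean(axis=0))))


def run_metrics(names: Iterable[str], real: np.ndarray, synth: np.ndarray) -> Dict[str, float]:
    """Look up each requested metric by name and evaluate it on the data."""
    return {name: METRIC_REGISTRY[name](real, synth) for name in names}


real = np.array([[1.0, 2.0], [3.0, 4.0]])
synth = np.array([[1.0, 3.0], [3.0, 5.0]])
print(run_metrics(["mean_abs_diff"], real, synth))  # {'mean_abs_diff': 0.5}
```

With a decorator-based registry, a new metric is defined once; the code that runs evaluations looks metrics up by name and does not have to change.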

At its core, SynthEval collects many individual measurements and condenses them into a concise evaluation report or benchmark result. It is built from two main components, the metrics object and the SynthEval interface object, which together streamline the evaluation process and keep it applicable across different datasets and evaluation scenarios. SynthEval can be used either as a Python package or through its command-line interface.
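The split between a metrics object and an interface object can be sketched as follows. This is a hypothetical design for illustration only: the class names `Metric`, `MeanDifference`, `CorrelationDifference`, and `Evaluator` are ours, not SynthEval's, and both datasets are assumed to be numeric NumPy arrays with the same columns.

```python
from abc import ABC, abstractmethod
from typing import Dict, List

import numpy as np


class Metric(ABC):
    """Hypothetical 'metrics object': one measurement comparing real and synthetic data."""

    name: str = "metric"

    @abstractmethod
    def evaluate(self, real: np.ndarray, synth: np.ndarray) -> float:
        ...


class MeanDifference(Metric):
    name = "mean_difference"

    def evaluate(self, real: np.ndarray, synth: np.ndarray) -> float:
        # Largest absolute gap between corresponding column means.
        return float(np.max(np.abs(real.mean(axis=0) - synth.mean(axis=0))))


class CorrelationDifference(Metric):
    name = "correlation_difference"

    def evaluate(self, real: np.ndarray, synth: np.ndarray) -> float:
        # Frobenius norm of the difference between the Pearson correlation matrices.
        diff = np.corrcoef(real, rowvar=False) - np.corrcoef(synth, rowvar=False)
        return float(np.linalg.norm(diff))


class Evaluator:
    """Hypothetical 'interface object': holds the real data and a metric configuration."""

    def __init__(self, real: np.ndarray, metrics: List[Metric]):
        self.real = real
        self.metrics = metrics

    def evaluate(self, synth: np.ndarray) -> Dict[str, float]:
        if synth.shape[1] != self.real.shape[1]:
            raise ValueError("real and synthetic data must have the same columns")
        # Aggregate every configured metric into one flat report.
        return {m.name: m.evaluate(self.real, synth) for m in self.metrics}


rng = np.random.default_rng(0)
real = rng.normal(size=(500, 3))
synth = rng.normal(size=(500, 3))
report = Evaluator(real, [MeanDifference(), CorrelationDifference()]).evaluate(synth)
for name, value in report.items():
    print(f"{name}: {value:.3f}")
```

Keeping each measurement in its own object lets the interface stay generic: it only relies on every metric having a `name` and an `evaluate` method.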

SynthEval's benchmark module can also evaluate multiple synthetic versions of a dataset at the same time, so they can be compared across many metrics. To handle the mix of numerical and categorical columns typical of real tabular data, SynthEval uses equivalents of the correlation matrix that work for mixed data types, and it approximates p-values empirically. These choices aim to capture the structure of real-world data more faithfully and to make evaluation results more robust and broadly applicable.
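A common way to build a correlation-matrix equivalent for mixed data is to pick an association measure per column pair: Pearson correlation for two numerical columns, Cramér's V for two categorical columns, and the correlation ratio (eta) for a numerical and a categorical column. The sketch below assumes that choice, which may differ from the measures SynthEval actually uses, and pairs it with a permutation test as one way of approximating a p-value empirically. All function names are hypothetical.

```python
import numpy as np


def cramers_v(x, y) -> float:
    """Cramér's V between two categorical arrays (no bias correction)."""
    _, xi = np.unique(x, return_inverse=True)
    _, yi = np.unique(y, return_inverse=True)
    table = np.zeros((xi.max() + 1, yi.max() + 1))
    np.add.at(table, (xi, yi), 1)  # contingency table of counts
    n = table.sum()
    expected = table.sum(axis=1, keepdims=True) * table.sum(axis=0, keepdims=True) / n
    chi2 = ((table - expected) ** 2 / expected).sum()
    k = min(table.shape) - 1
    return float(np.sqrt(chi2 / (n * k))) if k > 0 else 0.0


def correlation_ratio(categories, values) -> float:
    """Correlation ratio (eta): share of numeric variance explained by the categories."""
    values = np.asarray(values, dtype=float)
    _, idx = np.unique(categories, return_inverse=True)
    overall = values.mean()
    total = ((values - overall) ** 2).sum()
    if total == 0:
        return 0.0
    between = sum(
        (idx == g).sum() * (values[idx == g].mean() - overall) ** 2
        for g in range(idx.max() + 1)
    )
    return float(np.sqrt(between / total))


def mixed_association_matrix(columns, categorical) -> np.ndarray:
    """Pairwise association matrix; `categorical[i]` flags whether column i is categorical."""
    p = len(columns)
    m = np.eye(p)
    for i in range(p):
        for j in range(i + 1, p):
            a, b = columns[i], columns[j]
            if categorical[i] and categorical[j]:
                v = cramers_v(a, b)
            elif categorical[i]:
                v = correlation_ratio(a, b)
            elif categorical[j]:
                v = correlation_ratio(b, a)
            else:
                v = float(np.corrcoef(a, b)[0, 1])
            m[i, j] = m[j, i] = v
    return m


def permutation_p_value(real, synth, n_perm=2000, seed=0) -> float:
    """Empirical p-value for |mean(real) - mean(synth)| under random relabelling."""
    rng = np.random.default_rng(seed)
    pooled = np.concatenate([real, synth])
    n = len(real)
    observed = abs(real.mean() - synth.mean())
    count = 0
    for _ in range(n_perm):
        perm = rng.permutation(pooled)
        if abs(perm[:n].mean() - perm[n:].mean()) >= observed:
            count += 1
    # Add-one correction keeps the estimate strictly positive.
    return (count + 1) / (n_perm + 1)


rng = np.random.default_rng(1)
age = rng.normal(40, 10, 300)
group = np.where(age > 40, "old", "young")
income = 1000 * age + rng.normal(0, 5000, 300)
m = mixed_association_matrix([age, group, income], [False, True, False])
print(np.round(m, 2))
print(permutation_p_value(age, rng.normal(40, 10, 300)))
```

The matrix mixes signed Pearson values with Cramér's V and eta, which lie in [0, 1]. Comparing a real and a synthetic matrix entry by entry is still meaningful, because each entry uses the same measure in both.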

Conclusion:

SynthEval's release is a notable step for the market for synthetic data evaluation tools. By offering a comprehensive and user-friendly framework, it addresses the need for robust evaluation methods and supports transparency, accountability, and innovation in machine learning. Wider adoption could accelerate progress in privacy-preserving technologies and move the market toward more standardized and effective evaluation of synthetic data.