TL;DR:

- TalkToModel, an interactive platform, aims to demystify machine learning models for users.

- Machine learning models are vital in various fields but often lack transparency.

- Explainable Artificial Intelligence (XAI) seeks to make AI decisions more understandable.

- TalkToModel’s three components facilitate user-friendly interactions with AI models.

- Initial testing indicates promising results, with potential for public release.

Main AI News:

In today’s fast-paced world, machine learning models have seamlessly woven themselves into the fabric of various professional sectors, serving as the backbone of numerous smartphone applications, software suites, and online services. However, while these sophisticated algorithms are ubiquitous, their inner workings remain an enigma to most. This knowledge gap has given rise to a critical challenge: how can we bolster trust in these advanced computational tools and empower users to comprehend the intricacies of AI-driven decision-making?

Enter Explainable Artificial Intelligence (XAI), a branch of machine learning research that aims to demystify these enigmatic models. XAI seeks to create machine learning models that can articulate, to some extent, the rationale behind their conclusions and identify the specific data attributes they treat as pivotal when making a particular prediction. Yet the path to this goal has been fraught with hurdles, as the explanations most XAI methods produce still leave room for interpretation.

In a collaborative effort, researchers from the University of California, Irvine and Harvard University have made significant strides in bridging this comprehension gap. Their brainchild, TalkToModel, is an interactive dialog system designed to explain machine learning models and their predictions to both engineers and people without technical expertise. The platform, featured in Nature Machine Intelligence, lets users pose questions about AI models and receive straightforward, relevant responses.

Dylan Slack, one of the visionary researchers spearheading this initiative, shared their motivation, stating, “We were keen on enhancing the interpretability of models. However, practitioners often grapple with the intricacies of interpretability tools. Hence, we conceived the idea of allowing practitioners to directly engage with machine learning models.”

The recent study led by Slack and his team builds upon their prior work in XAI and human-AI interaction. The primary objective? To unveil a novel platform that distills the complexity of AI into an easily digestible format, akin to how OpenAI’s conversational platform, ChatGPT, adeptly addresses questions.

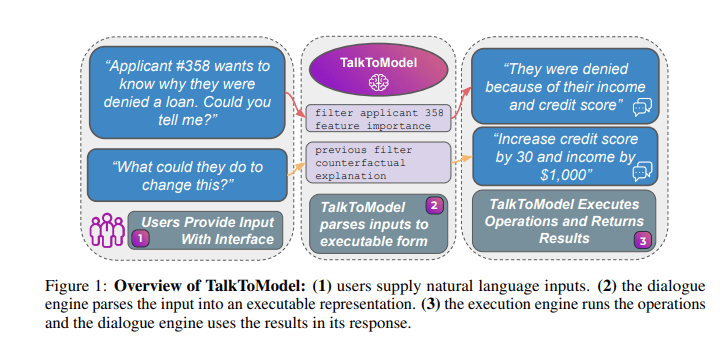

TalkToModel comprises three pivotal components:

1. Adaptive Dialog Engine: This engine is primed to decipher natural language input and craft coherent responses, ensuring that the interaction feels intuitive and seamless.

2. Execution Unit: This component assembles “AI explanations,” translating them into accessible language and delivering them to users in a comprehensible manner.

3. Conversational Interface: Serving as the conduit for user interaction, this software enables users to articulate their queries and receive answers effortlessly.
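
To make the division of labor concrete, here is a minimal Python sketch of how three such components could fit together. It is an illustration only, not TalkToModel's actual code: the function names (`parse`, `execute`, `chat_turn`), the keyword matching that stands in for the dialog engine, and the toy linear model with made-up weights are all assumptions introduced for this example.

```python
from dataclasses import dataclass
from typing import Dict

# Toy stand-in for a trained model: a fixed linear scorer.
# The feature names and weights are hypothetical, chosen only for illustration.
WEIGHTS: Dict[str, float] = {"age": 0.02, "bmi": 0.05, "glucose": 0.03}
BIAS = -5.0


def predict(row: Dict[str, float]) -> float:
    """Return the toy model's raw score for one input row."""
    return BIAS + sum(WEIGHTS[name] * row[name] for name in WEIGHTS)


@dataclass
class Operation:
    """A structured request produced by the dialog engine."""
    name: str


def parse(utterance: str) -> Operation:
    """Dialog engine stand-in: map free text to an operation.

    A real system would use a language model here; simple keyword
    matching is an assumption that keeps the sketch self-contained.
    """
    text = utterance.lower()
    if "why" in text or "important" in text:
        return Operation("explain")
    if "predict" in text:
        return Operation("predict")
    return Operation("unknown")


def execute(op: Operation, row: Dict[str, float]) -> str:
    """Execution unit stand-in: run the operation and phrase the result."""
    if op.name == "predict":
        return f"The model's score for this input is {predict(row):.2f}."
    if op.name == "explain":
        # For a linear model, weight * value is each feature's contribution.
        contributions = {n: WEIGHTS[n] * row[n] for n in WEIGHTS}
        top = max(contributions, key=lambda n: abs(contributions[n]))
        return (
            f"The feature with the largest contribution is '{top}' "
            f"({contributions[top]:+.2f})."
        )
    return "Sorry, I did not understand the question."


def chat_turn(utterance: str, row: Dict[str, float]) -> str:
    """Conversational interface stand-in: one question in, one answer out."""
    return execute(parse(utterance), row)


if __name__ == "__main__":
    patient = {"age": 50, "bmi": 30, "glucose": 120}
    print(chat_turn("What does the model predict?", patient))
    print(chat_turn("Why did it decide that?", patient))
```

The point of the split is that each piece can be swapped independently: a stronger language parser can replace `parse`, and richer explanation methods can replace the body of `execute`, without changing how the user interacts with the system.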

Slack expounded upon the system’s essence, saying, “TalkToModel is a system designed to facilitate open-ended conversations with machine learning models. You simply pose a question about your model’s decision-making process and receive an informative response. This makes model understanding accessible to everyone.”

To gauge the utility of their creation, the team enlisted professionals and students to test TalkToModel and provide feedback. The results were promising: 73% of healthcare professionals expressed interest in using it to better understand AI-driven diagnostic tools, and 85% of machine learning developers said it was easier to use than other XAI tools.

The future holds exciting prospects as TalkToModel undergoes further refinements and potentially becomes accessible to the public. Such a development could play a pivotal role in advancing AI literacy and bolstering trust in AI predictions.

Slack concluded with optimism, remarking, “The insights gleaned from our studies involving a diverse range of participants – from graduate students to healthcare workers – underscore the potential utility of this system in demystifying AI models. We are committed to exploring ways to leverage advanced AI systems, akin to ChatGPT-style models, to enhance the user experience with this groundbreaking platform.”

Conclusion:

TalkToModel represents a significant advancement in the AI landscape, addressing the critical need for transparency and understanding. Its user-friendly approach, backed by positive feedback, has the potential to enhance AI literacy and foster greater trust in AI applications. This development underscores the growing importance of accessible AI explanation tools in the market, promising improved user engagement and confidence in AI-driven solutions across various industries.