- Tencent introduces ELLA, enhancing text-to-image models by integrating Large Language Models (LLMs) without the need for U-Net or LLM training.

- ELLA’s Timestep-Aware Semantic Connector dynamically adjusts semantic features, improving comprehension of complex prompts.

- ELLA outperforms existing models on dense prompts, as measured by the Dense Prompt Graph Benchmark (DPG-Bench).

- Compatibility with community models and downstream tools highlights ELLA’s potential for widespread adoption.

- Ablation studies demonstrate the robustness of ELLA’s design choices and LLM selection.

Main AI News:

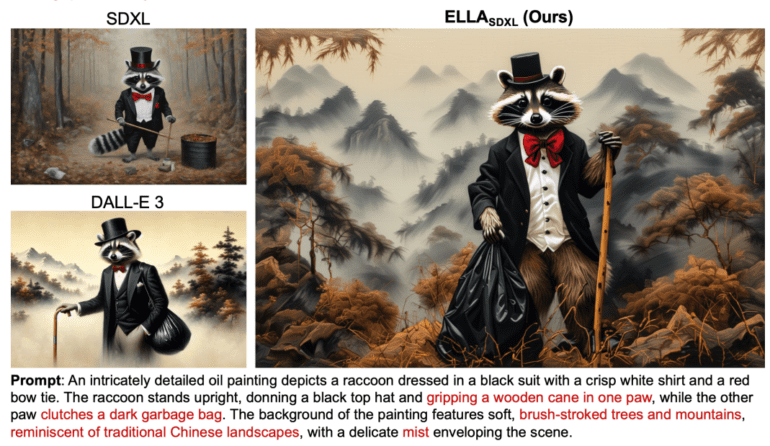

Recent advances in text-to-image generation have been driven largely by diffusion models. However, most of these models rely on CLIP as their text encoder, which limits their ability to understand long prompts that involve many objects, detailed attributes, complex relationships, and long-text alignment. To address this, the study introduces the Efficient Large Language Model Adapter (ELLA). ELLA connects powerful Large Language Models (LLMs) to text-to-image diffusion models and improves their capabilities without training either the U-Net or the LLM. Its key component is the Timestep-Aware Semantic Connector (TSC), which dynamically extracts timestep-dependent conditions from the pre-trained LLM. By adapting semantic features across the stages of the denoising process, ELLA helps the diffusion model interpret lengthy and complex prompts.

In recent years, diffusion models have produced visually appealing images that match their text prompts. However, conventional models, including CLIP-based variants, struggle with dense prompts: they have difficulty handling intricate relationships and detailed descriptions of many objects. ELLA offers a lightweight remedy. It equips existing models with a powerful LLM, improving their ability to follow prompts and understand long, dense text without training the LLM or the U-Net.

Within ELLA’s architecture, a pre-trained LLM such as T5, TinyLlama, or LLaMA-2 is paired with the TSC to maintain semantic alignment throughout the denoising process. The TSC is based on the resampler architecture and adjusts semantic features at different denoising stages. Supplying it with timestep information strengthens its dynamic text feature extraction and lets it condition the frozen U-Net at different semantic levels.
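To make the idea of a timestep-aware resampler more concrete, here is a minimal toy sketch in plain Python. It is not the paper's implementation: the function names (`timestep_embedding`, `connector`), the dimensions, the random stand-in features, and the choice to inject the timestep by adding a sinusoidal embedding to the queries are all assumptions made for illustration, and a real module would use learned projections and trained weights. The sketch only shows the general pattern: a fixed set of queries cross-attends over variable-length LLM token features, and the result changes with the denoising timestep.

```python
import math
import random


def timestep_embedding(t, dim):
    """Sinusoidal embedding of a scalar timestep (dim is assumed even)."""
    half = dim // 2
    freqs = [math.exp(-math.log(10000.0) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def connector(text_feats, queries, t):
    """Toy resampler: map any number of text tokens to len(queries) condition tokens.

    Assumption: the timestep is injected by adding its embedding to each query.
    This is a placeholder for whatever learned conditioning a real module uses.
    """
    dim = len(queries[0])
    t_emb = timestep_embedding(t, dim)
    conditions = []
    for q in queries:
        q_t = [qi + ti for qi, ti in zip(q, t_emb)]
        # Scaled dot-product attention of one query over all text tokens.
        scores = [sum(a * b for a, b in zip(q_t, k)) / math.sqrt(dim) for k in text_feats]
        weights = softmax(scores)
        # Each condition token is an attention-weighted average of the text features.
        conditions.append([sum(w * k[j] for w, k in zip(weights, text_feats)) for j in range(dim)])
    return conditions


random.seed(0)
DIM, NUM_TOKENS, NUM_QUERIES = 8, 12, 4
# Random stand-ins for frozen LLM token features and for the connector's queries.
text_feats = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_TOKENS)]
queries = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_QUERIES)]

early = connector(text_feats, queries, t=900)  # a noisy, early denoising step
late = connector(text_feats, queries, t=50)    # a late, nearly clean step
print(len(early), len(early[0]))  # prints "4 8"
```

The same prompt features yield different condition tokens at `t=900` and `t=50`, which is the property the text describes. The output length is fixed by the number of queries, so a long prompt does not change the shape of what the frozen U-Net receives.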

The paper introduces the Dense Prompt Graph Benchmark (DPG-Bench), a set of 1,065 lengthy, dense prompts for assessing how well text-to-image models handle dense prompts. It allows a more comprehensive evaluation than existing benchmarks because it tests semantic alignment on challenging, information-rich prompts. The paper also highlights ELLA’s compatibility with existing community models and downstream tools, which offers a promising avenue for further enhancement.

The paper reviews related work on compositional text-to-image diffusion models and their limitations in following intricate instructions. This review motivates ELLA’s contributions by underscoring the drawbacks of CLIP-based models and the value of integrating powerful LLMs such as T5 and LLaMA-2 into existing frameworks.

ELLA uses an LLM as the text encoder and introduces the TSC for dynamic semantic alignment. Comprehensive experiments compare ELLA with state-of-the-art models on dense prompts using DPG-Bench and on short compositional prompts using a subset of T2I-CompBench. The results show that ELLA performs better, particularly in complex prompt comprehension and in compositions involving many objects with diverse attributes and relationships.

Ablation studies examine how different LLM choices and alternative architecture designs affect ELLA’s performance. They show that both the design of the TSC module and the choice of LLM significantly influence how well the model understands simple and complex prompts, which supports the design choices in the proposed approach.

ELLA effectively improves text-to-image generation, enabling models to interpret intricate prompts without retraining the LLM or the U-Net. The paper acknowledges limitations, such as the constraints imposed by the frozen U-Net and the sensitivity of multimodal LLMs (MLLMs), and proposes directions for future research, including addressing these issues and exploring further integration of MLLMs with diffusion models.

Source: Marktechpost Media Inc.

Conclusion:

ELLA marks a significant advance in text-to-image generation: it integrates powerful Large Language Models without requiring training of the LLM or the U-Net. Its strong performance on dense prompts and its compatibility with existing models suggest a promising future for text-to-image capabilities across industries, with potential applications in content generation, design automation, and visual storytelling. Businesses should consider adopting ELLA-powered solutions to stay competitive in an increasingly visual market.