TL;DR:

- AI transforms e-books into high-quality audiobooks efficiently.

- It overcomes issues like robotic narration and content selection.

- Thousands of audiobooks were created using neural text-to-speech tech.

- Offers user customization of voice, pace, pitch, and intonation.

- Emotion-inference system enhances character interactions.

- Significant potential to expand the audiobook market.

Main AI News:

In today’s fast-paced world, the demand for audiobooks has soared, surpassing traditional reading methods. Audiobooks offer the convenience of absorbing information on the go and have opened new avenues for accessibility, benefiting a diverse audience, including children, the visually impaired, and language learners. However, the conventional audiobook production process is both time-consuming and costly, resulting in inconsistent recording quality, often relying on human narrators or volunteer-driven initiatives like LibriVox. Keeping pace with the ever-expanding library of published books has proven to be a formidable challenge.

Nevertheless, the advent of automatic audiobook creation has revolutionized the industry, addressing the drawbacks of the earlier robotic-sounding text-to-speech systems and the complexity of determining what content should or shouldn’t be narrated, such as tables of contents, page numbers, figures, and footnotes. Enter a groundbreaking solution that leverages cutting-edge advancements in neural text-to-speech technology, expressive narration, scalable computing, and automated content recognition to generate thousands of lifelike audiobooks.

This groundbreaking endeavor has contributed an impressive library of over 5,000 audiobooks, totaling a staggering 35,000 hours, to the open-source community. Moreover, they have developed demonstrative software that empowers users to craft their own audiobooks by simply reading any book from the library aloud in their unique voices, using only a brief voice sample. Their innovation introduces a scalable approach to converting HTML-based e-books into exceptional audiobooks. At the core of this transformative pipeline is SynapseML, a scalable machine learning platform that orchestrates the entire audiobook creation process, offering a seamless experience.

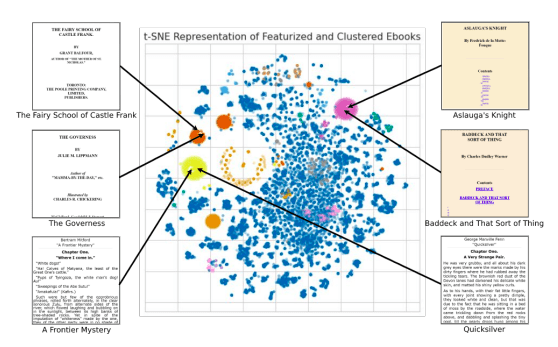

The initiative commences with the utilization of thousands of free e-books generously provided by Project Gutenberg, with a primary focus on the HTML format, which lends itself perfectly to automated parsing, making it the optimal choice for this endeavor. The team has successfully organized and standardized Project Gutenberg’s HTML pages, identifying numerous clusters of similarly structured files. This standardization facilitated the swift and deterministic parsing of a vast array of books, enabling them to concentrate their efforts on texts that promise high-quality recordings.

The outcome of their clustering approach is illustrated in Figure 1, depicting the emergence of various groups of similarly organized electronic books within the Project Gutenberg collection. Post-processing, a plain text stream is extracted and seamlessly fed into text-to-speech algorithms. Varied reading techniques are employed to cater to the diverse needs of audiobooks. Nonfiction benefits from a clear and objective voice, while fiction, especially dialogue-heavy narratives, thrives with expressive readings, even a touch of “acting.” Notably, during live demonstrations, customers are given the freedom to customize the voice, pace, pitch, and intonation of the text.

Remarkably, the team employs zero-shot text-to-speech techniques to effectively transfer voice features from a limited number of user-enrolled recordings, enabling users to swiftly generate personalized audiobooks with only a small audio sample. To add depth and authenticity to the narrations, an automated speaker and emotion inference system dynamically adjusts the reading voice and tone based on the context, enhancing the realism and engagement of audiobooks, particularly those with multiple characters and dynamic interactions.

The approach involves segmenting the text into narrative and dialogue, assigning distinct speakers to each line of conversation, and employing self-supervised techniques to predict the emotional tone of each dialogue. The integration of a multi-style and context-based neural text-to-speech model further enhances the differentiation of voices and emotions between narrators and characters, promising to significantly expand the availability and accessibility of audiobooks.

Conclusion:

The AI-driven revolution in audiobook production not only addresses previous challenges but also presents substantial growth opportunities for the audiobook market. With the ability to efficiently create high-quality, customizable audiobooks, AI technology is poised to meet the increasing demand for accessible and engaging content, appealing to a broader audience and potentially reshaping the industry’s landscape.