- Artificial neural networks (ANNs) exhibit consistent patterns in learned representations across diverse training conditions.

- Fourier features, including localized and non-localized variants, emerge prominently in image models trained for various tasks.

- Collaborative research from KTH, Redwood Center for Theoretical Neuroscience, and UC Santa Barbara introduces a mathematical explanation for the prevalence of Fourier features in neural networks.

- The framework posits downstream invariance as a key factor, rooted in symmetries present in natural data.

- Theoretical theorems elucidate the relationship between invariant parametric functions and group harmonics, providing insights into neural representation learning.

- Model implementation validates the theory, showcasing its applicability across different learning objectives.

Main AI News:

In the realm of artificial neural networks (ANNs), a fascinating phenomenon emerges: despite diverse initializations, datasets, or training goals, models within the same data domain tend to converge towards akin learned representations. Notably, in various image models, the initial layer weights gravitate towards Gabor filters and color-contrast detectors, embodying a universal aspect transcending both biological and artificial systems, reminiscent of visual cortex functionalities. However, while practical, these observations lack robust theoretical underpinnings, leaving a void in our comprehension of literature-interpreting machines.

Canonical 2D Fourier basis functions, particularly localized variants, emerge as prevalent features in image models, such as Gabor filters and wavelets, when trained for tasks encompassing efficient coding, classification, and temporal coherence. Intriguingly, non-localized Fourier features manifest in networks tackling tasks permitting cyclic wraparound, like modular arithmetic, indicative of a broader applicability in tasks involving cyclic translations.

A collaborative effort from KTH, Redwood Center for Theoretical Neuroscience, and UC Santa Barbara endeavors to elucidate the emergence of Fourier features in learning systems, particularly neural networks. Their proposition attributes this emergence to downstream invariance, where learners become insensitive to specific transformations like planar translation or rotation. The team’s mathematical framework furnishes theoretical assurances regarding Fourier features within invariant learners, applicable across various machine-learning paradigms.

Central to their explanation is the notion of invariance as a fundamental bias inherent in learning systems, stemming from symmetries present in natural data. Leveraging this concept, they extend the standard discrete Fourier transform to encompass more general Fourier transforms on groups, replacing harmonic bases with diverse unitary group representations. Their theoretical groundwork, rooted in sparse coding models, delineates conditions under which sparse linear combinations recover original bases generating data, thereby laying a cornerstone for understanding representations in neural systems.

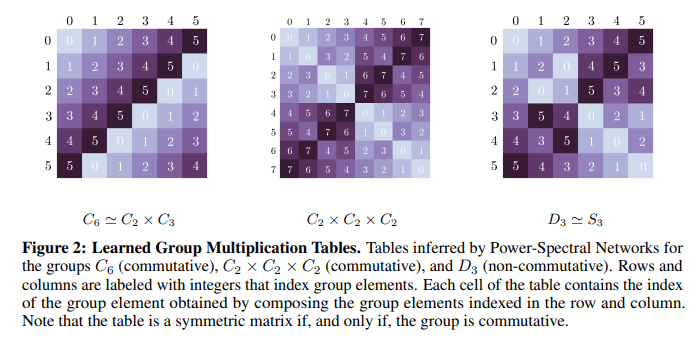

Two pivotal theorems presented by the team encapsulate their framework: the first posits that an invariant parametric function’s weights align with harmonics of a finite group, while the second asserts that nearly invariant functions, coupled with orthonormal weights, facilitate the recovery of the group’s multiplicative table. Furthermore, they validate their theory through model implementation, demonstrating its efficacy across various learning tasks aimed at fostering invariance and extracting group structures from weight distributions.

This interdisciplinary endeavor not only enriches our understanding of neural network behaviors but also offers a principled approach towards deciphering the underlying mechanisms governing learning systems’ emergence and evolution.

Conclusion:

Understanding the mathematical underpinnings of Fourier features in learning systems offers profound implications for the market. This knowledge enhances the development of more robust and efficient neural network architectures, with potential applications spanning various industries such as computer vision, natural language processing, and autonomous systems. Additionally, it fosters a deeper understanding of how neural networks interpret and process data, paving the way for more sophisticated AI-driven solutions that can adapt and generalize to diverse real-world scenarios.