TL;DR:

- PLASMA is a framework that equips small language models with procedural planning skills.

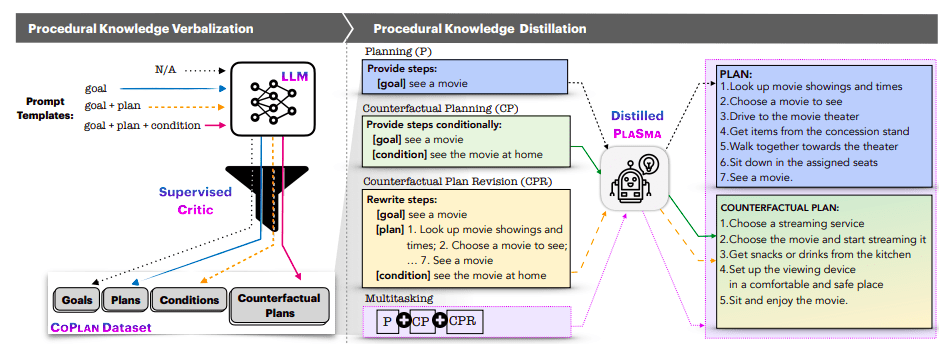

- It combines inference-time decoding techniques and symbolic procedural knowledge distillation.

- COPLAN, a procedural planning dataset, is created through knowledge verbalization.

- Task-specific and multi-task distillation using COPLAN trains smaller models within the PLASMA framework.

- PLASMA+ incorporates a verifier-guided step-wise beam search to improve plan quality.

- Small student models outperform their larger teacher model by an average of 17.57% on standard planning tasks.

- Counterfactual planning skills are successfully distilled into small-size models for the first time.

- PLASMA’s approach surpasses previous GPT-3-based work in executability and accuracy.

- This development presents opportunities for businesses to leverage small language models in planning tasks.

Main AI News:

Large language models have proven proficient at commonsense tasks. However, their high computational cost and limited accessibility hinder widespread deployment. To address this, researchers have developed PLASMA (PLAn with tiny models), a two-pronged framework that equips small language models (LMs) with planning skills.

The framework combines inference-time decoding techniques with symbolic procedural knowledge distillation to enhance the planning capabilities of small LMs. The research team introduces a two-stage formulation of extended procedural knowledge distillation: knowledge verbalization followed by knowledge distillation. The verbalization stage produces COPLAN, a sizable (counterfactual) procedural planning dataset. The distillation stage then uses COPLAN to train smaller models, either on a single task (task-specific distillation) or on several tasks at once (multi-task distillation).
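To make the difference between task-specific and multi-task distillation concrete, here is a minimal sketch of how planning records might be turned into training pairs. The record fields, task tags, and prompt format are hypothetical choices for illustration; the article does not describe COPLAN's actual schema.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class PlanRecord:
    """One verbalized example. Field names are hypothetical, not COPLAN's schema."""
    goal: str
    steps: List[str]
    condition: Optional[str] = None  # set only for counterfactual examples


def task_of(rec: PlanRecord) -> str:
    """Assign an illustrative task tag based on whether a condition is present."""
    return "counterfactual_planning" if rec.condition else "planning"


def to_training_pair(rec: PlanRecord) -> Tuple[str, str]:
    """Format a record as a (source, target) text pair for a seq2seq student."""
    source = f"[{task_of(rec)}] goal: {rec.goal}"
    if rec.condition:
        source += f" | condition: {rec.condition}"
    target = " ".join(f"Step {i}: {s}" for i, s in enumerate(rec.steps, 1))
    return source, target


def build_pairs(records: List[PlanRecord], task: Optional[str] = None):
    """task=None keeps every task (multi-task); a tag keeps one task (task-specific)."""
    return [to_training_pair(r) for r in records if task is None or task_of(r) == task]


records = [
    PlanRecord("bake a cake", ["preheat the oven", "mix the batter", "bake"]),
    PlanRecord(
        "bake a cake",
        ["buy an egg substitute", "mix the batter", "bake"],
        condition="you have no eggs",
    ),
]

multi_task = build_pairs(records)                    # one student, all tasks
planning_only = build_pairs(records, task="planning")  # one student per task
```

In this sketch the only difference between the two regimes is which records reach the student; the task tag in the source text lets a single multi-task student tell the tasks apart.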

To further improve the quality of plans generated by small LMs, PLASMA+ incorporates a verifier-guided step-wise beam search. The step-wise verifier improves the semantic coherence and temporal accuracy of the generated plans. Experimental results demonstrate the success of this approach: the smaller student models surpass their larger teacher model by an average of 17.57% on standard planning tasks. Remarkably, the best student model remains competitive even with GPT-3, which is 16 times the size of the student models.
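The idea of a verifier-guided step-wise beam search can be sketched as follows. This is a toy illustration, not the authors' implementation: `toy_propose` stands in for the small LM, `toy_verify` stands in for the step verifier, and the scoring rule (a weighted sum of the LM log-probability and the log of the verifier score, mixed by `alpha`) is an assumption made for this example.

```python
import math
from typing import Callable, List, Tuple

Plan = Tuple[str, ...]
END = "<end>"  # hypothetical end-of-plan marker


def stepwise_beam_search(
    goal: str,
    propose_steps: Callable[[str, Plan], List[Tuple[str, float]]],
    verify_step: Callable[[str, Plan, str], float],
    beam_width: int = 2,
    max_steps: int = 5,
    alpha: float = 0.5,
) -> Plan:
    """Grow plans one step at a time, ranking beams by LM and verifier scores.

    propose_steps returns (next step, log-probability) pairs.
    verify_step returns a validity score in (0, 1] for appending that step.
    alpha weights the LM score against the verifier score (an assumption).
    """
    beams: List[Tuple[Plan, float, bool]] = [((), 0.0, False)]
    for _ in range(max_steps):
        candidates: List[Tuple[Plan, float, bool]] = []
        for plan, score, done in beams:
            if done:  # finished plans are carried over unchanged
                candidates.append((plan, score, True))
                continue
            for step, logp in propose_steps(goal, plan):
                if step == END:
                    candidates.append((plan, score + alpha * logp, True))
                    continue
                validity = max(verify_step(goal, plan, step), 1e-9)  # avoid log(0)
                step_score = alpha * logp + (1 - alpha) * math.log(validity)
                candidates.append((plan + (step,), score + step_score, False))
        if not candidates:
            break
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
        if all(done for _, _, done in beams):
            break
    return beams[0][0]


ORDER = ["boil water", "steep the tea bag", "drink the tea"]


def toy_propose(goal: str, plan: Plan) -> List[Tuple[str, float]]:
    """Toy LM that always slightly prefers a temporally wrong step."""
    if len(plan) >= len(ORDER):
        return [(END, 0.0)]
    return [
        ("drink the tea", math.log(0.4)),
        ("boil water", math.log(0.3)),
        ("steep the tea bag", math.log(0.3)),
    ]


def toy_verify(goal: str, plan: Plan, step: str) -> float:
    """Toy verifier: high score only if the step is the next one in ORDER."""
    return 0.9 if step == ORDER[len(plan)] else 0.1


guided = stepwise_beam_search("make tea", toy_propose, toy_verify)
lm_only = stepwise_beam_search("make tea", toy_propose, toy_verify, alpha=1.0)
print(guided)
print(lm_only)
```

With the default `alpha=0.5`, the verifier steers the search to the temporally ordered plan (boil, steep, drink). Setting `alpha=1.0` ignores the verifier, and the toy LM's preference for "drink the tea" wins at every step, which illustrates why step-level verification can matter for temporal accuracy.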

Notably, this research is also the first to distill counterfactual planning skills into small models, achieving a 93% validity rate in human evaluation. PLASMA’s approach also outperforms previous GPT-3-based work in simulated settings, improving executability by 17% and accuracy by 25%. Overall, the framework, with its symbolic procedural distillation, decoding-time algorithm, proposed tasks, and the COPLAN dataset, provides a valuable resource and a starting point for further procedural planning research.

Knowledge Distillation from Symbolic Procedures. Source: Marktechpost Media

Conclusion:

PLASMA extends the capabilities of small language models by equipping them with procedural planning skills. This lets businesses use smaller, cheaper models for planning tasks that previously called for much larger ones. The ability to distill counterfactual planning skills makes small language models more versatile still, since they can adapt a plan when conditions change. As the market evolves, PLASMA-like approaches give organizations a practical way to apply language models to planning and strategy implementation.