TL;DR:

- The University of Zurich introduces SwissBERT, a multilingual language model for Switzerland.

- SwissBERT is trained on 21 million Swiss news articles in German, French, Italian, and Romansh Grischun.

- It addresses challenges faced by Swiss researchers in performing multilingual tasks.

- SwissBERT combines language models for Switzerland’s four national languages, enabling multilingual representations.

- The model outperforms common baselines in tasks like named entity recognition and detecting stances in user-generated comments.

- SwissBERT excels in German-Romansh alignment and zero-shot cross-lingual transfer.

- Challenges remain in recognizing named entities in historical, OCR-processed news.

- SwissBERT offers potential for future research and non-commercial applications, benefiting from its multilingualism.

Main AI News:

In the realm of Natural Language Processing (NLP), the renowned BERT model has long reigned supreme as a leading language model. Its versatility in transforming input sequences into output sequences for various NLP tasks has made it an invaluable tool. BERT, short for Bidirectional Encoder Representations from Transformers, harnesses the power of a Transformer attention mechanism. This mechanism allows the model to learn contextual relationships between words or sub-words within a textual corpus. Through self-supervised learning techniques, BERT has emerged as a prominent example of NLP advancement.

Before the advent of BERT, language models analyzed text sequences during training in either a left-to-right or a combined left-to-right and right-to-left manner. This unidirectional approach proved effective in generating sentences by predicting the subsequent words and assembling them into meaningful sequences. However, BERT introduced bidirectional training, which granted a deeper understanding of language context and flow compared to its predecessors.

Initially developed for the English language, BERT paved the way for language models tailored to specific languages, such as CamemBERT for French and GilBERTo for Italian. In a groundbreaking feat, a team of researchers from the University of Zurich has recently unveiled SwissBERT—a multilingual language model designed specifically for Switzerland. SwissBERT is trained on a vast corpus of over 21 million Swiss news articles in Swiss Standard German, French, Italian, and Romansh Grischun, encompassing a staggering total of 12 billion tokens.

SwissBERT addresses the unique challenges faced by Swiss researchers in performing multilingual tasks. Switzerland boasts four official languages—German, French, Italian, and Romansh—and combining individual language models for these diverse languages proves arduous. Furthermore, there is a lack of a dedicated neural language model for Romansh, the fourth national language. Prior to SwissBERT, the absence of a unified model hindered multilingual endeavors within the NLP field. However, SwissBERT overcomes this hurdle by seamlessly amalgamating articles in different languages and exploiting common entities and events within the news to create multilingual representations.

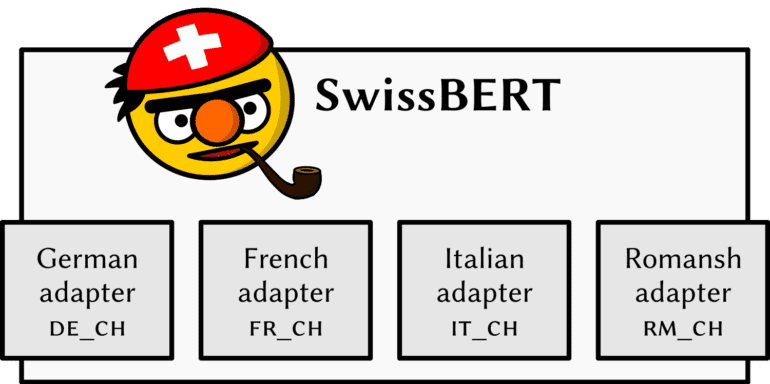

To develop SwissBERT, the researchers remodeled a cross-lingual Modular (X-MOD) transformer, which was pre-trained in 81 languages. Through the meticulous adaptation of custom language adapters, they tailored the pre-trained X-MOD transformer to their corpus. Additionally, the team constructed a Switzerland-specific subword vocabulary for SwissBERT, resulting in an impressive model comprising a staggering 153 million parameters.

The performance of SwissBERT has been evaluated across various tasks, including named entity recognition on contemporary news (SwissNER) and detecting stances in user-generated comments on Swiss politics. In these evaluations, SwissBERT surpassed common baselines and exhibited superior performance compared to XLM-R in detecting stance. Notably, when scrutinizing the model’s capabilities in Romansh, SwissBERT outperformed models lacking training in the language, excelling in zero-shot cross-lingual transfer and facilitating German-Romansh alignment at both word and sentence levels. However, the model faced challenges in recognizing named entities in historical, OCR-processed news.

Conclusion:

The development of SwissBERT, a multilingual language model for Switzerland’s four national languages, marks a significant milestone in the NLP market. By addressing the challenges of combining individual language models, SwissBERT provides researchers in Switzerland with a powerful tool for performing multilingual tasks. Its superior performance in named entity recognition and stance detection, along with its impressive German-Romansh alignment capabilities, positions SwissBERT as a valuable asset for language processing tasks. While challenges in historical news analysis remain, the release of SwissBERT and its potential for further adaptation opens up new opportunities for future research endeavors and non-commercial applications. The market can anticipate increased efficiency and accuracy in multilingual NLP tasks, thanks to the versatility of SwissBERT.