TL;DR:

- Tied-LoRA, a technique from NVIDIA researchers, makes Low-rank Adaptation (LoRA) more parameter-efficient.

- It combines weight tying and selective training to reduce trainable parameters while maintaining performance.

- Systematic experiments identify one configuration, vBuA, that outperforms the other Tied-LoRA variants while using 87% fewer trainable parameters than standard LoRA.

- On tasks such as extractive question answering and summarization, Tied-LoRA stays competitive with standard LoRA while training far fewer parameters.

- Tied-LoRA could replace standard LoRA on tasks such as commonsense natural language inference (NLI) and extractive question answering (QA), while using only 13% of its parameters.

Main AI News:

In artificial intelligence, where efficiency and performance are both paramount, a new technique called Tied-LoRA aims to improve Low-rank Adaptation (LoRA) methods. Developed by researchers at NVIDIA, Tied-LoRA combines weight tying with selective training to achieve greater parameter efficiency.

LoRA, which cuts the number of trainable parameters by learning low-rank approximations of weight updates, was already an established parameter-efficient fine-tuning technique. AdaLoRA extended it with dynamic rank adjustment and combined adapter tuning with LoRA. VeRA, proposed by Kopiczko et al., reduces parameters further by pairing frozen low-rank matrices with trainable scaling vectors. QLoRA pushed efficiency further still, using a quantized base model to make LoRA fine-tuning memory-efficient.
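For readers new to LoRA, here is a minimal NumPy sketch of the basic update these methods build on. It assumes the formulation from the original LoRA paper, h = Wx + (alpha/r)·BAx, with the pretrained weight W frozen and only the low-rank factors A and B trained. The function names, the alpha default, and the matrix sizes are illustrative choices, not taken from the Tied-LoRA work.

```python
import numpy as np

def lora_forward(x, W, A, B, alpha=16.0):
    """Standard LoRA forward pass: h = W x + (alpha / r) * B A x.

    W (d_out x d_in) is the frozen pretrained weight; only
    A (r x d_in) and B (d_out x r) would be trained.
    """
    r = A.shape[0]
    return W @ x + (alpha / r) * (B @ (A @ x))

def lora_trainable_params(d_out, d_in, r):
    # A contributes r * d_in entries and B contributes d_out * r entries.
    return r * (d_in + d_out)

rng = np.random.default_rng(0)
d_out, d_in, r = 64, 64, 4
W = rng.standard_normal((d_out, d_in))     # frozen pretrained weight
A = rng.standard_normal((r, d_in)) * 0.01  # trainable, small random init
B = np.zeros((d_out, r))                   # trainable, zero init
x = rng.standard_normal(d_in)

h = lora_forward(x, W, A, B)
print(lora_trainable_params(d_out, d_in, r), d_out * d_in)  # 512 4096
```

Because B starts at zero, the adapted layer initially reproduces the pretrained layer exactly, and this 64×64 example trains 512 parameters instead of 4,096.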

Tied-LoRA's key idea is weight tying: the same low-rank weight matrices are shared across all layers of the base language model instead of each layer learning its own, which sharply reduces the number of trainable parameters. The researchers then systematically explore combinations of training, freezing, and tying these parameters to find the best balance between performance and efficiency.
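Weight tying can be illustrated with a short sketch. It assumes, as the configuration name vBuA suggests, that each layer's update has the form ΔW_l = diag(v_l)·B·diag(u_l)·A, where A and B are shared by every layer and u_l, v_l are small per-layer scaling vectors. Treat this form as an assumption rather than the authors' exact code; the helper `tied_lora_deltas` is hypothetical.

```python
import numpy as np

def tied_lora_deltas(A, B, us, vs):
    """Per-layer low-rank updates built from ONE shared pair (A, B).

    Assumed (hypothetical) form: delta_W_l = diag(v_l) @ B @ diag(u_l) @ A.
    A (r x d_in) and B (d_out x r) are tied across all layers; each layer
    keeps only its own scaling vectors u_l (length r) and v_l (length d_out).
    """
    return [np.diag(v) @ B @ np.diag(u) @ A for u, v in zip(us, vs)]

rng = np.random.default_rng(0)
n_layers, d, r = 4, 32, 2
A = rng.standard_normal((r, d))  # shared down-projection
B = rng.standard_normal((d, r))  # shared up-projection
us = [np.ones(r) for _ in range(n_layers)]  # per-layer scaling, rank dimension
vs = [np.ones(d) for _ in range(n_layers)]  # per-layer scaling, output dimension

deltas = tied_lora_deltas(A, B, us, vs)
# With every scaling vector at 1, all layers receive the identical update B @ A.
print(all(np.allclose(dW, B @ A) for dW in deltas))  # True

# Changing one layer's scaling vector specializes that layer only.
us[1] = np.array([2.0, 0.5])
deltas = tied_lora_deltas(A, B, us, vs)
print(np.allclose(deltas[0], deltas[1]))  # False: layer 1 now differs from layer 0
```

Only the scaling vectors are layer-specific, so each additional layer costs r + d extra parameters rather than a whole new pair of low-rank matrices.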

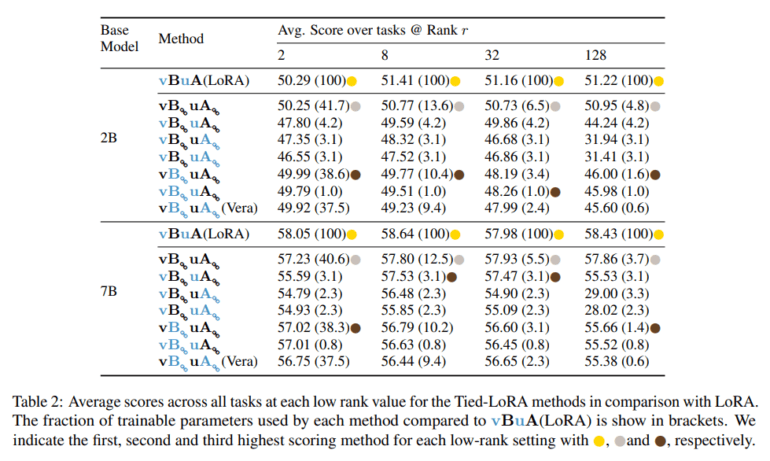

Systematic experiments across diverse tasks and base language models show that one Tied-LoRA configuration, vBuA, outperforms the other variants while cutting trainable parameters by 87% relative to standard LoRA. On extractive question answering, summarization, and mathematical reasoning, Tied-LoRA improved parameter efficiency while keeping performance competitive.
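To see where savings of this size come from, the following sketch counts trainable adapter parameters under different tying and freezing choices. It assumes square d × d projections, one adapted projection per layer, and a per-layer update of the form diag(v_l)·B·diag(u_l)·A with A and B optionally tied across layers. The function `trainable_params` and all sizes are hypothetical, and because the ratio depends on rank, layer count, and which projections are adapted, it is not expected to reproduce the article's 13% figure.

```python
def trainable_params(d, r, n_layers, tied, trainable):
    """Count trainable adapter parameters for square d x d projections.

    tied:      if True, A and B exist once and are shared by all layers;
               otherwise every layer has its own copy.
    trainable: subset of {"A", "B", "u", "v"} that is updated; the rest are frozen.
    u (length r) and v (length d) are always per-layer scaling vectors.
    """
    copies = 1 if tied else n_layers
    count = 0
    if "A" in trainable:
        count += copies * r * d
    if "B" in trainable:
        count += copies * d * r
    if "u" in trainable:
        count += n_layers * r
    if "v" in trainable:
        count += n_layers * d
    return count

# Hypothetical sizes, not the paper's experimental setup.
d, r, L = 4096, 8, 32
lora_count = trainable_params(d, r, L, tied=False, trainable={"A", "B"})
tied_count = trainable_params(d, r, L, tied=True, trainable={"A", "B", "u", "v"})
print(lora_count, tied_count, f"{tied_count / lora_count:.1%}")  # 2097152 196864 9.4%
```

Freezing A and B and training only the scaling vectors (a VeRA-like choice) shrinks the count further to L·(r + d), which is why the choice of what to freeze matters as much as the tying itself.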

These results suggest that Tied-LoRA could replace standard LoRA on tasks such as commonsense natural language inference (NLI), extractive question answering (QA), and summarization, while using only 13% of LoRA's parameters. Its limitations, and how it compares with other parameter-efficient methods, still need closer study; that analysis should guide future exploration and refinement of the technique.

Conclusion:

Tied-LoRA could matter for businesses that fine-tune language models: it substantially reduces trainable parameters without compromising performance. Fewer trainable parameters can lower the cost of fine-tuning, chiefly in memory and adapter storage, making customized AI applications more accessible and cost-effective across a wide range of industries. Companies that adopt Tied-LoRA could gain a competitive edge by adapting their models more cheaply and efficiently.