TL;DR:

- TinyLlama, a 1.1 billion parameter language model, addresses the need for efficient yet high-performing models in natural language processing.

- The traditional preference for larger models with vast datasets has posed accessibility challenges due to extensive computational requirements.

- TinyLlama builds on the Llama 2 architecture and uses FlashAttention to improve computational efficiency.

- Despite its smaller size, TinyLlama performs well across a range of downstream language tasks and outperforms open-source models of comparable size on commonsense reasoning and problem-solving benchmarks.

- This model’s success signifies the potential for creating powerful language models without the need for extensive computational resources.

- It paves the way for more inclusive and diverse research opportunities in NLP.

Main AI News:

In the realm of natural language processing, language models play a pivotal role, and their development and refinement are essential for achieving precision and efficiency. The prevailing trend has centered on building ever larger and more intricate models to improve the ability to comprehend and produce human-like text. These models hold a crucial place in various facets of the field, from translation to text generation, pushing the boundaries of machine understanding of human language.

A significant challenge in this domain is to create models that strike a balance between computational demands and top-tier performance. Historically, larger models have been favored for their strength in handling complex language tasks. However, their substantial computational requirements pose formidable obstacles to accessibility and practicality for a broader audience, particularly for those with limited resources.

Traditional language model development has predominantly concentrated on training mammoth models on vast datasets. These models, characterized by their colossal size and extensive training data, undeniably possess formidable capabilities. Nevertheless, they demand substantial computing power and resources, a barrier that can deter many researchers and practitioners and, in turn, narrow the scope of experimentation and innovation.

Enter TinyLlama, a language model introduced by the StatNLP Research Group at the Singapore University of Technology and Design, designed specifically to confront these challenges head-on. With 1.1 billion parameters, this compact language model distinguishes itself by economizing on computational resources while maintaining a remarkable level of performance. TinyLlama is open source and was pre-trained on approximately 1 trillion tokens for roughly three epochs, marking a significant stride towards making high-quality natural language processing tools more accessible and attainable for a wider user base.
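To make the 1.1 billion figure concrete, the following is a minimal sketch of how the parameter count of a Llama-style decoder can be tallied. The dimensions used here (vocabulary size, hidden size, layer count, and so on) are not stated in this article; they are assumptions taken from the publicly released TinyLlama configuration, and the function name `llama_style_param_count` is purely illustrative.

```python
def llama_style_param_count(vocab, hidden, intermediate, layers,
                            heads, kv_heads, tied_embeddings=False):
    """Count weights in a Llama-style decoder (no bias terms, RMSNorm)."""
    head_dim = hidden // heads
    kv_dim = head_dim * kv_heads              # grouped-query attention shrinks K and V
    attention = (hidden * hidden              # query projection
                 + 2 * hidden * kv_dim        # key and value projections
                 + hidden * hidden)           # output projection
    mlp = 3 * hidden * intermediate           # gate, up, and down projections
    norms = 2 * hidden                        # two RMSNorm weight vectors per layer
    per_layer = attention + mlp + norms
    embedding = vocab * hidden
    lm_head = 0 if tied_embeddings else vocab * hidden
    final_norm = hidden
    return embedding + layers * per_layer + final_norm + lm_head


# Assumed values, based on the released TinyLlama config (not given in this article).
total = llama_style_param_count(vocab=32000, hidden=2048, intermediate=5632,
                                layers=22, heads=32, kv_heads=4)
print(f"{total:,} parameters (about {total / 1e9:.1f}B)")
```

Under these assumed dimensions, the feed-forward blocks account for most of the weights, while the grouped key and value projections contribute comparatively little.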

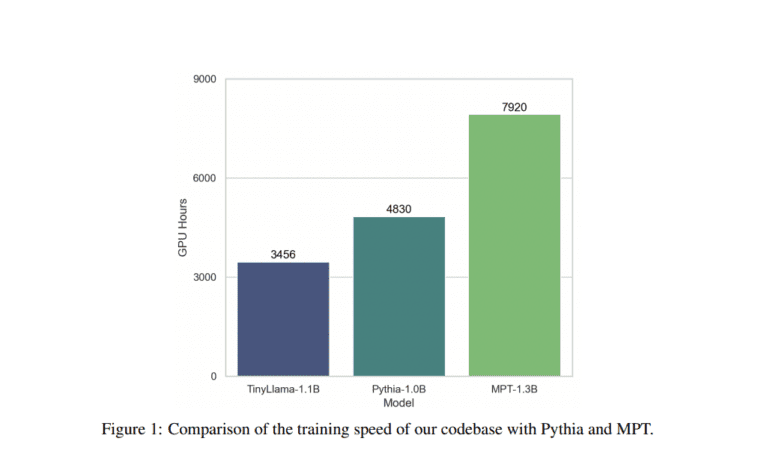

TinyLlama’s approach hinges on its architecture and training setup. It builds on the architecture of the Llama 2 model and incorporates recent efficiency techniques, including FlashAttention, which substantially improves computational efficiency. Despite its reduced scale compared to some of its predecessors, TinyLlama delivers strong performance across various downstream tasks. It challenges the prevailing notion that bigger models are always superior, demonstrating that models with fewer parameters can still be highly effective when trained on extensive and diverse datasets.
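The efficiency gain attributed to FlashAttention comes largely from computing exact attention block by block, so the full matrix of attention scores never has to be stored at once. The sketch below illustrates only that blockwise "online softmax" idea for a single query vector in plain Python; it is not the actual FlashAttention GPU kernel, and the function names and the toy inputs are hypothetical.

```python
import math


def naive_attention(q, keys, values):
    """Reference: softmax(q . K^T / sqrt(d)) V, materializing all scores."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]


def blockwise_attention(q, keys, values, block_size=2):
    """Same result, but keys/values are visited one block at a time."""
    d = len(q)
    running_max = float("-inf")   # largest score seen so far
    running_sum = 0.0             # softmax denominator, relative to running_max
    acc = [0.0] * len(values[0])  # unnormalized weighted sum of values
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale earlier partial results to the new reference maximum.
        scale = math.exp(running_max - new_max)
        running_sum *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * x for a, x in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


# Toy inputs, chosen only for illustration.
q = [0.5, -1.0, 0.25, 2.0]
keys = [[0.1, 0.2, 0.3, 0.4], [1.0, -1.0, 0.5, 0.0], [0.0, 0.3, -0.7, 1.2],
        [2.0, 0.1, 0.1, -0.5], [-0.4, 0.9, 0.6, 0.3]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, -1.0], [-1.0, 3.0]]

reference = naive_attention(q, keys, values)
blocked = blockwise_attention(q, keys, values, block_size=2)
print(max(abs(a - b) for a, b in zip(reference, blocked)))
```

The two functions agree up to floating-point rounding, which is the point: the blockwise version is an exact reformulation rather than an approximation, and the savings in a real kernel come from keeping each block in fast on-chip memory.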

TinyLlama’s results on commonsense reasoning and problem-solving tasks deserve special recognition. It surpasses other open-source models of comparable size across numerous benchmarks, underscoring the potential of smaller models to achieve strong performance when trained on substantial data. This achievement not only expands the horizons of research and application in natural language processing but also offers a practical option in situations where computational resources are scarce.

TinyLlama emerges as a notable innovation in the realm of natural language processing. It combines efficiency with effectiveness, addressing the urgent need for accessible, high-quality NLP tools. The model shows that, through careful design and optimization, it is feasible to create capable language models that do not demand extravagant computational resources. The success of TinyLlama paves the way for a more inclusive and diverse landscape of research in NLP, enabling a wider array of users to contribute to and benefit from advancements in this growing field.

Conclusion:

TinyLlama’s introduction to the market represents a significant advancement in the field of natural language processing. Its efficient design and impressive performance open doors to wider accessibility and innovation, enabling a broader range of users to contribute to and benefit from NLP advancements. This model is poised to drive the market towards more efficient and accessible language processing tools.