TL;DR:

- Researchers explore the integration of Transformers in Reinforcement Learning (RL).

- Memory and credit assignment are pivotal in RL, and Transformers enhance memory but face challenges with credit assignment.

- Quantifiable metrics were introduced to isolate and measure memory and credit assignment elements.

- Memory-based RL algorithms, including Transformers, were rigorously evaluated across various tasks.

- Transformers excel in long-term memory but struggle to connect past actions with future consequences.

Main AI News:

The realm of Reinforcement Learning (RL) continues to evolve, with researchers at Université de Montréal and Princeton University at the forefront of innovation. Their recent collaboration delves into the integration of Transformer architectures, renowned for their prowess in managing long-term dependencies within data. This development holds immense significance for RL, a field where algorithms must master the art of sequential decision-making, often amidst intricate and dynamic environments.

The central conundrum in RL revolves around two pivotal facets: the ability to comprehend and harness past observations, commonly referred to as memory, and the discernment of how past actions influence future outcomes, known as credit assignment. These components are paramount in shaping algorithms that can adapt and make informed choices across diverse scenarios, whether it’s navigating a labyrinthine maze or strategizing in complex games.

Originally celebrated for their success in natural language processing and computer vision, Transformers have now found their place in RL as a way to bolster memory capabilities. Yet the extent of their effectiveness, particularly for long-term credit assignment, remains a subject of scrutiny. The difficulty is that memory and credit assignment are closely intertwined in sequential decision-making, so an improvement in one is hard to tell apart from an improvement in the other. For instance, in a game-playing scenario, an algorithm must retain past moves (memory) and also work out how those moves affect later game states and rewards (credit assignment).

To disentangle the roles of memory and credit assignment within RL and assess the influence of Transformers, the researchers from Mila, Université de Montréal, and Princeton University introduced well-defined, quantifiable measures of memory length and credit assignment length. These measures allow each element to be isolated and measured within the learning process. By creating tailor-made tasks designed to probe memory and credit assignment independently, the study provides a clearer picture of how Transformers affect these two dimensions of RL.

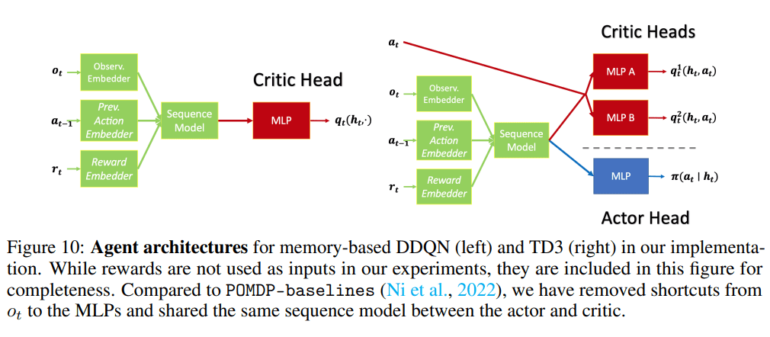

The methodology employed in this research involved a rigorous evaluation of memory-based RL algorithms, specifically those employing Long Short-Term Memory (LSTM) networks and Transformers, across a spectrum of tasks characterized by varying memory and credit assignment requisites. This systematic approach facilitated a direct and enlightening comparison of the capabilities of these two architectural paradigms across diverse scenarios. The tasks were meticulously tailored to accentuate memory and credit assignment capabilities, ranging from straightforward mazes to intricate environments replete with delayed rewards and actions.
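To make the idea of probing memory and credit assignment separately more concrete, here is a minimal sketch of a toy task. It is a hypothetical illustration, not one of the tasks used in the study: the `CueCorridor` class, the `LOOK` action, and both policies are assumptions introduced only for this example. In the passive variant the cue is shown at the start and only the final action is rewarded, so a longer corridor mainly stretches how far back the agent must remember. In the active variant the agent must choose `LOOK` at the first step to reveal the cue, so an early action also determines a reward that arrives much later.

```python
import random

LOOK = 2  # hypothetical extra action, meaningful only at step 0 of the active variant


class CueCorridor:
    """Toy task: a binary cue must be reproduced as the final action.

    Observations: 0 = blank, 1 or 2 = cue value plus one.
    Actions: 0 or 1 (the answer), or LOOK.
    """

    def __init__(self, length, active=False, seed=None):
        if length < 1:
            raise ValueError("length must be at least 1")
        self.length = length      # number of steps before the final decision
        self.active = active      # if True, the cue is revealed only after LOOK
        self.rng = random.Random(seed)

    def reset(self):
        self.t = 0
        self.cue = self.rng.choice([0, 1])
        # Passive variant: the cue is visible in the very first observation.
        return 0 if self.active else self.cue + 1

    def step(self, action):
        final = self.t == self.length
        # Only the final action is rewarded, and the reward arrives immediately.
        reward = 1.0 if final and action == self.cue else 0.0
        obs = 0
        # Active variant: looking at step 0 reveals the cue in the next observation.
        if self.active and self.t == 0 and action == LOOK:
            obs = self.cue + 1
        self.t += 1
        return obs, reward, final


def run_episode(env, policy):
    """Roll out one episode; the policy sees the full observation history."""
    history = [env.reset()]
    total, done = 0.0, False
    while not done:
        obs, reward, done = env.step(policy(history))
        history.append(obs)
        total += reward
    return total


def remembering_policy(history):
    """Look if the first observation is blank, then answer with the cue seen."""
    if len(history) == 1 and history[0] == 0:
        return LOOK
    seen = [o for o in history if o in (1, 2)]
    return seen[0] - 1 if seen else 0


def memoryless_policy(history):
    """Uses only the latest observation, so it cannot recall an earlier cue."""
    return history[-1] - 1 if history[-1] in (1, 2) else 0
```

With a policy that has access to the full history, both variants can be solved for any `length`. A memoryless policy can only guess at the final step, and in the active variant a policy that never chooses `LOOK` never sees the cue at all. Varying `length` and `active` independently is one simple way to change how much a task leans on memory versus delayed credit.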

While the integration of Transformers clearly improves long-term memory within the RL framework, enabling algorithms to use information from as far back as 1500 steps in the past, it does not yield commensurate improvements in long-term credit assignment. In simpler terms, Transformer-based RL methods excel at recalling distant past events but struggle to work out how past actions lead to future consequences.

Conclusion:

The integration of Transformers in Reinforcement Learning offers substantial enhancements in long-term memory but leaves long-term credit assignment largely unsolved. This research underscores the need for continued innovation in RL algorithms to bridge the gap between memory and credit assignment, which could open up new market opportunities and applications that depend on effective decision-making in dynamic and complex environments.