TL;DR:

- Large multimodal models like LLaVA, MiniGPT4, mPLUG-Owl, and Qwen-VL are making strides in processing diverse data types.

- Complex scenarios challenge these models due to varying picture resolutions and data quality demands.

- “Monkey,” an innovative approach, leverages pre-existing models to boost input resolution efficiently.

- It uses a sliding window technique, static visual encoder, and language decoder for enhanced image understanding.

- Monkey excels in precision image captioning and language differentiation, even with minor imperfections.

- It demonstrates proficiency in reading data from charts and dense textual material.

- Monkey’s capabilities have significant implications for the market.

Main AI News:

The realm of large multimodal models, adept at processing a diverse range of data types such as text and images, is experiencing a surge in popularity among academics and researchers. These models exhibit remarkable prowess in a multitude of multimodal tasks, from annotating images to answering complex visual inquiries. Pioneering models like LLaVA, MiniGPT4, mPLUG-Owl, and Qwen-VL stand as vivid testaments to the rapid advancements in this dynamic field. However, amidst the remarkable progress lies a formidable challenge—navigating the intricacies of complex scenarios characterized by varying picture resolutions and the ever-increasing demand for high-quality training data.

To address these challenges, substantial enhancements have been made to the image encoding process, coupled with the utilization of extensive datasets to elevate input resolution. Notably, LLaVA has pushed the envelope by incorporating instruction-tuning into multimodal contexts, seamlessly integrating multimodal instruction-following data. Nonetheless, the efficacy of these techniques is often hindered by the considerable resource allocation required to manage picture input sizes effectively, not to mention the substantial associated training costs. As datasets continue to swell in size, the need for more nuanced picture descriptions becomes imperative—a demand that must be met by the typically concise, one-sentence image captions found in datasets such as COYO and LAION.

In response to these pressing constraints, a collaborative effort by researchers from Huazhong University of Science and Technology and Kingsoft has yielded a resource-efficient breakthrough in the domain of Large Multimodal Models (LMM). Their innovation, aptly named “Monkey,” capitalizes on pre-existing LMMs, effectively circumventing the time-consuming pretraining phase thanks to the wealth of open-source contributions available.

At the core of Monkey’s ingenuity lies a straightforward yet highly efficient module that employs a sliding window approach to partition high-resolution images into manageable, localized segments. Each segment is individually processed through a static visual encoder, accompanied by multiple LoRA modifications and a trainable visual resampler. The language decoder then receives these encoded segments alongside the global image encoding, leading to vastly improved image comprehension. Notably, the researchers have harnessed a technique that amalgamates multi-level cues from a variety of sources, including BLIP2, PPOCR, GRIT, SAM, and ChatGPT OpenAI, to furnish rich and high-quality caption data.

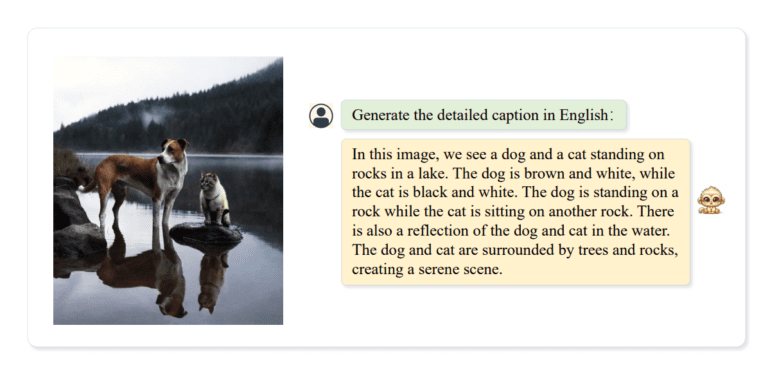

The results are nothing short of remarkable. Monkey’s image captioning capabilities exhibit precision in detailing every facet of an image, from an athlete’s attire to subtle background elements, all without errors or omissions. The model even astutely highlights details like a brown bag in the image caption, which might escape casual observation but significantly contributes to the model’s ability to draw meaningful inferences. This underscores the model’s remarkable attention to detail and its competence in providing coherent and accurate descriptions.

Furthermore, the Monkey’s versatility extends to distinguishing between various languages and the corresponding signals associated with them. This ability augments the model’s capacity to interpret and contextualize diverse linguistic elements within the visual content.

Monkey’s profound impact becomes even more evident when assessing its utility. It can effectively decipher the content of photographs, even when confronted with minor imperfections like missing characters in watermarks. Additionally, the model demonstrates exceptional prowess in reading textual data from charts, effortlessly identifying the correct response amidst dense textual material, all while remaining impervious to irrelevant distractions.

This phenomenon underscores the model’s remarkable proficiency in aligning a given text with its intended target, even when confronted with complex and obscured textual content. In essence, Monkey’s exceptional capabilities underscore its significance in fulfilling diverse objectives and its capacity to leverage global knowledge effectively.

Conclusion:

The emergence of “Monkey” as a groundbreaking solution to enhance input resolution and contextual association in large multimodal models signifies a significant stride in the AI market. With its ability to provide precise image captions, language differentiation, and efficient data processing, Monkey is poised to revolutionize various industries reliant on multimodal AI applications, including content generation, image analysis, and question-answering systems. Its resource-efficient approach and proficiency in handling complex scenarios position it as a valuable asset for businesses seeking advanced AI capabilities.