TL;DR:

- Diffusion models, a recent AI focus, have excelled in image generation, leading to novel applications.

- Text-to-image models like MidJourney create high-quality images from text prompts.

- Progress in text-to-image models drives advancements in image and content editing.

- Challenges persist in applying these advances to video editing due to consistency issues.

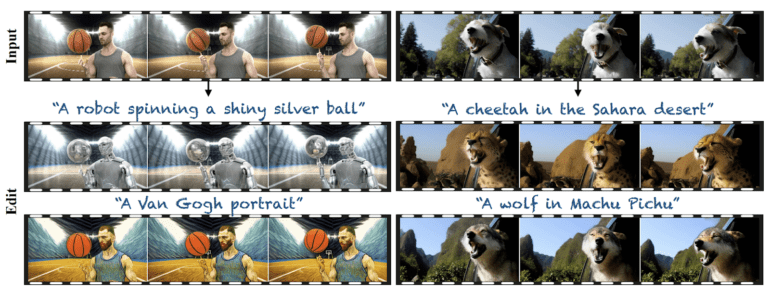

- TokenFlow emerges as an AI model for text-driven video enhancement.

- TokenFlow ensures consistent edits across video frames by leveraging inter-frame connections.

- It builds on the observation that natural videos exhibit redundant information.

- TokenFlow bridges the gap between text-driven editing and temporal consistency.

- It enhances videos by adhering to target edits while preserving spatial and motion aspects.

- TokenFlow’s integration with diffusion-based image editing methods amplifies its utility.

Main AI News:

The realm of AI has been dominated by diffusion models, a subject that has garnered significant attention over the past year. These models have proven their mettle, particularly in the domain of image generation, unveiling an entirely novel chapter in artificial intelligence.

As we navigate the era of text-to-image conversion, these models continue to evolve at an impressive pace. Among them, diffusion-based generative models like MidJourney have emerged as veritable powerhouses, demonstrating unparalleled prowess in crafting high-fidelity images from textual cues. These models leverage expansive image-text datasets, enabling them to fabricate a diverse array of lifelike visuals prompted by mere text.

This unceasing progress in text-to-image technologies has catalyzed extraordinary strides in image manipulation and content creation. In today’s landscape, users wield control over myriad aspects of both generated and authentic images, affording them the luxury to articulate their visions with precision, all while circumventing the protracted labor of manual illustration.

However, the narrative takes a nuanced turn when these breakthroughs attempt to permeate the domain of videos. Here, advancements are somewhat more deliberate. Despite the emergence of expansive text-to-video generative models, these innovations, while remarkable in their ability to generate video snippets from textual depictions, still grapple with constraints related to resolution, duration, and the intricate cadence of video dynamics they can encapsulate.

The quintessential challenge lies in the application of image diffusion models to the arena of video editing—ensuring that any edited content maintains unwavering consistency across all frames. Existing video editing methodologies rooted in image diffusion models have, indeed, achieved a level of global visual harmony through the augmentation of self-attention modules across multiple frames. However, these techniques often fall short of attaining the desired degree of temporal cohesion. This compels professionals and semi-professionals to resort to elaborate video editing pipelines, necessitating supplementary manual intervention.

Enter TokenFlow, an AI marvel harnessing the might of a pre-trained text-to-image model, poised to reshape the landscape of natural video editing through textual guidance.

TokenFlow’s primary objective is to fashion top-tier videos that faithfully align with the editing vision encapsulated within a given text prompt. While preserving the spatial arrangement and motion intrinsic to the source video, TokenFlow zeroes in on rectifying temporal incongruities—a pivotal undertaking. It systematically enforces the original inter-frame video connections during the editing process. This method taps into the realization that natural videos harbor redundancies spanning frames, echoing the akin qualities manifest in the internal representation within the diffusion model.

This revelation serves as TokenFlow’s cornerstone, allowing it to achieve consistent edits by instating conformity among the features across frames. This feat is accomplished by diffusing the edited features in tandem with the original video’s dynamics, thereby leveraging the generative essence of the state-of-the-art image diffusion model sans the demand for supplementary training or fine-tuning. Moreover, TokenFlow seamlessly collaborates with prevalent diffusion-based image editing techniques, amplifying its versatility and utility.

Conclusion:

The introduction of TokenFlow into the realm of AI-driven video editing signifies a monumental shift. This AI model’s adeptness at achieving consistent enhancement by aligning textual directives with temporal cohesion stands as a significant advancement. As TokenFlow propels the video editing landscape forward, its innovative approach augments the market’s potential, offering professionals an avenue to seamlessly blend visual creativity with fluid motion. This development underlines a pivotal moment where AI’s transformative influence on video manipulation reshapes industry dynamics and accelerates the evolution of content creation.