- Researchers at UNC-Chapel Hill introduce CTRL-Adapter, a framework for adapting existing ControlNets to new diffusion models.

- The framework seamlessly integrates ControlNets with new image and video diffusion models.

- It maintains spatial and temporal consistency in video sequences through spatial and temporal modules.

- CTRL-Adapter supports multiple control conditions, offering nuanced control over media generation.

- Reported tests show strong performance in controlled video generation at a much lower computational cost.

- The versatility of CTRL-Adapter extends to handling sparse frame conditions and integrating multiple control parameters efficiently.

Main AI News:

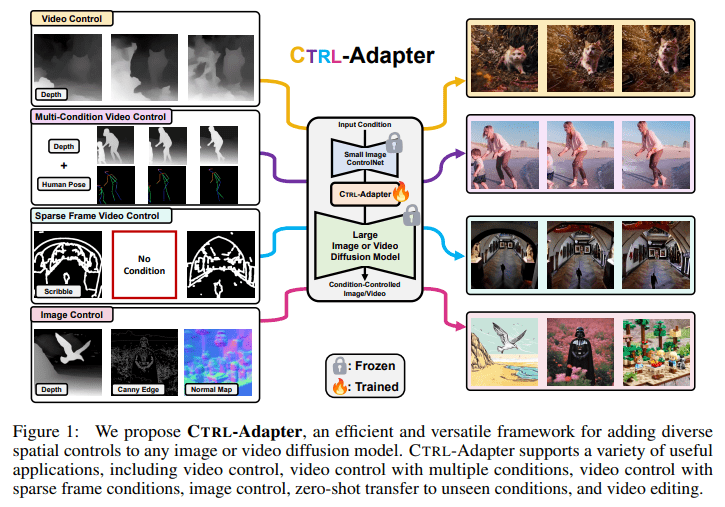

Precise control over image and video generation is a core requirement in digital media, and it has driven the development of technologies such as ControlNets. These models let users steer visual content with conditions such as depth maps, Canny edges, and human poses. Integrating them with new models is difficult, however: feature spaces differ from one model to the next, so adaptation often demands substantial computation and careful adjustment.

The main obstacle is adapting ControlNets, which were designed for static images, to video. Video generation requires both spatial and temporal consistency, which image ControlNets do not address efficiently. Applying an image ControlNet to each video frame independently tends to produce temporal inconsistencies that degrade the output.

UNC-Chapel Hill’s answer is CTRL-Adapter, a framework for connecting existing ControlNets to new image and video diffusion models. It leaves the parameters of both the ControlNets and the diffusion models unchanged, which simplifies adaptation and sharply reduces the need for retraining.

At the core of CTRL-Adapter is a combination of spatial and temporal modules that keep frames consistent across a video sequence. The framework also supports multiple control conditions: it combines the outputs of several ControlNets and adjusts how they are integrated to suit each condition. This gives users fine-grained control over the generated media without heavy computational overhead.
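To make the idea of combining several ControlNet outputs concrete, here is a minimal sketch that fuses per-condition features with a softmax-weighted average. It is an illustration under stated assumptions, not the authors' implementation: the function names, the flat-list feature representation, and the per-condition scores are all hypothetical, and the article does not specify how CTRL-Adapter computes its weights.

```python
import math
from typing import Sequence


def softmax(scores: Sequence[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_control_features(
    features: Sequence[Sequence[float]],
    scores: Sequence[float],
) -> list[float]:
    """Weighted average of per-condition feature vectors.

    features[i] stands in for the (flattened) output of the i-th ControlNet.
    scores[i] is a hypothetical score for that condition; in a trained
    system such scores could be learned, which is an assumption here.
    """
    if len(features) != len(scores):
        raise ValueError("need exactly one score per control condition")
    if len({len(f) for f in features}) != 1:
        raise ValueError("all feature vectors must have the same length")
    weights = softmax(scores)
    return [
        sum(w * f[j] for w, f in zip(weights, features))
        for j in range(len(features[0]))
    ]


# Toy stand-ins for features produced by a depth and a pose ControlNet.
depth_features = [1.0, 2.0, 3.0]
pose_features = [3.0, 2.0, 1.0]

# Equal scores give equal weights, so the result is the plain average.
fused = fuse_control_features([depth_features, pose_features], [0.0, 0.0])
print(fused)  # [2.0, 2.0, 2.0]
```

Raising one condition's score shifts the fused result toward that condition's features, which is one simple way to picture per-condition adjustment of the integration.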

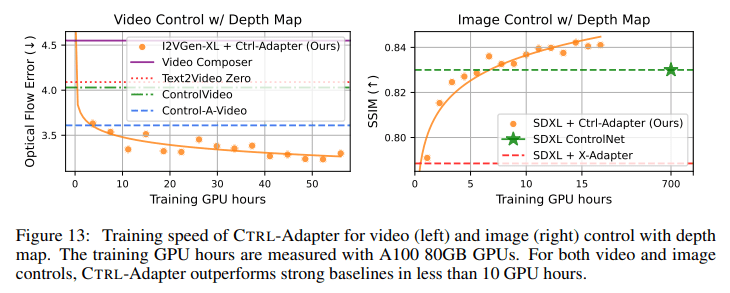

Testing supports these claims: CTRL-Adapter improves control over video generation while lowering computational cost. Integrated with video diffusion models such as Hotshot-XL, I2VGen-XL, and SVD, it reportedly outperforms existing methods for controlled video generation on benchmark datasets such as DAVIS 2017. It preserves output fidelity while cutting compute substantially, reaching in under 10 GPU hours results that traditional methods need hundreds of GPU hours to achieve.

CTRL-Adapter also handles sparse frame conditions, where control inputs are supplied for only some frames, and it integrates multiple control conditions. This gives users more control over visual attributes in images and videos and supports applications ranging from video editing to complex scene rendering at low resource cost. By combining conditions such as depth and human pose, CTRL-Adapter reportedly achieves an average FID (Fréchet Inception Distance, where lower is better) improvement of 20-30% compared to baseline models.
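For readers unfamiliar with the metric, FID compares Gaussian fits to the Inception-network features of real and generated images. The standard definition is given here for reference; the symbols are introduced for illustration and do not appear in the source article. $\mu_r, \Sigma_r$ are the feature mean and covariance for real images, and $\mu_g, \Sigma_g$ are the same statistics for generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

Because the score is a distance between the two distributions, an improvement means a lower value.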

Source: Marktechpost Media Inc.

Conclusion:

UNC-Chapel Hill’s CTRL-Adapter offers an efficient and versatile approach to control adaptation. By streamlining integration, maintaining consistency in generated media, and reducing computational overhead, it could improve productivity and lower costs for businesses and industries that rely on AI-driven media generation and manipulation.