- Researchers from the University of Auckland introduced ChatLogic, a new framework for improving multi-step reasoning in large language models (LLMs).

- Current LLMs struggle with complex deductive reasoning due to their reliance on next-token prediction, which limits their logical reasoning and contextual understanding.

- ChatLogic converts logic problems into symbolic representations, allowing LLMs to handle multi-step reasoning more effectively.

- The framework uses a method called ‘Mix-shot Chain of Thought’ (CoT), integrating prompt engineering techniques and symbolic memory for enhanced reasoning.

- On the PARARULE-Plus dataset, ChatLogic raised accuracy for both models tested: GPT-3.5 reached 0.5275, up from 0.344, and GPT-4 reached 0.73, up from 0.555 for the base model.

- ChatLogic also improved performance on the CONCEPTRULES datasets: GPT-3.5 reached 0.69 accuracy (base model: 0.57), while GPT-4 edged up to 0.96 (base model: 0.95).

Main AI News:

Researchers from the University of Auckland have introduced ChatLogic, a framework designed to improve the multi-step reasoning of large language models (LLMs). It targets a known limitation of current LLMs, which often struggle with deductive tasks that require coherent, logical reasoning across many steps.

Large language models have demonstrated impressive abilities in generating text and solving a range of problems. However, a persistent challenge lies in their capacity to perform extended, multi-step deductive reasoning. Traditional LLMs, trained primarily on next-token prediction, lack the necessary mechanisms to apply logical rules or maintain a deep contextual understanding over prolonged interactions. This shortfall becomes especially pronounced in tasks demanding intricate logical sequences and comprehensive contextual analysis.

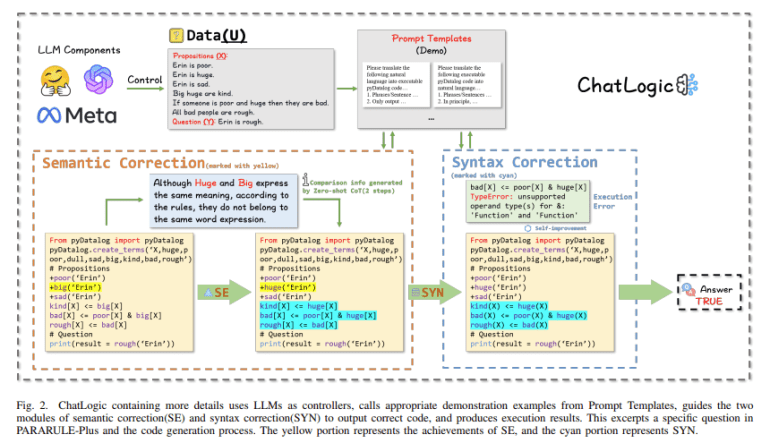

To address these challenges, the University of Auckland researchers developed ChatLogic, which incorporates a logical reasoning engine and converts logic problems into symbolic representations so that LLMs can handle complex deductive tasks more effectively. ChatLogic uses a method called ‘Mix-shot Chain of Thought’ (CoT), which blends several prompt engineering techniques to guide the LLM through the reasoning process. Natural language queries are translated into logical symbols via pyDatalog, and dedicated modules for semantic and syntax correction improve the stability and precision of the reasoning process.
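To make the idea of symbolic translation concrete, here is a minimal sketch in plain Python of the kind of deduction a Datalog-style engine performs once a problem has been written as facts and rules. It is not ChatLogic's code and does not use pyDatalog; the names `forward_chain` and `query`, the rule format, and the example facts are all assumptions made for illustration.

```python
from typing import FrozenSet, List, Set, Tuple

# Hypothetical encoding for this sketch (not ChatLogic's format):
# a fact is (predicate, entity); a rule is (body predicates, head predicate),
# where every predicate in a rule refers to the same entity.
Fact = Tuple[str, str]
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[Fact], rules: List[Rule]) -> Set[Fact]:
    """Apply the rules repeatedly until no new fact can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        entities = {entity for _, entity in derived}
        for body, head in rules:
            for entity in entities:
                if (head, entity) in derived:
                    continue  # already known, nothing to add
                if all((pred, entity) in derived for pred in body):
                    derived.add((head, entity))
                    changed = True
    return derived


def query(facts: Set[Fact], rules: List[Rule], goal: Fact) -> bool:
    """Closed-world query: a goal is true only if it can be derived."""
    return goal in forward_chain(facts, rules)


# "Bob is big. Alice is kind."
facts = {("big", "bob"), ("kind", "alice")}
# "Big things are strong. Strong things are heavy.
#  Things that are heavy and kind are gentle."
rules = [
    (frozenset({"big"}), "strong"),
    (frozenset({"strong"}), "heavy"),
    (frozenset({"heavy", "kind"}), "gentle"),
]

print(query(facts, rules, ("heavy", "bob")))   # needs two chained rule applications
print(query(facts, rules, ("gentle", "bob")))  # not derivable: Bob is not known to be kind
```

Deriving that Bob is heavy takes two chained rule applications, which is the kind of multi-step inference the article says LLMs handle poorly on their own. Once the problem is in symbolic form, that chaining is done by a deterministic engine rather than by next-token prediction. Treating anything underivable as false is a closed-world assumption chosen for this sketch.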

Experimental results support the approach. In tests on the PARARULE-Plus dataset, LLMs augmented with ChatLogic were markedly more accurate than their base versions. GPT-3.5 with ChatLogic recorded an accuracy of 0.5275, up from the base model’s 0.344, and GPT-4 with ChatLogic reached 0.73, compared to 0.555 for the base model. Those are absolute gains of 0.1835 and 0.175, respectively. The improvements suggest that ChatLogic mitigates information loss and eases the long-sequence limitations that hamper multi-step reasoning.

Results on the CONCEPTRULES datasets point the same way, though less uniformly. In the simplified version of CONCEPTRULES V1, GPT-3.5 with ChatLogic achieved an accuracy of 0.69, compared to the base model’s 0.57. GPT-4 with ChatLogic reached 0.96, only slightly above the base model’s 0.95, a baseline that leaves little room for improvement. Taken together, the results indicate that a logical reasoning engine can raise LLM performance on deductive tasks, most visibly where the base model has the most ground to make up.
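The reported figures are easier to compare as absolute and relative gains. The short script below only recomputes them from the accuracies quoted in this article; the helper name `gains` and the table layout are illustrative choices, not something taken from the researchers.

```python
from typing import Dict, Tuple

# Accuracies as reported in this article: (base model, with ChatLogic).
REPORTED: Dict[Tuple[str, str], Tuple[float, float]] = {
    ("PARARULE-Plus", "GPT-3.5"): (0.344, 0.5275),
    ("PARARULE-Plus", "GPT-4"): (0.555, 0.73),
    ("CONCEPTRULES V1 (simplified)", "GPT-3.5"): (0.57, 0.69),
    ("CONCEPTRULES V1 (simplified)", "GPT-4"): (0.95, 0.96),
}


def gains(base: float, augmented: float) -> Tuple[float, float]:
    """Return (absolute gain, relative gain in percent) over the base model."""
    absolute = augmented - base
    relative = 100.0 * absolute / base
    return absolute, relative


for (dataset, model), (base, augmented) in REPORTED.items():
    absolute, relative = gains(base, augmented)
    print(f"{dataset:30s} {model:8s} +{absolute:.4f} ({relative:+.1f}%)")
```

Measured this way, the largest relative gain is GPT-3.5 on PARARULE-Plus (about 53%) and the smallest is GPT-4 on CONCEPTRULES V1 (about 1%).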

Conclusion:

ChatLogic addresses a key limitation of large language models: multi-step reasoning. By transforming complex logic problems into symbolic representations, it enables more accurate and stable performance on deductive tasks. The results suggest that future LLM development may increasingly integrate dedicated reasoning frameworks, which could broaden the models’ applications across diverse fields. For the market, this may lead to improved AI-driven solutions in areas requiring sophisticated logical analysis, such as data analysis, decision-making systems, and automated problem-solving.