- University of Minnesota Twin Cities engineers unveil a magnetic tunnel junction (MTJ)-based computational random-access memory (CRAM) device.

- The CRAM device could reduce energy consumption for AI computing by a factor of at least 1,000.

- The research is published in npj Unconventional Computing, and the team holds multiple patents on the underlying technology.

- Traditional AI systems face high energy costs due to data transfer between logic and memory.

- CRAM technology allows data processing directly within memory, eliminating the need for energy-intensive data transfers.

- The International Energy Agency (IEA) projects that electricity consumption from data centers, AI, and cryptocurrency will rise from 460 terawatt-hours (TWh) in 2022 to 1,000 TWh by 2026.

- CRAM-based accelerators could improve energy efficiency by approximately 1,000 times, with potential savings of up to 2,500 times compared to traditional methods.

- CRAM breaks away from traditional von Neumann architecture, offering a more efficient solution for AI applications.

- Spintronic devices used in CRAM are faster, more resilient, and more energy-efficient than conventional transistors.

- The team plans to collaborate with semiconductor industry leaders for large-scale demonstrations.

Main AI News:

Engineers at the University of Minnesota Twin Cities have announced a pioneering development in AI technology with the introduction of a magnetic tunnel junction (MTJ)-based computational random-access memory (CRAM) device. This innovative hardware could revolutionize AI computing by reducing energy consumption by a factor of at least 1,000. The detailed findings are published in the journal npj Unconventional Computing in a paper titled “Experimental demonstration of magnetic tunnel junction-based computational random-access memory.” The research team holds multiple patents for the technologies underlying this breakthrough.

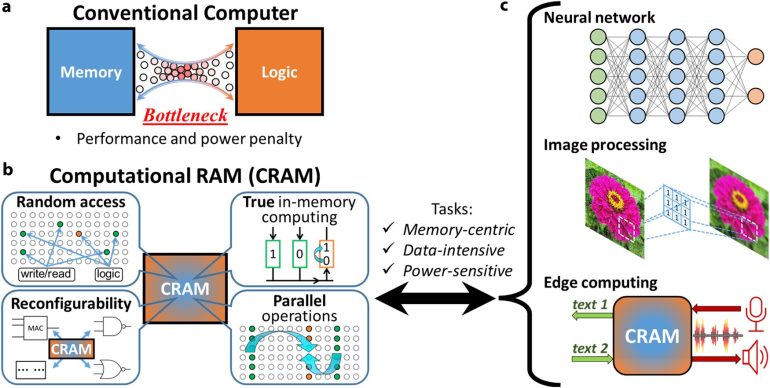

As demand for AI applications surges, energy efficiency has become a critical concern. Traditional AI systems consume substantial energy during data transfers between logic and memory components. This energy inefficiency has prompted researchers to seek alternative approaches. The University of Minnesota team has demonstrated a new model, termed CRAM, in which data is processed directly within the memory and never has to leave it. This approach avoids the high energy costs associated with moving data between logic and memory, presenting a significant step forward in AI technology.

Yang Lv, a postdoctoral researcher in the Department of Electrical and Computer Engineering at the University of Minnesota and the paper’s lead author, emphasized the novelty of this approach. “This work represents the first experimental demonstration of CRAM technology, where data can be processed entirely within the memory array, eliminating the need to transfer it away from the storage grid,” Lv noted.

The International Energy Agency (IEA) has projected that electricity consumption from data centers, AI, and cryptocurrency will rise sharply, from 460 terawatt-hours (TWh) in 2022 to 1,000 TWh by 2026, roughly equivalent to the total electricity usage of Japan. The CRAM-based machine learning inference accelerator is estimated to improve energy efficiency by a factor of approximately 1,000. In other examples, the technology showed energy savings of 2,500 and 1,700 times compared to traditional methods.
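To put the figures above in perspective, the arithmetic can be sketched in a few lines of Python. The 460 TWh, 1,000 TWh, and the 1,000x, 1,700x, and 2,500x factors come from the article; the function names and the 1,000-unit workload are illustrative assumptions. The improvement factors apply to the accelerator's workloads, not to global consumption.

```python
# Figures quoted in the article.
BASELINE_TWH_2022 = 460.0
PROJECTED_TWH_2026 = 1000.0
YEARS = 2026 - 2022
IMPROVEMENT_FACTORS = (1000, 1700, 2500)


def growth_ratio(start: float, end: float) -> float:
    """Overall growth multiple between two consumption figures."""
    return end / start


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by the start and end figures."""
    return (end / start) ** (1.0 / years) - 1.0


def energy_after_improvement(baseline: float, factor: float) -> float:
    """Energy used by a workload once efficiency improves by `factor`."""
    return baseline / factor


ratio = growth_ratio(BASELINE_TWH_2022, PROJECTED_TWH_2026)
annual = implied_annual_growth(BASELINE_TWH_2022, PROJECTED_TWH_2026, YEARS)
print(f"Growth 2022 -> 2026: {ratio:.2f}x ({annual:.1%} per year)")

# Hypothetical workload of 1,000 energy units (an assumption, not a measurement).
HYPOTHETICAL_WORKLOAD = 1000.0
for factor in IMPROVEMENT_FACTORS:
    remaining = energy_after_improvement(HYPOTHETICAL_WORKLOAD, factor)
    print(f"{factor}x improvement: {remaining:.2f} units remain")
```

The projection amounts to slightly more than a doubling over four years, which is why even a large per-device efficiency gain matters at this scale.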

This research is the culmination of over two decades of development. Jian-Ping Wang, the senior author and Distinguished McKnight Professor, recalled that the initial idea of using memory cells directly for computing was once deemed unrealistic. “The concept was considered radical 20 years ago, but with continuous efforts from our team and advancements across various disciplines—including physics, materials science, engineering, and computer science—we have proven that this technology is viable and ready for integration into practical applications,” Wang said.

The CRAM architecture represents a fundamental shift from traditional von Neumann architecture, which separates computation and memory functions. By integrating computation directly within the memory array, CRAM addresses the inefficiencies inherent in conventional systems and provides a more energy-efficient solution for AI applications. Ulya Karpuzcu, an Associate Professor and co-author of the paper, highlighted CRAM’s adaptability: “As an extremely energy-efficient digital in-memory computing substrate, CRAM can be configured to match the performance needs of diverse AI algorithms. It is significantly more energy-efficient than traditional AI system components.”
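The contrast between the two architectures can be illustrated with a toy energy model. This is a minimal sketch, not the paper's benchmarking method: the `EnergyCosts` class, the function names, and the per-operation costs are all hypothetical and chosen only to show how removing data movement changes the total.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnergyCosts:
    """Per-operation energy in arbitrary units (hypothetical values)."""
    compute_per_op: float   # energy to perform one logic operation
    transfer_per_op: float  # energy to move operands between memory and logic


def von_neumann_energy(n_ops: int, costs: EnergyCosts) -> float:
    """Separate logic and memory: every operation pays for data movement."""
    return n_ops * (costs.compute_per_op + costs.transfer_per_op)


def in_memory_energy(n_ops: int, costs: EnergyCosts) -> float:
    """Computation inside the memory array: no transfer term."""
    return n_ops * costs.compute_per_op


def efficiency_gain(costs: EnergyCosts) -> float:
    """Ratio of von Neumann energy to in-memory energy per operation."""
    return von_neumann_energy(1, costs) / in_memory_energy(1, costs)


# Assumed costs: data movement is 9x as expensive as the operation itself.
costs = EnergyCosts(compute_per_op=1.0, transfer_per_op=9.0)
print(von_neumann_energy(100, costs))  # 1000.0
print(in_memory_energy(100, costs))    # 100.0
print(efficiency_gain(costs))          # 10.0
```

In this simplified model the gain equals one plus the ratio of transfer cost to compute cost, so the benefit grows as data movement dominates the energy budget. The 10x result follows from the assumed numbers and is not a reproduction of the reported 1,000x figure.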

The CRAM technology leverages spintronic devices, which use the spin of electrons rather than electrical charge to store data. This approach not only enhances energy efficiency but also provides greater speed and resilience under harsh conditions compared to traditional transistor-based chips. The research team now plans to work with semiconductor industry leaders, including partners in Minnesota, to conduct large-scale demonstrations and advance the development of this promising technology.

Overall, this breakthrough in memory technology represents a major leap forward in making AI systems more energy-efficient, potentially transforming the field and addressing the growing demands of AI computing.

Conclusion:

The development of the CRAM technology by the University of Minnesota represents a significant advancement in AI computing. By enabling computation directly within memory and achieving roughly 1,000 times greater energy efficiency, this innovation addresses critical concerns related to the growing energy demands of AI applications. With AI-related energy consumption projected to roughly double by 2026, the CRAM device offers a compelling way to mitigate this surge, potentially reshaping the industry’s approach to energy efficiency. This breakthrough could lead to more sustainable AI systems and drive further advancements in memory and computing technologies. The planned collaboration with semiconductor leaders on large-scale demonstrations will be pivotal in integrating this technology into practical applications, potentially setting new standards for energy efficiency in AI and related fields.