TL;DR:

- Context is crucial for effective communication and moral decision-making.

- CLARIFYDELPHI is an interactive system that extracts context from social and moral situations.

- It poses thought-provoking questions to elicit missing context and enhance moral judgments.

- A reinforcement learning framework with a defeasibility reward optimizes the generation of context-enriching questions.

- CLARIFYDELPHI outperforms other methods in generating relevant and informative clarification questions.

- The team has released valuable datasets and accessible trained models to foster research in the field.

Main AI News:

In the realm of effective communication, context reigns supreme. It molds the very essence of words, ensuring they are heard and comprehended accurately by the recipient. Context is not merely an afterthought; it guides both speaker and listener, shedding light on the importance of certain aspects, facilitating inferences, and, most crucially, imparting meaning to the message at hand. When it comes to making moral decisions and exercising common sense and moral reasoning in social situations, context assumes an equally pivotal role.

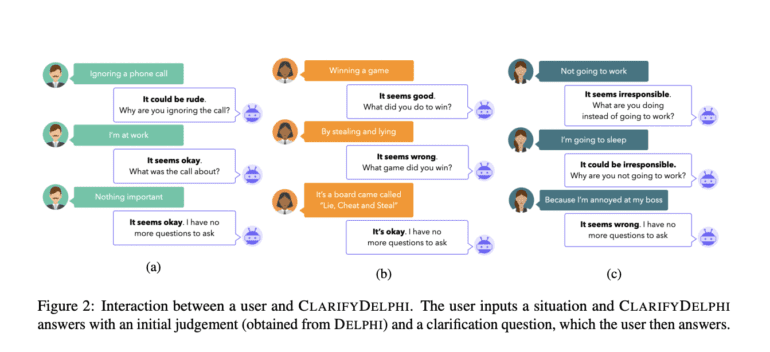

Enter Delphi, the predecessor model, which models the moral judgments people make in a variety of everyday scenarios. Delphi has a notable limitation, however: it does not account for the context surrounding a situation, which hinders its ability to provide nuanced moral judgments. To overcome this obstacle, a team of researchers has introduced CLARIFYDELPHI, an interactive system that uses reinforcement learning to elicit context for ambiguous situations, enriching moral judgments in the process.

The crux of CLARIFYDELPHI lies in its ability to elicit crucial context by asking clarification questions. A query such as “Why did you lie to your friend?” can surface the missing pieces of the puzzle. The researchers argue that the most informative questions are those whose possible answers lead to diverse moral judgments.

In other words, when different answers to a question lead to divergent assessments of morality, the question has uncovered context that matters for the moral judgment. To pursue this goal, the team devised a reinforcement learning framework with a defeasibility reward. The reward measures the disparity between the moral judgments triggered by hypothetical answers to a question, and Proximal Policy Optimization (PPO) maximizes it to optimize the generation of context-enriching questions.
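To make the idea of a defeasibility reward concrete, here is a minimal sketch. It assumes that each hypothetical answer yields a moral judgment expressed as a probability distribution over three classes (bad, neutral, good), and that the disparity is measured with Jensen-Shannon divergence. The article does not specify the divergence measure, so that choice, the function names, and the toy numbers below are illustrative assumptions rather than the authors' implementation.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Kullback-Leibler divergence KL(p || q), skipping zero-probability terms of p."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Jensen-Shannon divergence: symmetric, bounded by ln(2) with natural logs."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def defeasibility_reward(judgment_a: Sequence[float],
                         judgment_b: Sequence[float]) -> float:
    """Hypothetical reward: how far apart are the moral judgments that two
    hypothetical answers to the same question would produce?"""
    return js_divergence(judgment_a, judgment_b)


# Toy, made-up judgment distributions over (bad, neutral, good).
# A question whose answers flip the judgment earns a higher reward
# than one whose answers leave the judgment nearly unchanged.
flipping = defeasibility_reward([0.8, 0.1, 0.1], [0.1, 0.1, 0.8])
stable = defeasibility_reward([0.8, 0.1, 0.1], [0.7, 0.2, 0.1])
print(f"flipping question: {flipping:.3f}, stable question: {stable:.3f}")
```

In a PPO setup, a scalar like this would be the reward assigned to each generated question, so the policy is pushed toward questions whose answers can change the verdict.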

In empirical evaluation, CLARIFYDELPHI outperformed alternative methods at generating clarification questions. Its questions are relevant, informative, and conducive to defeasible reasoning, which supports the effectiveness of the methodology for eliciting crucial context. Moreover, the authors have quantified the amount of supervised clarification-question training data necessary for an optimal initial policy and have shown that questions play a pivotal role in generating defeasible updates.

The team's contributions can be summarized as follows:

- The team has introduced a reinforcement learning-based approach that treats defeasibility as a novel form of relevance when generating clarification questions for social and moral situations.

- They have released δ-CLARIFY, a dataset of 33k crowdsourced clarification questions.

- They have also released δ-CLARIFYsilver, a set of generated questions conditioned on a defeasible inference dataset, to support further work in the field.

- The trained models and their source code are publicly available.

The adaptability of human moral reasoning lies in its capacity to discern when moral rules ought to apply and to acknowledge legitimate exceptions in light of contextual nuances. By uncovering hidden context, CLARIFYDELPHI enables more precise moral judgments. Compared with alternative approaches, CLARIFYDELPHI's questions elicit answers that can either strengthen or weaken the initial moral judgment, offering a more complete picture of the situation.

CLARIFYDELPHI is a promising model for generating insightful and pertinent questions that shed light on divergent moral judgments. It has the potential to improve how context is handled in moral decision-making and to support more nuanced ethical considerations in an increasingly interconnected world.

Conclusion:

The advent of CLARIFYDELPHI marks a significant milestone in research on moral decision-making. By combining reinforcement learning with targeted questioning, the system brings context to the forefront, enabling more accurate and nuanced moral judgments. Its strong performance in generating relevant questions and eliciting crucial context positions it as a potential game-changer in the market.

Its potential impact extends beyond research and academia, offering businesses and organizations the opportunity to foster ethical decision-making frameworks that consider the diverse perspectives and contextual nuances present in social and moral situations. Embracing CLARIFYDELPHI can lead to more informed and comprehensive approaches to addressing moral complexities, ultimately enhancing trust, reputation, and stakeholder relationships in the market.