TL;DR:

- Capsule networks are a novel neural network architecture designed to overcome the limitations of traditional convolutional neural networks (CNNs).

- Capsules, groups of neurons, represent specific features or parts of an object, providing robustness to variations in input data.

- Dynamic routing within capsule networks enables the learning of complex hierarchical relationships between features.

- Capsule networks have outperformed comparable CNNs on some tasks, such as digit recognition and small object classification.

- They have shown improved resilience against some adversarial attacks, and early results in natural language processing and video analysis are promising.

- Challenges include computational complexity and the need for further research for optimal design and integration with other techniques.

Main AI News:

Machine learning research produces a steady stream of methods and algorithms aimed at improving system performance. Among them, capsule networks have attracted significant attention in recent years. This article looks at how capsule networks work, where they improve on existing models, and which challenges remain.

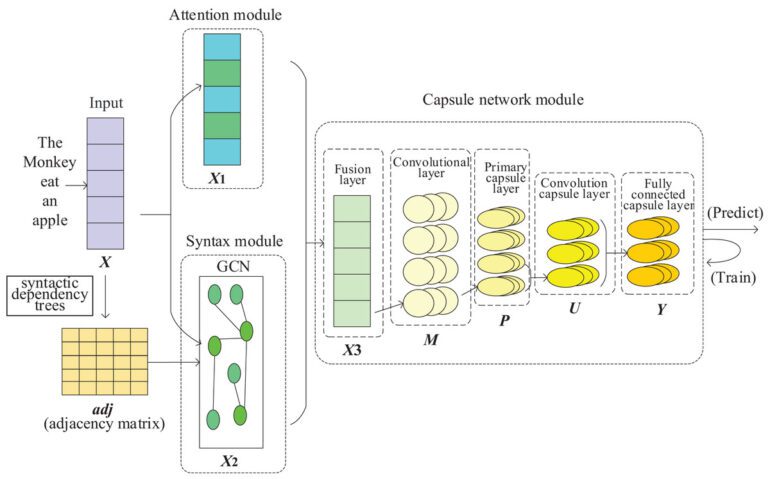

Capsule networks in their current form were introduced in 2017 by Sara Sabour, Nicholas Frosst, and Geoffrey Hinton as a neural network architecture designed to address limitations of traditional convolutional neural networks (CNNs). Although CNNs have long been the default choice for image recognition and classification, they have known drawbacks: pooling layers discard much of the information about where features sit relative to one another, and the networks are vulnerable to adversarial attacks. Capsule networks aim to address these problems with a mechanism that explicitly encodes and processes hierarchical relationships within the input data.

At the core of capsule networks lies the concept of capsules: small groups of neurons that work together to represent a specific feature or component of an object. Because a capsule outputs a vector rather than a single activation, it can record not only whether a feature is present but also properties such as its position, orientation, or scale, which makes the representation more resilient to variations in the input. This resilience is supported by a process known as dynamic routing, which selectively directs information between capsules at different levels of the network hierarchy so that higher-level capsules build a more accurate representation of the input.
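To make the idea of a capsule concrete, here is a minimal NumPy sketch. It assumes the formulation from the 2017 dynamic routing paper by Sabour, Frosst, and Hinton, in which the length of a capsule's output vector stands for the probability that its feature is present and the direction encodes the feature's properties. The function name `squash`, the `eps` constant, and the example vectors are illustrative choices, not details taken from this article.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    """Shrink vector(s) so their length lies in [0, 1) and direction is kept."""
    sq_norm = np.sum(np.square(s), axis=axis, keepdims=True)
    scale = sq_norm / (1.0 + sq_norm)          # close to 0 for short inputs, close to 1 for long ones
    return scale * s / np.sqrt(sq_norm + eps)  # eps avoids division by zero

# Two raw capsule outputs pointing the same way, with very different lengths.
weak = squash(np.array([0.1, 0.0]))
strong = squash(np.array([10.0, 0.0]))
print(np.linalg.norm(weak), np.linalg.norm(strong))
```

The weak input is pushed toward length zero ("feature probably absent") and the strong one toward length one ("feature probably present"), while both keep their original direction.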

The dynamic routing mechanism is credited with several advantages over traditional CNNs. First, it lets the network model hierarchical, part-to-whole relationships between features, which can improve performance on tasks that depend on how the parts of an object fit together. Second, it is reported to make the network more robust to adversarial attacks, because small perturbations in the input are less likely to produce agreement between capsules and therefore less likely to flip a prediction. Finally, routing is intended to make learning more focused: the network can concentrate on the features most relevant to a given task instead of attempting to model all possible feature combinations.
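The routing step can be sketched in a few lines of NumPy. This is a simplified illustration of the routing-by-agreement procedure from the 2017 paper by Sabour, Frosst, and Hinton, not a full capsule layer: it assumes the prediction vectors `u_hat` (each lower capsule's guess at each higher capsule's output) are already computed, and the names `route`, `u_hat`, and `num_iters`, as well as the random example input, are hypothetical.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    """Shrink vector(s) so their length lies in [0, 1) and direction is kept."""
    sq_norm = np.sum(np.square(s), axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)

def softmax(x, axis):
    """Numerically stable softmax along one axis."""
    e = np.exp(x - np.max(x, axis=axis, keepdims=True))
    return e / np.sum(e, axis=axis, keepdims=True)

def route(u_hat, num_iters=3):
    """Routing by agreement.

    u_hat has shape (num_in, num_out, dim_out): the prediction each lower
    capsule makes for each higher capsule.
    """
    num_in, num_out, _ = u_hat.shape
    b = np.zeros((num_in, num_out))                # routing logits, start uniform
    for _ in range(num_iters):
        c = softmax(b, axis=1)                     # each lower capsule splits its vote
        s = np.sum(c[:, :, None] * u_hat, axis=0)  # weighted sum per higher capsule
        v = squash(s)                              # higher capsule outputs
        b = b + np.sum(u_hat * v[None, :, :], axis=-1)  # reward agreeing predictions
    return v, c

# Hypothetical sizes: 6 lower capsules, 3 higher capsules, 4-dimensional outputs.
rng = np.random.default_rng(0)
u_hat = rng.normal(size=(6, 3, 4))
v, c = route(u_hat)
print(v.shape, c.shape)
```

Each iteration increases the coupling between a lower capsule and the higher capsules whose output agrees with its prediction, which is how information ends up being directed selectively up the hierarchy.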

Several studies have evaluated capsule networks on a range of machine learning tasks, with promising results. For instance, capsule networks have surpassed comparable CNNs on tasks such as digit recognition and small object classification, with higher accuracy and reduced susceptibility to adversarial attacks. Researchers have also begun applying capsule networks to more complex problems, such as natural language processing and video analysis, with encouraging early results.

Despite these results, several challenges must be addressed before capsule networks see mainstream adoption among machine learning practitioners. Foremost is the computational cost of dynamic routing: because routing is iterative and keeps a prediction for every pair of lower-level and higher-level capsules, it can lead to longer training times and higher memory requirements than traditional CNNs. Further research is also needed to determine the best designs and optimizations for different tasks and data types, and to explore how capsule networks can be integrated with other machine learning techniques.
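A rough back-of-the-envelope count shows where the routing cost comes from. The sketch below is an estimate under stated assumptions, not a benchmark: it counts only the weighted sum and the agreement update in each routing iteration, and it ignores the softmax, the squashing step, and the cost of producing the predictions in the first place. The function name `routing_cost` is hypothetical, and the example sizes (1152 lower capsules, 10 higher capsules, 16-dimensional outputs, 3 iterations) are those reported for the MNIST model in the 2017 paper by Sabour, Frosst, and Hinton.

```python
def routing_cost(num_in, num_out, dim_out, num_iters=3):
    """Rough per-example cost of routing between two capsule layers."""
    # One prediction vector is stored per (lower capsule, higher capsule) pair.
    prediction_floats = num_in * num_out * dim_out
    # Each iteration runs a weighted sum and an agreement dot product,
    # and each of them touches every stored prediction value once.
    multiply_adds = 2 * prediction_floats * num_iters
    return prediction_floats, multiply_adds

floats, macs = routing_cost(num_in=1152, num_out=10, dim_out=16)
print(floats, macs)
```

Both numbers grow with the product of the two layer sizes, so adding capsules to either layer raises memory and compute together.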

Conclusion:

Capsule networks present a notable opportunity for the machine learning market. Their ability to address limitations of traditional CNNs and to model hierarchical relationships could give businesses that adopt them a competitive advantage. Where they improve performance in image recognition, object classification, and other tasks, capsule networks offer more reliable and accurate solutions, and their improved resilience against adversarial attacks can increase confidence in the security of machine learning systems.

As businesses look to AI to improve their competitive edge, capsule networks could enable advances in a variety of domains. Companies that invest in capsule network research, optimize their designs, and integrate them with existing machine learning techniques will be well placed to lead the market as the technology matures.