TL;DR:

- NYU and Google AI researchers explore machine learning’s frontiers in advanced deductive reasoning.

- Deductive reasoning complexity in tasks like medical diagnosis and theorem proving necessitates a broad range of deduction rules.

- Large Language Models (LLMs) demonstrate deductive reasoning skills through in-context learning (ICL) and chain-of-thought (CoT) prompting.

- The study examines LLMs’ capacity to generalize deductive abilities beyond initial demonstrations.

- Proofs are categorized based on premises, length, and deduction rules.

- LLMs are tested on depth- and width-generalization and compositional generalization.

- In-context learning benefits from basic examples with diverse deduction rules and distractors.

- CoT prompts induce out-of-distribution reasoning, contrary to prior beliefs about LLMs.

- Pretraining alone doesn’t equip LLMs for certain deduction rules; explicit examples are necessary.

- Model size’s impact on performance is weak; tailored instruction and extended pretraining improve smaller models.

- Future research may explore the efficacy of simpler examples in addressing complex test cases.

Main AI News:

In the realm of deductive reasoning, the intricacy of proofs knows no bounds, with a myriad of deduction rules and subproofs paving the way for limitless complexity. Whether it be the domain of medical diagnosis or theorem proving, the daunting expanse of proof space makes it impractical to amass data that encompasses all possible scenarios. Hence, the quest for a comprehensive reasoning model begins with the foundation of basic proofs, poised to extend its prowess to tackle even the most convoluted challenges.

A recent collaborative effort between researchers from New York University and Google AI has shed light on a promising avenue for advancing deductive reasoning. Leveraging in-context learning (ICL) and chain-of-thought (CoT) prompting, the team has demonstrated that Large Language Models (LLMs) possess the ability to engage in deductive reasoning. While previous research placed primary focus on a handful of deduction rules, such as modus ponens, this new investigation takes a holistic approach.

Crucially, the assessment conducted by these researchers is rooted in in-demonstration scenarios, where test cases are drawn from the same distribution as the in-context demonstrations. This alignment between training and testing conditions underscores the model’s capacity to generalize its deductive abilities.

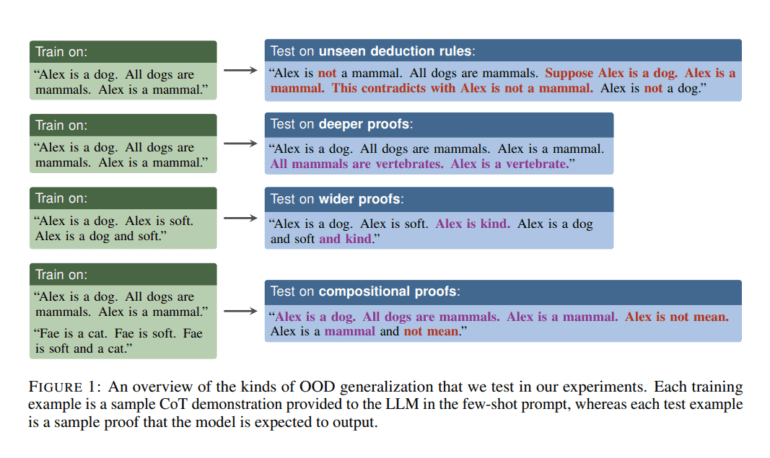

The study’s central inquiry revolves around the LLMs’ aptitude to extend their deductive reasoning capabilities beyond the confines of their initial demonstrations. To probe this, the academics categorize proofs along three dimensions:

- The number of premises employed at each stage of the demonstration.

- The length of the sequential chain of steps forming the proof.

- The array of deduction rules applied.

The total size of a proof, they assert, is an amalgamation of these three dimensions. Building on prior research, the team undertakes two pivotal explorations to assess the general deductive reasoning prowess of LLMs:

- Depth- and width-generalization tests the model’s ability to reason through lengthier proofs than those found in its in-context examples.

- Compositional generalization challenges the LLMs to harness a multitude of deduction rules within a single proof.

Interestingly, the research posits that in-context learning thrives when introduced with rudimentary examples showcasing a diverse range of deduction rules. To prevent the model from becoming overly specialized, it is imperative that these in-context examples incorporate unfamiliar deduction principles, such as proof by cases and proof by contradiction, while being interspersed with distractors.

Furthermore, the findings suggest that CoT prompts have the potential to induce out-of-distribution (OOD) reasoning in LLMs, leading to successful generalization to compositional proofs. This revelation defies prior literature, which often questioned the compositional generalizability of LLMs. Importantly, in-context learning diverges from traditional supervised learning, particularly gradient descent on in-context samples. Notably, in instances where in-context examples incorporate specific deduction rules, heightened generalization to compositional proofs is observed.

However, pretraining alone appears insufficient to equip the model with the ability to formulate hypothetical subproofs. For certain deduction rules, such as proof by cases and contradiction, LLMs require explicit examples to generalize effectively. Surprisingly, the study finds that the relationship between model size and performance is tenuous. With tailored instruction and extended pretraining, even smaller models, though not the most compact, can rival their larger counterparts.

To delve deeper into the intricacies of ICL and CoT triggering, the researchers spotlight a critical area for future exploration. They unveil the intriguing revelation that the most effective in-context examples often stem from a distribution distinct from the test example, even when considering a specific test case. This phenomenon remains unaccounted for by Bayesian inference and gradient descent, prompting further inquiry into the efficacy of simpler examples in addressing relatively complex test cases. As the pursuit of understanding extrapolation from specific instances continues, additional research is undoubtedly warranted.

Conclusion:

The collaborative research by NYU and Google AI showcases the promising potential of Large Language Models in advancing deductive reasoning. This has significant implications for industries relying on complex reasoning tasks, such as healthcare and mathematics, as LLMs can generalize their abilities to handle more intricate problems. This innovation opens the door to more efficient problem-solving and decision-making processes, with smaller models becoming viable competitors in the market, provided they receive the right training and instruction. Businesses should monitor these developments closely to leverage the capabilities of LLMs in their operations.