TL;DR:

- LLMs, known for their language capabilities, have been fine-tuned for domain-specific tasks.

- Combining a general anchor model with a domain-specific augmenting model can introduce novel capabilities.

- Traditionally, this requires further pre-training or fine-tuning of the anchor model, which can be impractical due to computational costs.

- Keeping the models distinct offers an alternative that harnesses their established capabilities without risking catastrophic forgetting.

- Researchers at Google Research and Google DeepMind introduce the Composition to Augment Language Models (CALM) framework.

- CALM adds a small set of trainable parameters over the intermediate layer representations of both models, leaving the models' own weights unchanged.

- CALM learns an effective fusion of the models, improving performance on combined tasks while retaining each model's distinct capabilities.

- CALM finds practical applications in language inclusivity and code generation.

- The composed model excels at translation and arithmetic reasoning in low-resource languages.

- In code generation, it outperforms the individual base models on code explanation and completion tasks.

Main AI News:

Large Language Models (LLMs) are recognized for their foundational abilities in commonsense reasoning and coherent language generation. Increasingly, these models are also fine-tuned for specific domains, such as code generation and mathematical problem-solving. This raises the question of whether the strengths of an anchor model can be combined with those of a domain-specific augmenting model to unlock new capabilities.

Traditionally, combining these capabilities required further pre-training or fine-tuning of the anchor model, a process often hampered by computational constraints. Keeping the models distinct offers a promising alternative: their established capabilities can be reused without the risk of catastrophic forgetting that further training can cause.

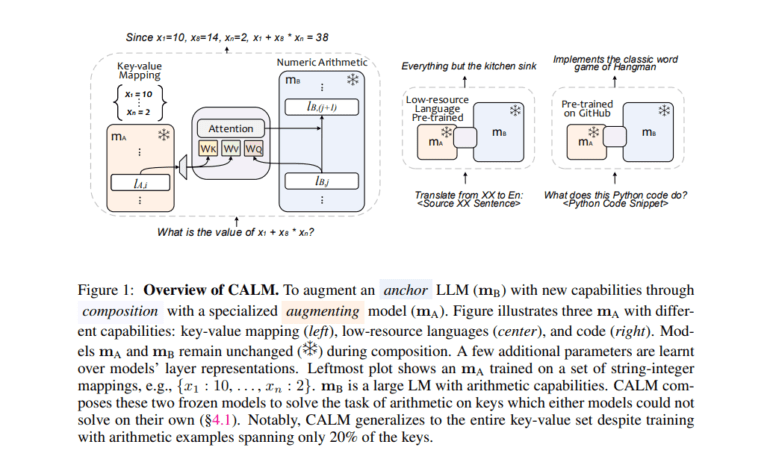

To address these training and data limitations, researchers at Google Research and Google DeepMind study a practical setting for model composition: they have access to an anchor model and one or more augmenting models, may not alter the weights of any of these models, and have only a limited dataset representing the models' combined capabilities. For this setting, they introduce the Composition to Augment Language Models (CALM) framework.

Rather than combining the augmenting and anchor models superficially, CALM introduces a small set of trainable parameters over the intermediate layer representations of both models. Training these parameters aims to find an effective fusion of the two models, so that the composed model handles complex tasks better than either model on its own. Because the base models' weights stay unchanged, each model's distinctive capabilities are retained.
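
The article does not spell out how these trainable parameters connect the two models. The following is a minimal, hypothetical sketch in plain Python, assuming that an augmenting layer's output is linearly projected to the anchor's width, read by the anchor layer through cross-attention, and added back to the anchor representation as a residual. The class name `CompositionLayer`, the weight names, and all dimensions are illustrative assumptions, not the CALM authors' code.

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows: int, cols: int, rng: random.Random, scale: float = 0.1) -> Matrix:
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class CompositionLayer:
    """Hypothetical bridge between one augmenting layer and one anchor layer.

    Only the matrices created here are trainable; the representations passed
    to forward() are assumed to come from frozen base models.
    """

    def __init__(self, d_aug: int, d_anchor: int, seed: int = 0):
        rng = random.Random(seed)
        self.d_anchor = d_anchor
        self.w_proj = random_matrix(d_aug, d_anchor, rng)  # augmenting width -> anchor width
        self.w_q = random_matrix(d_anchor, d_anchor, rng)  # queries from the anchor
        self.w_k = random_matrix(d_anchor, d_anchor, rng)  # keys from projected augmenting reps
        self.w_v = random_matrix(d_anchor, d_anchor, rng)  # values from projected augmenting reps

    def num_params(self) -> int:
        """Count the trainable bridge weights (base models excluded)."""
        mats = [self.w_proj, self.w_q, self.w_k, self.w_v]
        return sum(len(m) * len(m[0]) for m in mats)

    def forward(self, h_anchor: Matrix, h_aug: Matrix) -> Matrix:
        # 1. Project augmenting representations into the anchor's dimension.
        projected = matmul(h_aug, self.w_proj)        # (n_aug x d_anchor)
        # 2. Cross-attention: anchor tokens attend over augmenting tokens.
        q = matmul(h_anchor, self.w_q)                # (n_anchor x d_anchor)
        k = matmul(projected, self.w_k)               # (n_aug x d_anchor)
        v = matmul(projected, self.w_v)               # (n_aug x d_anchor)
        scale = 1.0 / math.sqrt(self.d_anchor)
        scores = [[s * scale for s in row] for row in matmul(q, transpose(k))]
        weights = [softmax(row) for row in scores]    # (n_anchor x n_aug)
        attended = matmul(weights, v)                 # (n_anchor x d_anchor)
        # 3. Residual: the anchor representation plus what it pulled in.
        return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(h_anchor, attended)]


rng = random.Random(42)
h_anchor = random_matrix(3, 8, rng, scale=1.0)  # 3 tokens, anchor width 8
h_aug = random_matrix(3, 4, rng, scale=1.0)     # 3 tokens, augmenting width 4
layer = CompositionLayer(d_aug=4, d_anchor=8)
out = layer.forward(h_anchor, h_aug)
print(len(out), len(out[0]))  # 3 8
print(layer.num_params())     # 224 (4*8 projection + 3 * 8*8 attention)
```

In this sketch the anchor supplies the queries and the augmenting model supplies keys and values, so the anchor decides what to pull in. The residual addition means that if the learned contribution were zero, the anchor's original representation would pass through unchanged. In a full model, one such layer would presumably sit at each chosen pair of anchor and augmenting layers, and only these bridge matrices would be updated on the limited composition dataset.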

CALM's practical applications include language inclusivity and code generation. For language inclusivity, the researchers compose an LLM with a model trained specifically on low-resource languages. The composition brings the LLM's advanced generation and reasoning abilities to these languages, yielding substantial improvements in translation and arithmetic reasoning. Notably, the composed model outperforms both base models, as well as versions of the LLM that underwent further pre-training or LoRA fine-tuning for low-resource languages.

For code generation, the researchers compose the LLM with a model trained on diverse open-source code spanning multiple programming languages. The composition draws on the LLM's underlying low-level logic and generation capabilities, and the composed model outperforms the individual base models on code explanation and code completion tasks.

Conclusion:

The CALM framework represents a significant advance because it fuses anchor and augmenting models efficiently, without modifying either model's weights. This approach holds promise for applications ranging from language inclusivity to code generation, and it could drive market demand for AI solutions that address complex tasks across diverse domains.