TL;DR:

- System 2 Attention (S2A) is a novel technique developed by Meta to improve the reasoning capabilities of large language models (LLMs).

- S2A refines user prompts, eliminating irrelevant information and focusing on task-relevant data.

- LLMs often struggle with prompts containing irrelevant or opinionated information because next-token training makes them sensitive to everything in the context.

- System 2 Attention leverages LLMs’ ability to follow instructions and generate context that emphasizes relevant material.

- This approach aligns with System 2 thinking, a deliberate and analytical problem-solving mode.

- S2A involves a two-step process of context modification and response generation, leading to more accurate outcomes.

- S2A variants cater to different scenarios, optimizing its effectiveness.

- Extensive testing demonstrates S2A’s ability to improve LLM objectivity and performance in various tasks.

- However, challenges remain, such as occasional susceptibility to spurious correlations and increased generation costs.

Main AI News:

In the fast-evolving landscape of large language models (LLMs), their ability to reason effectively has been a central focus of research. While LLMs have made remarkable strides in various domains, the challenge of enabling them to engage in logical problem-solving remains. Researchers at Meta have introduced a groundbreaking technique known as System 2 Attention (S2A), drawing inspiration from psychological research. S2A is designed to empower LLMs by refining the user’s prompt, eliminating misleading or irrelevant information, and directing their focus solely on task-relevant data. This strategic approach holds promise for significantly improving LLMs’ performance in question-answering and reasoning tasks.

A Quest for Enhanced Reasoning

The journey to enhance the reasoning capabilities of LLMs has seen its fair share of ups and downs. Various prompt engineering techniques have demonstrated the potential to boost performance. However, these models can stumble when confronted with prompts that include irrelevant or opinionated information. This vulnerability can be traced back to how transformers, the deep learning architecture at the core of LLMs, are trained. Because they learn by next-token prediction, they are sensitive to everything in the context. Consequently, if an entity is mentioned in a context, the model may predict the same entity later, even when it’s irrelevant. This overemphasis on repeated tokens can produce incorrect answers.

The Birth of System 2 Attention

To address these challenges, researchers have explored a novel approach to attention mechanisms, leveraging LLMs as natural language reasoners. They tap into LLMs’ ability to follow instructions, prompting them to regenerate the context so that it contains only relevant material. This context refinement process is what we now know as System 2 Attention (S2A). By enabling the model to determine which parts of the input are essential before generating a response, S2A aligns with the System 2 thinking concept that psychologist Daniel Kahneman describes in “Thinking, Fast and Slow.”

System 2 Thinking: A Path to Reliable Reasoning

System 2 thinking represents a slower, more deliberate, and analytical approach to problem-solving. It comes into play when tackling complex problems that demand careful consideration and planning or when learning new skills. Unlike System 1 thinking, which relies on mental shortcuts and instinct, System 2 processing involves more cognitive effort and tends to produce less biased and more logical outcomes. S2A harnesses the power of System 2 thinking within LLMs, aiming to mitigate issues arising from standard attention mechanisms.

The Mechanics of S2A

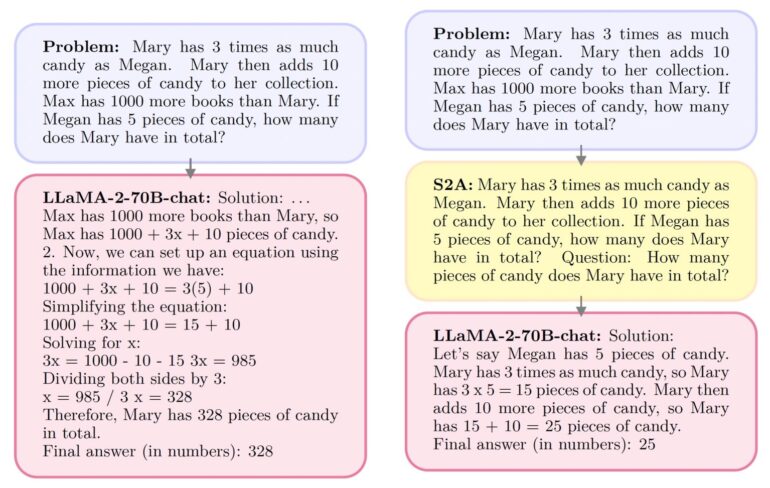

System 2 Attention is a two-step process designed for straightforward implementation. First, it rewrites the original context, removing irrelevant portions that might negatively impact the output. Second, the rewritten context is fed into the primary LLM to generate its response. In their specific implementation, researchers use instruction-tuned LLMs, which are already proficient at reasoning and generation tasks similar to those required for S2A. As a result, the rewriting step needs no extra training and can be implemented simply as an instruction in a prompt.
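The two steps can be sketched in a few lines of Python. This is a minimal illustration, not the researchers’ implementation: the `llm` argument is a hypothetical stand-in for any function that sends text to an instruction-tuned model and returns its reply, and the wording of `S2A_REWRITE_INSTRUCTION` is an assumption, since the exact prompt is not given here.

```python
from typing import Callable

# Hypothetical instruction text (an assumption, not the researchers' wording).
S2A_REWRITE_INSTRUCTION = (
    "Rewrite the following text, keeping only the parts that are relevant "
    "and unbiased for answering the question it contains. "
    "Do not answer the question.\n\n"
    "Text:\n{prompt}\n\nRewritten text:"
)


def s2a_answer(prompt: str, llm: Callable[[str], str]) -> str:
    """Two-step S2A sketch: regenerate the context, then answer from it."""
    # Step 1: ask the model to strip irrelevant or opinionated material.
    refined_context = llm(S2A_REWRITE_INSTRUCTION.format(prompt=prompt))
    # Step 2: generate the final response from the refined context only.
    return llm(refined_context)
```

Because `llm` is passed in as a plain callable, the same function works with any model client, and the two model calls make the added generation cost easy to see.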

Researchers have introduced multiple S2A variants in their work, tailoring the approach to different scenarios. They’ve found that, for short contexts or robust LLMs, partitioning the context and question may not be necessary. In such cases, a simple request for a non-partitioned rewrite of the query suffices. Another variant retains the original prompt and adds the S2A-generated query to it, making both the original context and its reinterpretation available for the model to access.
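The two variants described above might look like this in Python. Again, this is only a sketch under stated assumptions: `llm` is a hypothetical callable that wraps a model, and the instruction text and the layout of the combined prompt are illustrative choices rather than the researchers’ actual prompts.

```python
from typing import Callable

# Hypothetical instruction for a single, non-partitioned rewrite (an assumption).
REWRITE_INSTRUCTION = (
    "Rewrite the following text so that it contains only relevant, "
    "unbiased information. Do not answer it.\n\nText:\n"
)


def s2a_without_partition(prompt: str, llm: Callable[[str], str]) -> str:
    """Variant 1: request one rewrite, without separating context and question."""
    rewritten = llm(REWRITE_INSTRUCTION + prompt)
    return llm(rewritten)


def s2a_keep_original(prompt: str, llm: Callable[[str], str]) -> str:
    """Variant 2: give the model both the original prompt and the rewrite."""
    rewritten = llm(REWRITE_INSTRUCTION + prompt)
    # The section labels below are an illustrative layout, not a fixed format.
    combined = f"Original text:\n{prompt}\n\nRewritten text:\n{rewritten}"
    return llm(combined)
```

The second variant trades some robustness for completeness: the model can recover details the rewrite dropped, but it is also exposed again to the original distractions.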

Proven Effectiveness and Future Potential

Extensive testing of S2A across various problem types, from question answering to long-form reasoning and math word problems, underscores its ability to sift through irrelevant or opinionated information. S2A consistently guides LLMs toward objective answers, rivaling situations where models are provided with clean, distraction-free prompts. Moreover, LLMs equipped with S2A demonstrate improved objectivity in long-form generation tasks.

While S2A’s results are impressive, researchers acknowledge its occasional susceptibility to spurious correlations. Additionally, it adds a step and increases the cost of LLM generation, because the model must first regenerate the relevant context from the original prompt before it can answer. These are areas where future improvements are expected to refine S2A’s utility as a valuable addition to the toolbox of reasoning techniques for LLM applications.

Conclusion:

The introduction of System 2 Attention (S2A) holds promising implications for the market. Enhanced reasoning capabilities in large language models (LLMs) can drive more accurate and objective outcomes in applications requiring sophisticated language understanding. Businesses can leverage this technology to improve question-answering, reasoning, and content generation, ultimately enhancing user experiences and decision-making processes. As S2A continues to evolve, it may offer opportunities for innovation and efficiency across various industries.