TL;DR:

- Comparing natural languages and protein sequences has transformed the study of life’s language.

- Scaling up protein language models has a significant impact, reportedly greater than that of scaling NLP models.

- The misconception that larger models are inherently better leads to increased computational costs and limited accessibility.

- RITA and ProGen2 models demonstrate the relationship between model size and performance.

- The ESM-2 family of models highlights the up-scaling trend in protein language models.

- However, model size alone does not guarantee optimal performance; knowledge-guided optimization is crucial.

- The Ankh project integrates knowledge-guided optimization, unlocking the language of life through better representations of amino acids.

- Ankh offers pre-trained models (Ankh big and Ankh base) for protein engineering applications.

- The project promotes research innovation and accessibility through practical resources.

Main AI News:

In the effort to decipher the language of life, a groundbreaking approach has emerged: studying proteins by drawing parallels between natural languages and protein sequences. While this comparison has undeniably advanced the application of natural language processing (NLP) techniques to proteins, it’s important to recognize that the insights gained from NLP do not transfer seamlessly to protein language. Moreover, scaling up protein language models is said to have a far greater impact than scaling up NLP models, making it a critical frontier in scientific research and discovery.

The widespread adoption of larger NLP models, with their vast numbers of parameters and extensive training steps, has led to a common misconception that bigger models inherently yield more accurate and comprehensive representations. This fallacy has shifted the focus from seeking precise and relevant protein representations to prioritizing model size. However, this inclination toward larger models comes at a cost: heightened computational requirements that hinder accessibility. Notably, protein language models have recently undergone substantial growth, with model sizes escalating from 10^6 to 10^9 parameters (millions to billions). The benchmark for these models’ performance is set by ProtTrans’s ProtT5-XL-U50, an encoder-decoder transformer pre-trained on the UniRef50 database, which has 3 billion parameters for training and 1.5 billion for inference. This historical benchmark sheds light on the current state-of-the-art (SOTA) protein language models.
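To make the accessibility cost concrete, here is a minimal sketch that estimates the memory needed just to hold a model's weights. It assumes 4 bytes per parameter in 32-bit floating point and 2 bytes in 16-bit, and it ignores activations, optimizer state, and framework overhead, so real requirements are higher. The function name `weight_memory_gb` is a hypothetical helper, not part of any library.

```python
# Rough lower bound on the memory needed to store model weights.
# Assumption: 4 bytes per parameter (fp32) or 2 bytes (fp16); activations,
# optimizer state, and framework overhead are deliberately ignored.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gb(num_params: int, dtype: str = "fp32") -> float:
    """Return the size of the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Parameter counts quoted in the paragraph above.
models = {
    "10^6-parameter model": 10**6,
    "ProtT5-XL-U50, inference (1.5B)": 1_500_000_000,
    "ProtT5-XL-U50, training (3B)": 3_000_000_000,
}

for name, n_params in models.items():
    fp32 = weight_memory_gb(n_params, "fp32")
    fp16 = weight_memory_gb(n_params, "fp16")
    print(f"{name}: {fp32:.3f} GB in fp32, {fp16:.3f} GB in fp16")
```

Under these assumptions, the step from millions to billions of parameters moves the weights alone from a few megabytes to several gigabytes, which is one reason model size affects who can run these models.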

To establish a framework for scaling principles in protein sequence modeling, researchers have turned to the RITA family of language models. These models serve as an initial step toward understanding how model performance evolves in relation to size. RITA comprises four models of increasing size: 85 million, 300 million, 680 million, and 1.2 billion parameters. ProGen2, another noteworthy collection of protein language models, reinforces this pattern, with its largest model reaching 6.4 billion parameters. Adding to the discourse, ESM-2, a family of general-purpose protein language models, shows a similar correlation between size and performance across models of 650 million, 3 billion, and 15 billion parameters. This recent addition further supports the trend of up-scaling protein models.
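As a quick illustration of the up-scaling trend, the sketch below computes the size ratio between successive RITA models and compares the largest RITA model with the ProGen2 and ESM-2 figures. The parameter counts are taken from the paragraph above and are not independently verified here; `growth_factors` is a hypothetical helper.

```python
# Parameter counts as quoted in the text (not independently verified here).
rita_sizes = [85_000_000, 300_000_000, 680_000_000, 1_200_000_000]
progen2_largest = 6_400_000_000
esm2_largest = 15_000_000_000


def growth_factors(sizes):
    """Ratio of each model size to the one before it."""
    return [later / earlier for earlier, later in zip(sizes, sizes[1:])]


factors = growth_factors(rita_sizes)
for earlier, later, factor in zip(rita_sizes, rita_sizes[1:], factors):
    print(f"{earlier / 1e6:.0f}M -> {later / 1e6:.0f}M: x{factor:.2f}")

# How far the largest quoted models sit beyond the RITA family.
print(f"RITA smallest -> largest: x{rita_sizes[-1] / rita_sizes[0]:.1f}")
print(f"ProGen2 vs largest RITA: x{progen2_largest / rita_sizes[-1]:.1f}")
print(f"ESM-2 vs largest RITA: x{esm2_largest / rita_sizes[-1]:.1f}")
```

The step-to-step ratios are not constant, so the figures describe a steady increase in size rather than a fixed multiplier between models.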

However, the simplistic notion that larger protein language models equate to superior performance overlooks crucial factors such as computing costs and the design and deployment of task-agnostic models. This inadvertently raises barriers to innovative research and limits the potential to scale. While model size undoubtedly influences the pursuit of research goals, it is not the sole determining factor. The same holds for pre-training datasets: larger datasets are not always superior to smaller ones of higher quality. Scaling up language models is therefore a conditional process, one that calls for a protein knowledge-guided approach to optimization rather than reliance on model size alone.

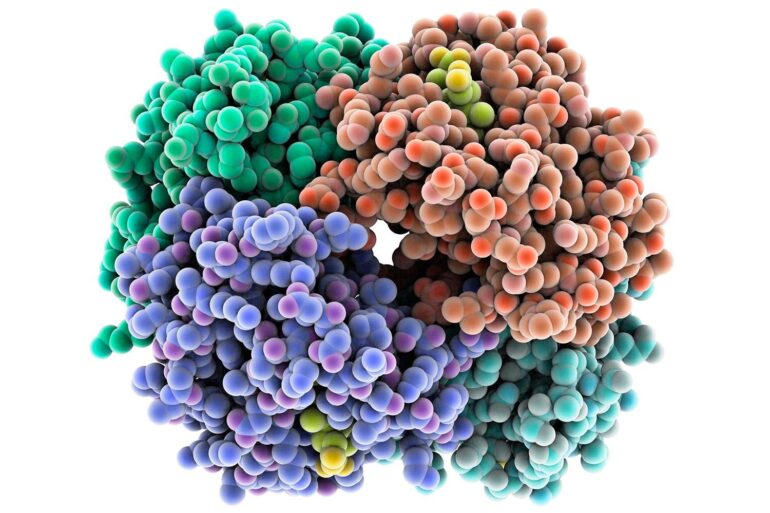

The primary objective of this study is to integrate knowledge-guided optimization within an iterative empirical framework, enabling widespread access to research innovation through practical resources. By unraveling the language of life and enhancing the representation of amino acids – the fundamental “letters” of this language – the researchers have aptly named their project “Ankh,” drawing inspiration from the Ancient Egyptian symbol for the key to life. The Ankh project has two pivotal components that validate its generality and optimization.

The first component involves a generation study focused on protein engineering, encompassing both High-N (family-based) and One-N (single sequence-based) applications, alongside results that reportedly surpass the current state-of-the-art across various structure and function benchmarks. The second component revolves around identifying the optimal attributes for achieving such performance, encompassing not only the model architecture but also the software and hardware used throughout the model’s development, training, and deployment. To cater to diverse application needs, the researchers have introduced two pre-trained models, Ankh big and Ankh base, each with different computational demands. For convenience, the flagship model, Ankh big, is simply referred to as “Ankh.” These pre-trained models are available on the project’s GitHub page, which also provides detailed instructions for running the codebase.

Conclusion:

The rise of AI-powered protein language models and their application in general-purpose sequence modeling has significant implications for the market. This emerging technology enables researchers to unlock the language of life by deciphering protein sequences and improving their understanding of biological processes. However, it is essential for market participants to recognize that simply increasing model size does not guarantee superior performance. Instead, the focus should be on knowledge-guided optimization, ensuring that protein representations are accurate and relevant.

This approach not only enhances research innovation but also addresses the challenges of computational costs and accessibility. As the field continues to evolve, businesses should stay informed about the latest advancements in AI-driven protein language models and explore opportunities to leverage this technology for various applications, such as protein engineering and drug discovery. By embracing these transformative models, organizations can gain a competitive edge and contribute to advancements in life sciences and biotechnology.