TL;DR:

- QUILT-1M is a histopathology dataset of one million image-text pairs, sourced primarily from educational YouTube videos.

- This dataset fills a crucial void in histopathology, offering unique, rich textual descriptions and multiple perspectives for comprehensive understanding.

- Curated with a mix of models, algorithms, and human knowledge databases, and extended with data from Twitter, research papers, and PubMed, QUILT-1M was checked for quality against several metrics.

- QUILTNET, the model trained on QUILT-1M, surpasses existing models such as BiomedCLIP in various assessments, including zero-shot, linear probing, and cross-modal retrieval tasks.

- QUILT-1M promises transformative potential for computer scientists and histopathologists alike.

Main AI News:

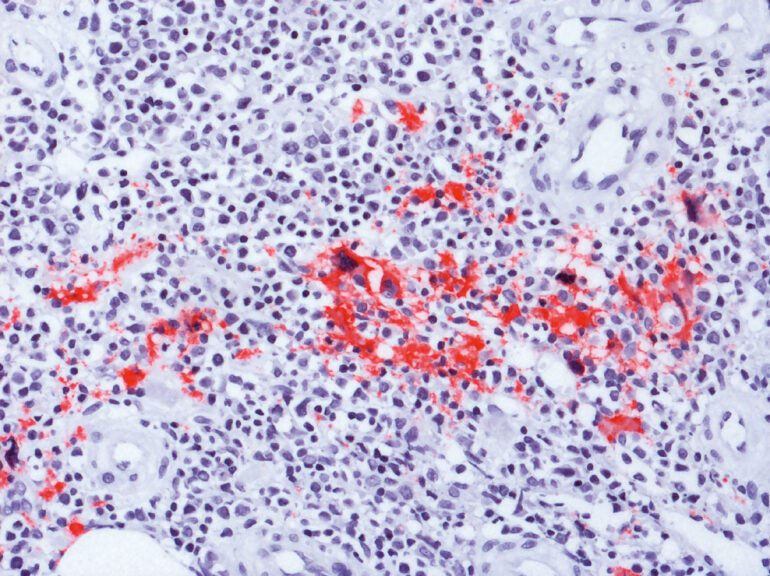

In histopathology, the search for comprehensive datasets has been akin to looking for a needle in a haystack. A promising solution has now emerged in the form of QUILT-1M. This pioneering dataset capitalizes on the treasure trove of knowledge tucked away on YouTube, specifically in educational histopathology videos. QUILT-1M assembles one million paired image-text samples, making it the most extensive vision-language histopathology dataset assembled to date.

The dearth of such datasets has long impeded progress in histopathology, a field that relies on rich, interwoven representations to capture the intricacies of diverse disease subtypes. QUILT-1M brings three advantages. First, it draws on a source that had not been tapped before, so its content is distinct from existing histopathology resources. Second, its text comes from the narration of experts in educational videos, which yields detailed descriptions rather than short captions. Third, each image is paired with multiple sentences, offering several perspectives on the same histopathological image.

Curating this dataset was no ordinary feat; it required a combination of cutting-edge models, handcrafted algorithms, and human knowledge databases. To broaden its scope, the team supplemented the YouTube material with data from other sources, including Twitter, research papers, and PubMed. The dataset’s quality was then evaluated on a range of metrics: the error rate of automatic speech recognition (ASR), the precision of language model corrections, and sub-pathology classification accuracy.
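To make the first of those metrics concrete, here is a minimal sketch of one common ASR error measure, word error rate (WER). The article does not say which error rate the team reported, so treating it as WER is an assumption, and the function name `word_error_rate` and the sample transcripts are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Assumption: plain WER with lowercasing and whitespace tokenization.
    The QUILT-1M authors may have measured ASR errors differently.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: a medical term misheard and one word dropped.
expert_said = "invasive ductal carcinoma of the breast"
asr_heard = "invasive ductile carcinoma of breast"
print(f"WER: {word_error_rate(expert_said, asr_heard):.3f}")
```

Specialist vocabulary such as "ductal" is exactly where general-purpose speech recognition tends to slip, which is why a language model correction step, also mentioned above, is a natural follow-up.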

In terms of performance, models trained on QUILT-1M outshine established models such as BiomedCLIP across an array of assessments, including zero-shot classification, linear probing, and cross-modal retrieval tasks that span a range of sub-pathology types. QUILTNET, the model at the heart of the initiative, comes out ahead in no fewer than twelve zero-shot tasks covering eight distinct sub-pathologies. The research team underscores the potential of QUILT-1M as a resource for computer scientists and histopathologists alike.
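For readers unfamiliar with two of these assessments, the sketch below shows how zero-shot classification and cross-modal retrieval are typically scored for a CLIP-style model: images and texts are embedded in a shared space and compared by cosine similarity. This is not QUILTNET's evaluation code. The tiny two-dimensional embeddings are made up for illustration, and the function names are hypothetical; a real evaluation would obtain embeddings from the model's image and text encoders. Linear probing, which trains a small classifier on frozen image features, is not covered here.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products equal cosine similarity."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def zero_shot_predict(image_emb: np.ndarray, class_text_emb: np.ndarray) -> np.ndarray:
    """Assign each image to the class whose text prompt embedding is most similar."""
    sims = l2_normalize(image_emb) @ l2_normalize(class_text_emb).T
    return sims.argmax(axis=1)


def recall_at_k(image_emb: np.ndarray, text_emb: np.ndarray, k: int) -> float:
    """Image-to-text retrieval: fraction of images whose paired text
    (same row index) appears among the k most similar texts."""
    sims = l2_normalize(image_emb) @ l2_normalize(text_emb).T
    top_k = np.argsort(-sims, axis=1)[:, :k]
    targets = np.arange(len(image_emb))[:, None]
    return float((top_k == targets).any(axis=1).mean())


# Made-up embeddings standing in for encoder outputs (assumption).
image_emb = np.array([[1.0, 0.1], [0.1, 1.0], [0.9, 0.2]])
class_text_emb = np.array([[1.0, 0.0], [0.0, 1.0]])     # one prompt per class
caption_emb = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]])  # row i pairs with image i

print("zero-shot predictions:", zero_shot_predict(image_emb, class_text_emb))
print("Recall@1:", recall_at_k(image_emb, caption_emb, k=1))
print("Recall@2:", recall_at_k(image_emb, caption_emb, k=2))
```

No labeled training images are used in either function, which is what makes the zero-shot setting a demanding test of how well the image and text representations align.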

Conclusion:

The advent of QUILT-1M in the histopathology arena signifies a monumental leap forward. This extensive vision-language dataset is poised to redefine research possibilities and revolutionize histopathological models. It not only addresses the scarcity of comprehensive datasets but also sets new benchmarks for quality and performance. For the market, this means a fresh wave of opportunities for innovation and insight, benefiting both computer scientists and histopathologists in their quest to understand and combat complex diseases.