TL;DR:

- Researchers at Osaka University conducted a detailed study of the mechanics of human facial expressions.

- The team analyzed 44 distinct facial actions using 125 tracking markers.

- The findings show how complex the facial movements that convey genuine human emotions are.

- Implications for improving artificial facial expressions in robotics and computer graphics.

- Potential for enhancing facial recognition technology and medical diagnostics.

Main AI News:

In recent research relevant to AI and robotics, a team of scientists from Osaka University in Japan has examined the mechanics of human facial expressions in depth. Their aim is to help androids recognize and display emotions more accurately, bringing closer a future in which artificial faces can convey emotion convincingly.

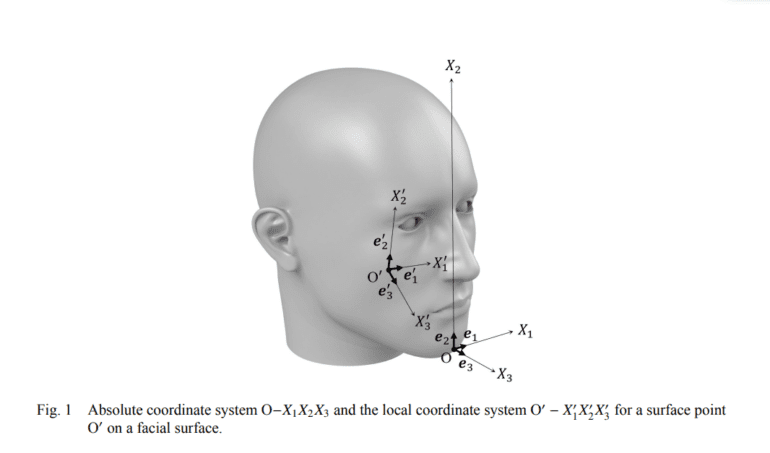

The study, published in the Mechanical Engineering Journal, was a collaboration among multiple institutions. It examined 44 distinct facial actions using 125 tracking markers, analyzing the fine details of human facial expressions, from subtle muscle contractions to the interplay of the various tissues beneath the skin.

The researchers found that facial expressions arise from a complex interplay of local deformations in layered tissue, including muscle fibers and fat. Even a seemingly simple smile involves a cascade of small motions. This complexity is what makes these nuances so hard to replicate artificially. From an engineering perspective, however, the human face is a remarkable information display device, able to convey a wide range of emotions and intentions.

The data from this study can guide researchers working on artificial faces, whether digital or physical, such as the faces of androids. A better understanding of where the face is under tension and where it is compressed should lead to more realistic and accurate artificial expressions. Deformation analysis of the complex structure beneath the skin shows how seemingly simple facial actions can produce sophisticated expressions through the stretching and compressing of skin.
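To make "stretching and compressing" concrete, here is a minimal sketch of one common way to quantify skin deformation from tracking markers: compare the distance between two neighboring markers on a neutral face with their distance during an expression. This is an illustrative assumption, not the researchers' published method; the marker IDs, coordinates, neighbor pairs, and the 1% threshold below are all hypothetical.

```python
import math

# Hypothetical marker positions in millimetres: marker id -> (x, y, z).
Point = tuple[float, float, float]
Edge = tuple[int, int]


def edge_strains(
    neutral: dict[int, Point],
    deformed: dict[int, Point],
    edges: list[Edge],
) -> dict[Edge, float]:
    """Return the engineering strain (L - L0) / L0 for each pair of neighboring markers.

    L0 is the marker-to-marker distance on the neutral face and L is the distance
    during the expression. Positive values mean stretching, negative values compression.
    """
    strains: dict[Edge, float] = {}
    for a, b in edges:
        rest_length = math.dist(neutral[a], neutral[b])
        new_length = math.dist(deformed[a], deformed[b])
        strains[(a, b)] = (new_length - rest_length) / rest_length
    return strains


def classify(strain: float, tolerance: float = 0.01) -> str:
    """Label a strain value; the 1% tolerance is an arbitrary, hypothetical threshold."""
    if strain > tolerance:
        return "stretched"
    if strain < -tolerance:
        return "compressed"
    return "unchanged"


if __name__ == "__main__":
    # Three hypothetical markers: a neutral face vs. a made-up expression.
    neutral = {0: (0.0, 0.0, 0.0), 1: (10.0, 0.0, 0.0), 2: (0.0, 10.0, 0.0)}
    deformed = {0: (0.0, 0.0, 0.0), 1: (11.0, 0.0, 0.0), 2: (0.0, 9.0, 0.0)}
    edges = [(0, 1), (0, 2), (1, 2)]

    for edge, strain in edge_strains(neutral, deformed, edges).items():
        print(edge, f"{strain:+.3f}", classify(strain))
```

Positive strain marks skin under tension and negative strain marks skin under compression. A full analysis of 125 markers might instead define neighbors from a triangulated surface mesh and compute full strain tensors rather than per-edge length changes.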

Beyond robotics, this research could also improve facial recognition technology and medical diagnostics. Today, diagnoses that involve facial movement often rely on doctors’ intuitive observations, which leaves room for error. A more precise understanding of facial dynamics could help close that gap.

Although the study analyzed the facial expressions of only one individual, it is a foundational step toward understanding facial motion across diverse faces. As roboticists work to have machines interpret and convey emotions, this research could help refine artificial facial movements in several domains, including computer graphics for the entertainment industry. Such progress could reduce the ‘uncanny valley’ effect, in which artificial faces that look almost, but not quite, human cause discomfort.

Conclusion:

This pioneering research on human facial expressions is a significant step toward narrowing the gap between artificial and authentic emotional displays in robotics and computer graphics. It could also have a broad impact across industries by improving facial recognition technology and medical diagnostics. Businesses in AI, robotics, and entertainment should follow these findings closely and consider how to apply them to stay at the forefront of innovation.