TL;DR:

- MoD-SLAM is a monocular dense SLAM method that targets unbounded scenes using only RGB images.

- It eliminates the need for RGB-D input, enhancing scalability and accuracy in large scenes.

- It uses neural radiance fields (NeRF) and loop closure detection for detailed and accurate reconstruction.

- Key components include a depth estimation module, a depth distillation process, and advanced spatial encoding techniques.

- Outperforms existing neural SLAM systems like NICE-SLAM and GO-SLAM in tracking accuracy and reconstruction fidelity.

Main AI News:

In Simultaneous Localization And Mapping (SLAM), achieving real-time, precise, and scalable dense mapping has long been a challenge. Existing neural SLAM methods handle bounded scenes well but typically rely on RGB-D input, while RGB-only approaches struggle to recover the true scale of a scene and accumulate scale drift in expansive environments.

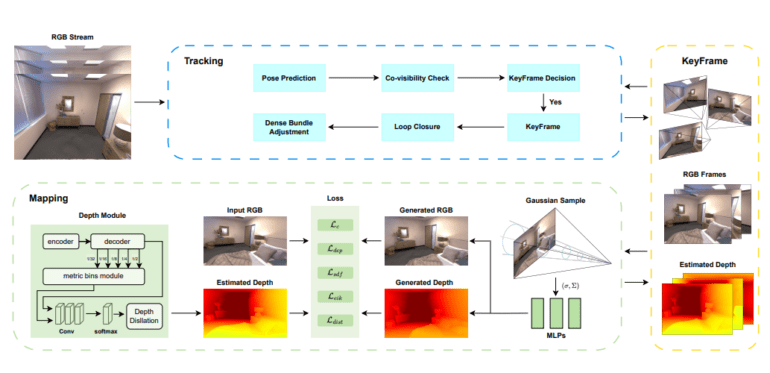

However, a new method tackles these problems: MoD-SLAM. It is designed to handle unbounded scenes using only RGB images. Unlike predecessors that depend on RGB-D input, MoD-SLAM takes a monocular dense mapping approach, combining neural radiance fields (NeRF) with loop closure detection to deliver detailed and precise reconstructions.

MoD-SLAM addresses the shortcomings of current methods by sidestepping the need for RGB-D input, thus boosting its scalability and versatility. It achieves this feat through a depth estimation module and depth distillation process, which generate accurate depth maps from RGB images, mitigating scale reconstruction inaccuracies. Moreover, to tackle scenes lacking well-defined boundaries, the system employs advanced techniques such as multivariate Gaussian encoding and reparameterization, ensuring stability while capturing detailed spatial information.
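
The article does not spell out the reparameterization formula. As a minimal sketch, assuming a scene contraction of the kind popularized by mip-NeRF 360 (an assumption for illustration, not confirmed here as MoD-SLAM's exact formulation), the idea of squeezing an unbounded scene into a bounded volume might look like this. The function name `contract` and the sample points are hypothetical.

```python
import numpy as np

def contract(x, eps=1e-9):
    """Map points in R^3 into a ball of radius 2.

    Points with norm <= 1 are left unchanged; points farther away are
    pulled in so that their norm approaches, but never reaches, 2.
    This is an assumed, illustrative contraction (mip-NeRF 360 style).
    """
    x = np.asarray(x, dtype=float)
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    safe = np.maximum(norm, eps)  # avoid dividing by zero at the origin
    contracted = (2.0 - 1.0 / safe) * (x / safe)
    return np.where(norm <= 1.0, x, contracted)

# Hypothetical sample points: one near the camera, two far away.
points = np.array([
    [0.5, 0.0, 0.0],
    [10.0, 0.0, 0.0],
    [1e6, 0.0, 0.0],
])
print(contract(points))
```

Nearby geometry keeps its original coordinates, while arbitrarily distant points land inside a fixed radius, so a network with a bounded input domain can still represent them.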

Each core component of MoD-SLAM targets a specific failure mode: depth estimation and distillation address scale ambiguity, the spatial encoding handles scenes without clear boundaries, and loop closure detection corrects the scale drift that accumulates during tracking, further enhancing accuracy.

Experimental results on both synthetic and real-world datasets support these claims. Compared with existing neural SLAM systems such as NICE-SLAM and GO-SLAM, MoD-SLAM demonstrates superior tracking accuracy and reconstruction fidelity, particularly in large, unbounded scenes.

Conclusion:

MoD-SLAM represents a significant advancement in SLAM technology, offering a solution for real-time, precise, and scalable dense mapping in unbounded scenes. Its ability to operate with just RGB images broadens its applicability across industries, from robotics to augmented reality. With superior performance demonstrated on both synthetic and real-world datasets, MoD-SLAM could provide a competitive edge to businesses that rely on accurate mapping and 3D reconstruction.