TL;DR:

- Large Language Models (LLMs) struggle to interpret tabular data, but instruction tuning, prompting, and agent-based methods can improve their performance.

- These approaches improve adaptability and performance on new tasks, but they require significant computational resources and careful dataset curation.

- Researchers at Renmin University of China propose these methods to address LLM limitations and report improved accuracy and efficiency across a range of table-related tasks.

Main AI News:

Large Language Models (LLMs) handle data formats such as text, images, and audio well, but tabular data remains a challenge. To be useful for tasks such as database queries, spreadsheet computations, and report generation from tables, an LLM must interpret tabular inputs correctly. Researchers at Renmin University of China have addressed this challenge by examining instruction tuning, prompting, and agent-based methods for LLMs.

Current techniques mostly train or fine-tune LLMs for one specific table-related task. Such models often lack robustness and generalize poorly to new tasks. The study proposes instruction tuning, prompting, and agent-based strategies to mitigate these limitations.

Here is how each of the three approaches helps an LLM work with tabular data:

- Instruction tuning: This method fine-tunes an LLM on a broad range of datasets, which makes it more adaptable and improves its performance on tasks it has not seen before.

- Prompting techniques: These techniques convert tables into text prompts while preserving their semantics, so that the LLM can process the tabular data effectively.

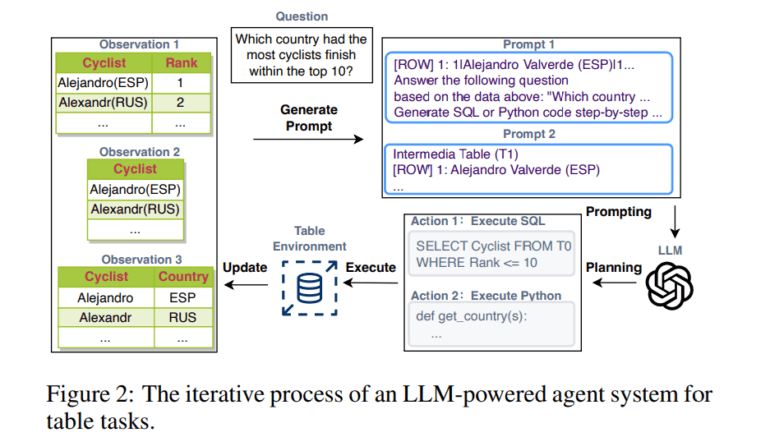

- Agent-based approach: This approach lets an LLM interact with external tools and carry out complex table-related tasks through an iterative cycle of observation, action planning, and reflection.
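To make the prompting idea concrete, here is a minimal sketch of one common way to turn a table into prompt text: serializing it as a Markdown grid and appending a question. This is an illustration only, not the researchers' own method. The function names `table_to_markdown` and `build_prompt`, the prompt wording, and the sample data are all assumptions made for this example.

```python
from typing import Sequence


def table_to_markdown(headers: Sequence[str], rows: Sequence[Sequence[object]]) -> str:
    """Serialize a table as Markdown text: a header line, a separator, one line per row."""
    header_line = "| " + " | ".join(headers) + " |"
    separator = "| " + " | ".join("---" for _ in headers) + " |"
    body = ["| " + " | ".join(str(cell) for cell in row) + " |" for row in rows]
    return "\n".join([header_line, separator, *body])


def build_prompt(headers: Sequence[str], rows: Sequence[Sequence[object]], question: str) -> str:
    """Combine the serialized table with a question (hypothetical prompt wording)."""
    table_text = table_to_markdown(headers, rows)
    return (
        "Answer the question using only the table below.\n\n"
        f"{table_text}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Made-up sample data, used only to show the output format.
    headers = ["product", "units_sold"]
    rows = [["pen", 3], ["notebook", 5]]
    print(build_prompt(headers, rows, "Which product sold the most units?"))
```

Keeping the header row and the column order intact is what preserves the table's meaning in text form. Other serializations, such as JSON or CSV, follow the same pattern.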

These methods show promising results in both accuracy and efficiency. However, they can require substantial computational resources and careful dataset curation.
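The agent-based cycle of planning an action, observing the result, and reflecting can be sketched as a small loop. The example below is a toy illustration under stated assumptions: `scripted_policy` is a hypothetical, hard-coded stand-in for the LLM, and the tools, table, and question are invented for this sketch. It does not reproduce the study's implementation.

```python
from typing import Callable, Dict, List, Optional, Tuple

Table = List[Dict[str, float]]


def column_sum(table: Table, column: str) -> float:
    """Tool: sum one numeric column."""
    return sum(row[column] for row in table)


def column_max(table: Table, column: str) -> float:
    """Tool: largest value in one numeric column."""
    return max(row[column] for row in table)


# External tools the agent may call (hypothetical registry).
TOOLS: Dict[str, Callable[[Table, str], float]] = {"sum": column_sum, "max": column_max}


def scripted_policy(question: str, columns: List[str], history: List[str]) -> Tuple[str, str]:
    """Stand-in for the LLM: choose a (tool, column) pair for the next action."""
    tool = "sum" if "total" in question.lower() else "max"
    if not history:
        # First attempt: naively take the last word of the question as the column.
        return tool, question.rstrip("?").split()[-1]
    # Reflection: the previous attempt failed, so fall back to a column that exists.
    return tool, columns[0]


def run_agent(table: Table, question: str, policy, max_steps: int = 3) -> Tuple[Optional[float], List[str]]:
    """Plan an action, execute it, record the observation, and retry on failure."""
    columns = list(table[0].keys())
    history: List[str] = []
    for _ in range(max_steps):
        tool_name, column = policy(question, columns, history)  # plan
        try:
            result = TOOLS[tool_name](table, column)  # act
        except KeyError as err:
            history.append(f"error: unknown name {err}")  # observe the failure
            continue
        history.append(f"{tool_name}({column}) = {result}")  # observe the result
        return result, history
    return None, history


if __name__ == "__main__":
    # Made-up table and question, for illustration only.
    table = [{"units": 3, "price": 2.0}, {"units": 5, "price": 1.5}]
    answer, steps = run_agent(table, "What is the total number of items?", scripted_policy)
    print(answer)
    print(steps)
```

In a real system, the policy would be an LLM call that reads the history of observations. The loop structure is the part this sketch is meant to show.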

The study aims to provide a practical framework for automating table-related tasks with LLMs. The researchers report notable gains in accuracy and efficiency across a range of table tasks, while acknowledging the remaining drawbacks: high computational demands, the effort of dataset curation, and limits on how well the methods generalize.

Conclusion:

Advances in automating table-related tasks with LLMs present a substantial market opportunity. Businesses can use these methods to streamline data processing and improve its efficiency and accuracy. Before adopting them, however, they should weigh the costs and limitations, in particular the computational resources required and the limits on generalizability. With those caveats in mind, LLM-based handling of tabular data can benefit businesses across many industries.