TL;DR:

- Orthogonal Finetuning (OFT) is a new way to finetune text-to-image diffusion models that gives users more precise control over the images they generate.

- OFT adapts a model by applying orthogonal transformations to its weights. This preserves the model's pretrained ability to generate semantically faithful, photorealistic images from textual descriptions.

- The authors report higher generation quality and faster, more stable convergence than existing finetuning methods.

- Potential applications include digital art, advertising, gaming, education, the automotive industry, medical imaging, and personalized content generation.

- Open challenges include scalability, compositionality, and parameter efficiency.

Main AI News:

Text-to-image diffusion models are at the center of AI image generation thanks to their ability to produce photorealistic images from textual descriptions. These models interpret a text prompt and turn it into a matching image, a task that until recently required a human designer. The technology has clear potential in industries ranging from graphic design to virtual reality, wherever detailed images must match a written description.

A key challenge, however, is finetuning these models to gain precise control over what they generate. Developers must balance high-fidelity image generation against faithful interpretation of the text: the model should follow the prompt closely without losing its general generative ability, which matters most in applications that demand specific image attributes or styles. Current finetuning methods adjust the network's neuron weights, either by training them with a small learning rate or by re-parameterizing them. Both approaches, however, often degrade the generative performance the model acquired during pretraining.

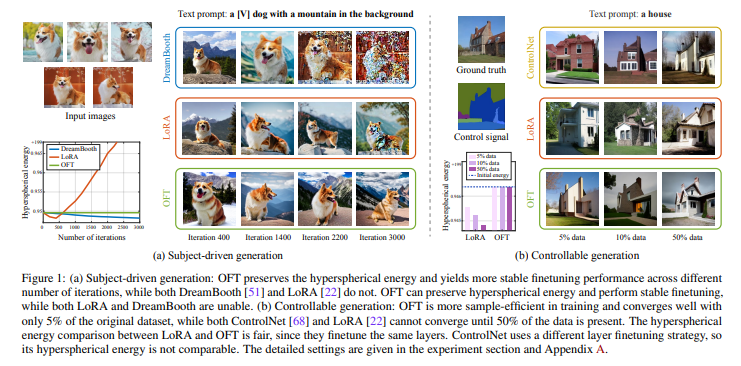

Researchers from the MPI for Intelligent Systems, the University of Cambridge, the University of Tübingen, Mila, Université de Montréal, the Bosch Center for Artificial Intelligence, and The Alan Turing Institute have proposed Orthogonal Finetuning (OFT) to give users finer control over text-to-image diffusion models. OFT adapts the model by applying an orthogonal transformation to its weights, which preserves the hyperspherical energy: a measure of how the neurons in a layer are spread over the unit hypersphere, and therefore of the relational structure among them. Keeping this structure intact preserves the model's semantic generation capabilities, so images generated from text prompts are more precise and reliable.
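To make the preservation argument concrete, here is a minimal NumPy sketch, not the authors' code. It assumes each column of a weight matrix `W0` is one neuron's weight vector, that the OFT update has the form `W = R @ W0` with `R` orthogonal, and it uses one common form of hyperspherical energy: the sum of inverse Euclidean distances between every pair of unit-normalized neurons. The helper names `pairwise_cosines`, `hyperspherical_energy`, and `random_orthogonal` are hypothetical.

```python
import numpy as np

def pairwise_cosines(W: np.ndarray) -> np.ndarray:
    """Cosine of the angle between every pair of neurons (columns of W)."""
    U = W / np.linalg.norm(W, axis=0, keepdims=True)  # unit-normalize each neuron
    return U.T @ U

def hyperspherical_energy(W: np.ndarray) -> float:
    """Sum over i != j of 1 / ||u_i - u_j||, where u_i is neuron i on the unit sphere."""
    U = W / np.linalg.norm(W, axis=0, keepdims=True)
    diff = U[:, :, None] - U[:, None, :]      # diff[:, i, j] = u_i - u_j
    dist = np.linalg.norm(diff, axis=0)       # (n, n) matrix of pairwise distances
    off_diag = ~np.eye(W.shape[1], dtype=bool)
    return float(np.sum(1.0 / dist[off_diag]))

def random_orthogonal(d: int, rng: np.random.Generator) -> np.ndarray:
    """A random d x d orthogonal matrix from the QR decomposition of a Gaussian matrix."""
    Q, _ = np.linalg.qr(rng.standard_normal((d, d)))
    return Q

rng = np.random.default_rng(0)
d, n = 16, 8                          # 8 neurons, each a 16-dimensional vector
W0 = rng.standard_normal((d, n))      # stand-in for pretrained weights
R = random_orthogonal(d, rng)
W = R @ W0                            # OFT-style multiplicative update

print(np.allclose(pairwise_cosines(W0), pairwise_cosines(W)))           # True
print(np.isclose(hyperspherical_energy(W0), hyperspherical_energy(W)))  # True
```

Because `R.T @ R` is the identity, every inner product between neurons is unchanged, so angles, norms, and the energy all survive the update. By contrast, an additive update such as `W0 + delta` generally changes both the angles and the energy.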

Four aspects of the method stand out:

- Simplified Finetuning with OFT:

- Core Methodology: OFT adapts large text-to-image diffusion models to downstream tasks by applying orthogonal transformations to their weights, which leaves the hyperspherical energy unchanged. This is what preserves the models' semantic generation ability.

- Advantages of Orthogonal Transformation: An orthogonal transformation preserves the pairwise angles between neurons in each layer, and this is key to keeping the generated images semantically intact.

- Constrained Orthogonal Finetuning (COFT): An extension of OFT, COFT adds a constraint that limits how far the finetuned model may deviate from the pretrained one, further improving the stability and accuracy of finetuning.

- Enhanced Generation Quality and Efficiency:

- Subject-Driven and Controllable Generation: The authors apply OFT to two tasks: subject-driven generation, which produces images of a specific subject from a few reference images and a text prompt, and controllable generation, where the model also takes additional control signals as input.

- Improved Sample Efficiency and Convergence Speed: In the authors' experiments, OFT achieved better generation quality and converged faster than existing finetuning methods, while also training more stably and efficiently.

- Practical Applications and Broader Impact:

- Digital Art and Graphic Design: OFT could help artists and designers quickly create intricate visuals from textual descriptions, accelerating the creative process.

- Advertising and Marketing: Marketers could use OFT to generate unique visual content from specific textual inputs, making it faster to prototype advertising concepts and visuals for diverse themes and messages.

- Virtual Reality and Gaming: OFT could help create immersive environments and character models from descriptive text, streamlining the design process and bringing fresh ideas to game development.

- Educational Content Creation: OFT could produce illustrative diagrams, historical scenes, or scientific visualizations from textual descriptions, giving learners precise and engaging visuals.

- Automotive Industry: OFT could visualize car models with different described features, supporting design decisions and customer presentations.

- Medical Imaging and Research: OFT could generate visual representations of complex medical concepts or conditions described in text, potentially assisting in education and diagnosis.

- Personalized Content Generation: OFT could create customized images from individual users' text inputs, increasing user engagement in apps and digital platforms.

- Open Challenges and Future Directions:

- Scalability and Speed: Scaling OFT remains difficult, largely because the Cayley parametrization used to construct the orthogonal matrices requires a matrix inverse, which becomes computationally expensive for large matrices.

- Exploring Compositionality: Researchers want to understand how orthogonal matrices learned in several separate OFT finetuning tasks could be combined while preserving the knowledge from all of those tasks.

- Enhancing Parameter Efficiency: Researchers are still looking for ways to improve OFT's parameter efficiency that are less biased and more effective than current approaches.
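The scalability, compositionality, and parameter-efficiency points above, together with the COFT constraint, can be illustrated with another minimal NumPy sketch. It assumes the Cayley parametrization R = (I + Q)(I - Q)^{-1} with a skew-symmetric Q, which always yields an orthogonal R and gives R = I when Q = 0. It also assumes a block-diagonal R as one way to cut the parameter count and, for COFT, a projection of each block's parameters onto a Frobenius-norm ball of radius `eps` to keep R near the identity. The block-diagonal structure and this form of the constraint are assumptions for illustration, not a claim about the authors' exact implementation, and the helpers `cayley`, `project_to_ball`, and `block_diag_orthogonal` are hypothetical.

```python
import numpy as np
from typing import List, Optional

def cayley(params: np.ndarray) -> np.ndarray:
    """Cayley transform: R = (I + Q)(I - Q)^{-1} is orthogonal for skew-symmetric Q."""
    Q = 0.5 * (params - params.T)          # keep only the skew-symmetric part (Q.T == -Q)
    I = np.eye(Q.shape[0])
    # I - Q is always invertible for skew-symmetric Q; this inverse is the costly step.
    return (I + Q) @ np.linalg.inv(I - Q)

def project_to_ball(params: np.ndarray, eps: float) -> np.ndarray:
    """Assumed COFT-style constraint: rescale params so that ||params||_F <= eps."""
    norm = np.linalg.norm(params)
    return params if norm <= eps else params * (eps / norm)

def block_diag_orthogonal(block_params: List[np.ndarray],
                          eps: Optional[float] = None) -> np.ndarray:
    """Hypothetical helper: assemble a block-diagonal orthogonal R from per-block parameters."""
    blocks = [cayley(p if eps is None else project_to_ball(p, eps)) for p in block_params]
    size = sum(blk.shape[0] for blk in blocks)
    R = np.zeros((size, size))
    start = 0
    for blk in blocks:
        k = blk.shape[0]
        R[start:start + k, start:start + k] = blk   # place each orthogonal block on the diagonal
        start += k
    return R

rng = np.random.default_rng(0)
d, r = 12, 3                       # 12-dimensional neurons, 3 diagonal blocks
b = d // r                         # block size 4
W0 = rng.standard_normal((d, 5))   # stand-in pretrained weights, 5 neurons as columns

# Task A: constrained (COFT-style) orthogonal finetuning.
R_a = block_diag_orthogonal([rng.standard_normal((b, b)) for _ in range(r)], eps=0.1)
W_a = R_a @ W0
print(np.allclose(R_a.T @ R_a, np.eye(d)))   # True: R_a is orthogonal
print(np.allclose(W_a.T @ W_a, W0.T @ W0))   # True: neuron norms and angles preserved
# ||R - I||_F <= 2 * eps per block, so the whole R stays near the identity.
print(np.linalg.norm(R_a - np.eye(d)) <= 2 * 0.1 * np.sqrt(r))  # True

# Parameter efficiency: free parameters in a skew-symmetric Q, dense vs. block-diagonal.
full = d * (d - 1) // 2
blocked = r * b * (b - 1) // 2
print(full, blocked)                         # 66 18

# Compositionality: naively chaining a second task's R keeps the result orthogonal.
R_b = block_diag_orthogonal([rng.standard_normal((b, b)) for _ in range(r)], eps=0.1)
R_ab = R_b @ R_a
print(np.allclose(R_ab.T @ R_ab, np.eye(d)))  # True
```

Two details are worth noting. The only expensive operation is `np.linalg.inv`, applied here to small blocks rather than one large matrix. And although the product `R_b @ R_a` is still orthogonal, and so still preserves the angles between neurons, orthogonality alone says nothing about whether both tasks' knowledge survives; matrix products also depend on their order, which is part of why compositionality remains an open question.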

Conclusion:

Orthogonal Finetuning (OFT) offers a promising way to give users precise control over text-to-image generation, bringing text and image creation closer together. Its effects could reach across many industries, changing how we create visuals, tell stories, and interact with technology. As researchers work on the open challenges of scalability, compositionality, and parameter efficiency, the method's full potential remains to be seen.