TL;DR:

- Alibaba DAMO Academy’s GTE-tiny is a lightweight, fast text embedding model built on the BERT architecture and trained on a large corpus of relevant text pairs.

- It is smaller than its sibling gte-small while still performing well, which makes it a strong choice for many downstream tasks.

- GTE-tiny is well suited to semantic search, clustering, and other tasks that rely on sentence embeddings.

- Applications include enhancing search engines, simplifying question-answering systems, and streamlining text summarization.

- Accessible through Hugging Face, GTE-tiny is versatile and easy to integrate into existing software.

- Alibaba DAMO Academy continues to optimize its performance for evolving market needs.

Main AI News:

In the ever-evolving landscape of AI, Alibaba DAMO Academy has introduced GTE-tiny, a compact and efficient text embedding model aimed at a wide range of downstream tasks. Built on the BERT architecture, GTE-tiny stands out for its speed and versatility. It was trained on a large corpus of relevant text pairs spanning diverse domains and practical applications.

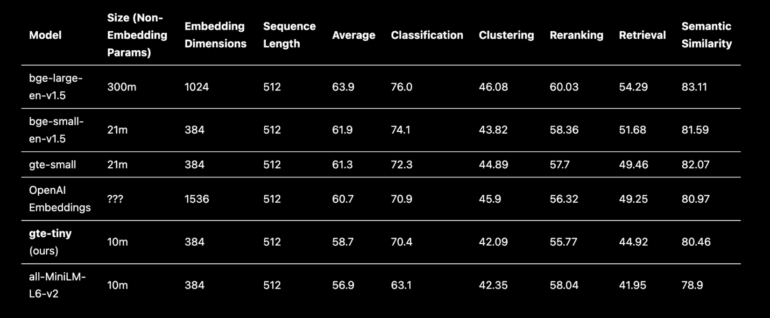

GTE-tiny is smaller than its sibling, gte-small, yet still performs well: it is designed to balance a compact size against accuracy. Its performance is comparable to that of the all-MiniLM-L6-v2 model, which makes it an attractive alternative for practitioners. GTE-tiny is also compatible with ONNX, giving you more options for integration.

At its core, GTE-tiny is a sentence embedding model. It maps sentences and paragraphs to a dense 384-dimensional vector space, which makes it well suited to tasks such as semantic search and clustering. It achieves this with a smaller footprint and faster inference than its predecessor, thenlper/gte-small.
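The step from a BERT encoder’s per-token outputs to a single sentence vector can be sketched in plain Python. This is a minimal sketch under an assumption: that GTE-tiny pools the same way the gte-small model card shows, by averaging the final hidden states over non-padding tokens and then L2-normalizing the result. The token vectors below are toy 2-dimensional values standing in for real 384-dimensional model outputs.

```python
import math
from typing import List, Sequence


def mean_pool(token_vectors: Sequence[Sequence[float]],
              attention_mask: Sequence[int]) -> List[float]:
    """Average the token vectors whose attention-mask entry is 1.

    Padding positions (mask 0) are skipped, so padding does not
    change the pooled sentence vector.
    """
    if not token_vectors:
        raise ValueError("no token vectors given")
    dim = len(token_vectors[0])
    totals = [0.0] * dim
    kept = 0
    for vector, mask in zip(token_vectors, attention_mask):
        if mask:
            for i, value in enumerate(vector):
                totals[i] += value
            kept += 1
    if kept == 0:
        raise ValueError("attention mask selects no tokens")
    return [total / kept for total in totals]


def l2_normalize(vector: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(value * value for value in vector))
    if norm == 0.0:
        return list(vector)  # leave an all-zero vector unchanged
    return [value / norm for value in vector]


# Three toy "token" vectors; the last one is padding and is ignored.
tokens = [[1.0, 2.0], [5.0, 6.0], [100.0, 100.0]]
mask = [1, 1, 0]
sentence_vector = l2_normalize(mean_pool(tokens, mask))
```

Normalizing at encode time means later similarity searches can use a plain dot product instead of a full cosine computation.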

The applications of GTE-tiny are as diverse as they are impactful. Here are just a few ways this powerful model can supercharge your downstream processes:

- Information retrieval: GTE-tiny helps your systems search a data repository and quickly locate relevant information.

- Semantic textual similarity: Recognize when differently worded texts share the same meaning, streamlining content analysis.

- Text reranking: Reorder a list of candidate passages by relevance to a query so the best matches come first.

- Question answering: Help your AI system match user queries to the passages most likely to answer them.

- Text summarization: Identify the most representative sentences in lengthy documents, surfacing key insights for quick decision-making.

- Machine translation: Help bridge language barriers in translation workflows, supporting communication across borders.
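Semantic similarity, retrieval, and reranking all come down to comparing embedding vectors. The sketch below assumes the query and candidate embeddings have already been computed; the short hand-written vectors are hypothetical stand-ins for GTE-tiny’s 384-dimensional outputs. Each candidate is scored by cosine similarity to the query, and the list is reordered best-first.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rerank(query_vector: Sequence[float],
           candidates: Sequence[Tuple[str, Sequence[float]]]) -> List[Tuple[str, float]]:
    """Return (text, score) pairs sorted from most to least similar to the query."""
    scored = [(text, cosine_similarity(query_vector, vector))
              for text, vector in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


# Hypothetical pre-computed embeddings.
query = [1.0, 0.0]
candidates = [
    ("unrelated passage", [0.0, 1.0]),
    ("partly relevant passage", [1.0, 1.0]),
    ("closely matching passage", [2.0, 0.0]),
]
for text, score in rerank(query, candidates):
    print(f"{score:.3f}  {text}")
```

Cosine similarity ignores vector length, so two texts are judged similar by the direction of their embeddings rather than their magnitude.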

GTE-tiny is a strong choice for tasks that demand efficiency without a major sacrifice in quality. Its small size also suits applications such as on-device text embedding for mobile devices and real-time search engines.

Let’s delve deeper into some exemplary applications:

- Search Engine Enhancement: GTE-tiny elevates your search engine’s capabilities by embedding user queries and documents into a shared vector space. The result? A more efficient and precise retrieval of relevant materials.

- Question-Answering Simplified: Encode questions and candidate passages into a shared vector space so your question-answering system can retrieve the passages most likely to contain the answer.

- Text Summarization Made Easy: Use GTE-tiny’s embeddings to pick out the most representative sentences of lengthy documents and assemble concise extractive summaries. It saves time and effort in content curation.
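Since GTE-tiny outputs vectors rather than text, its natural role in summarization is extractive: choosing which existing sentences to keep. One common heuristic, swapped in here for illustration because the article does not name a method, keeps the sentences whose embeddings lie closest to the document’s mean embedding (its centroid). The sentence vectors are hypothetical toy values.

```python
import math
from typing import List, Sequence


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, returning 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def extractive_summary(sentences: Sequence[str],
                       vectors: Sequence[Sequence[float]],
                       k: int) -> List[str]:
    """Keep the k sentences most similar to the document centroid.

    Selected sentences are returned in their original order so the
    summary still reads naturally.
    """
    if len(sentences) != len(vectors):
        raise ValueError("need exactly one vector per sentence")
    if not sentences or k <= 0:
        return []
    dim = len(vectors[0])
    centroid = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    by_score = sorted(range(len(sentences)),
                      key=lambda i: _cosine(vectors[i], centroid),
                      reverse=True)
    chosen = sorted(by_score[:k])  # restore document order
    return [sentences[i] for i in chosen]


doc = ["GTE-tiny is a small embedding model.",
       "It maps text to dense vectors.",
       "The weather was pleasant that day."]
# Hypothetical sentence embeddings: the first two sentences are on-topic.
vecs = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0]]
summary = extractive_summary(doc, vecs, k=2)
```

Returning the chosen sentences in document order, rather than score order, is a deliberate choice: it keeps the summary readable without any text generation.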

For those eager to explore GTE-tiny’s potential, the model is available on Hugging Face, a widely used open platform for sharing machine learning models. That makes it straightforward to integrate into new or existing software.
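Integration mostly means placing an encode step in front of a similarity search. The sketch below is a hypothetical in-memory index (`InMemoryIndex` and `toy_encode` are illustrative names, not part of any library) that accepts any function mapping a list of strings to a list of vectors. With the sentence-transformers library, one would presumably pass an adapter such as `lambda texts: model.encode(texts).tolist()`, where `model` is a `SentenceTransformer` loaded with GTE-tiny’s model ID from its Hugging Face page; that wiring is an assumption, and the toy encoder below only stands in for a real model.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Any function that turns a batch of texts into a batch of vectors.
Encoder = Callable[[List[str]], Sequence[Sequence[float]]]


def _normalize(vector: Sequence[float]) -> List[float]:
    """Scale to unit length (all-zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)


class InMemoryIndex:
    """Hypothetical minimal semantic-search index over an arbitrary encoder."""

    def __init__(self, encode: Encoder) -> None:
        self._encode = encode
        self._texts: List[str] = []
        self._vectors: List[List[float]] = []

    def add(self, texts: List[str]) -> None:
        """Embed documents once and store unit-length vectors."""
        vectors = [_normalize(v) for v in self._encode(texts)]
        self._texts.extend(texts)
        self._vectors.extend(vectors)

    def search(self, query: str, top_k: int = 3) -> List[Tuple[str, float]]:
        """Return up to top_k (text, cosine score) pairs, best first."""
        q = _normalize(self._encode([query])[0])
        scores = [sum(a * b for a, b in zip(q, v)) for v in self._vectors]
        best = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)
        return [(self._texts[i], scores[i]) for i in best[:top_k]]


def toy_encode(texts: List[str]) -> List[List[float]]:
    """Illustrative stand-in for a real model: counts of 'a' and 'b'."""
    return [[float(t.count("a")), float(t.count("b"))] for t in texts]


index = InMemoryIndex(toy_encode)
index.add(["aaa", "bbb", "ab"])
results = index.search("a", top_k=2)
```

Normalizing once at insertion time means each query needs only dot products. For large collections, a vector database or an approximate-nearest-neighbor library would replace this linear scan.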

GTE-tiny is relatively new, but it is already proving useful in a range of downstream applications. Alibaba DAMO Academy is dedicated to optimizing its performance so that GTE-tiny continues to evolve and to serve researchers and developers working on text embedding models and their downstream tasks. More than just a model, GTE-tiny is a practical tool for AI-driven text processing.

Conclusion:

GTE-tiny’s emergence as a compact yet capable text embedding model marks a significant development for the market. Its efficiency across a range of downstream tasks, combined with easy integration, makes it an attractive option for businesses seeking AI-driven improvements in data retrieval, content analysis, and user interaction. As the model continues to evolve, it is likely to become an increasingly valuable asset for researchers and developers in the field.