TL;DR:

- Large Language Models (LLMs) are gaining popularity and continuously evolving with advancements in deep learning and AI.

- LLMs are fine-tuned using direct training signals to enhance their performance in tasks like classification accuracy, question answering, and document summarization.

- Learn from Textual Interactions (LETI) is a new fine-tuning paradigm that enables LLMs to learn from textual feedback and understand why errors occur.

- LETI provides textual feedback to assess the correctness of the model’s outputs and identify errors in generated code, similar to the iterative process of software development.

- LETI uses a Solution Evaluator, such as a Python interpreter, to evaluate the generated solutions and provide feedback.

- The training data for fine-tuning LLMs includes natural language instructions, LM-generated programs, and textual feedback.

- LETI significantly improves the performance of LLMs on code generation tasks without relying on ground-truth outputs for training.

- Textual feedback allows LLMs to achieve the same performance as binary feedback but with fewer gradient steps.

- LETI enhances language models by leveraging detailed textual feedback to learn from mistakes and improve performance in code generation tasks.

Main AI News:

With the rise in popularity of Large Language Models (LLMs), the field of AI research is witnessing constant advancements and discoveries. These deep learning technologies have permeated various domains, continually evolving to provide enhanced capabilities. LLMs undergo extensive training on vast amounts of raw text, and to optimize their performance, these models undergo a process called fine-tuning. During this process, LLMs are trained on specific tasks using direct training signals that measure their performance, such as classification accuracy, question answering, or document summarization.

In recent times, a new paradigm known as Learn from Textual Interactions (LETI) has emerged, exploring the untapped potential of Large Language Models to learn from textual interactions and feedback. LETI allows language models to understand not only when they make mistakes but also the reasons behind those errors. This approach enables LLMs to overcome the limitations of solely relying on labeled data and scalar rewards.

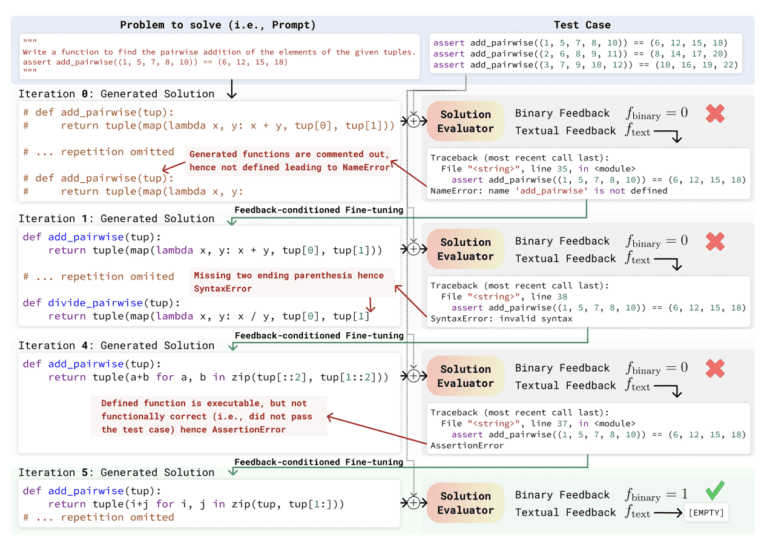

The team of researchers behind LETI’s development emphasizes how this approach provides textual feedback to the language model, helping to assess the accuracy of its outputs through binary labels. It effectively identifies and explains errors within the generated code. The LETI paradigm mirrors the iterative process of software development, where developers write a program, test it, and refine it based on feedback. Similarly, LETI fine-tunes LLMs by providing textual feedback that pinpoints bugs and errors.

During the fine-tuning process, the model is presented with a problem description in natural language, after which it generates a set of potential solutions. These solutions are then evaluated by a Solution Evaluator using a set of test cases. The researchers employed a Python interpreter to leverage the error messages and stack traces obtained from the generated code as textual feedback. The Solution Evaluator essentially acts as this Python interpreter.

The training data for fine-tuning the model consists of three main components: natural language instructions, LM-generated programs, and textual feedback. When the generated program fails to provide a solution, feedback is provided to the LLM. Conversely, if the generated program offers an accurate solution, a reward token in the form of binary feedback is given to the model to reinforce its performance. This generated textual feedback is instrumental in the fine-tuning process of the LM, known as Feedback-Conditioned Fine-Tuning.

To evaluate the effectiveness of LETI, the researchers employed a dataset called the Multiple Big Programming Problems (MBPP) dataset, which focuses on code generation tasks. The results demonstrate that LETI significantly improves the performance of two base LMs with different scales on the MBPP dataset, all without relying on ground-truth outputs for training. On the HumanEval dataset, LETI achieves similar or superior performance compared to the base LMs when faced with unseen problems. Additionally, researchers discovered that using textual feedback allows the model to achieve the same level of performance with fewer gradient steps in comparison to binary feedback.

Conlcusion:

LETI presents a powerful approach to fine-tuning language models, enabling them to learn from detailed textual feedback. It empowers these models to grow from their mistakes and enhance their performance in tasks like code generation. The potential of LETI appears highly promising, opening up new avenues for improving the capabilities of language models in various domains.