TL;DR:

- Scientific Machine Learning (SciML) combines classical modeling with machine learning.

- Three primary subfields of SciML: PDE solvers, PDE discovery, and operator learning.

- Operator learning aims to identify or approximate an unknown operator, typically the solution operator of a dynamical system or PDE.

- Challenges in operator learning include neural operator design and optimization.

- Researchers from the University of Cambridge and Cornell University provide a comprehensive guide to operator learning, covering PDE selection, neural network architectures, numerical solvers, training data, and optimization.

- Numerical PDE solvers, training data quality, and optimization techniques are crucial in operator learning.

- Neural operators extend conventional deep learning to mappings between function spaces.

- Operator learning promises to reshape our understanding of dynamical systems and PDEs through neural networks.

Main AI News:

Artificial Intelligence (AI) and deep learning have opened new frontiers across diverse domains, including computer vision, language modeling, healthcare, and biology. Emerging alongside them is Scientific Machine Learning (SciML), which combines classical modeling based on Partial Differential Equations (PDEs) with the approximation power of machine learning.

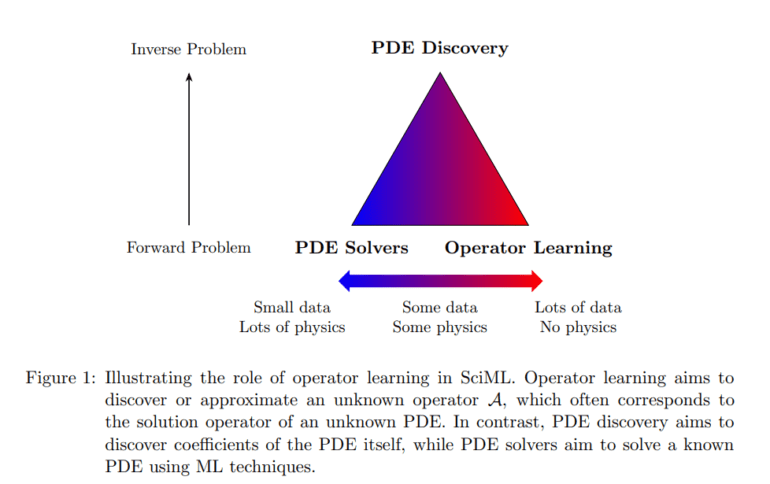

SciML comprises three primary subfields: PDE solvers, PDE discovery, and operator learning. PDE discovery aims to determine the coefficients of a PDE from empirical data, while PDE solvers use neural networks to approximate the solution of a known PDE. Operator learning, the third subfield, aims to identify or approximate an unknown operator, often the solution operator of a differential equation.
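To make the idea of a solution operator concrete, consider a standard textbook case (chosen here for illustration, not taken from the study): Poisson's equation with zero boundary values. The symbols below, including the domain, the forcing f, the solution u, and the number of samples N, are assumptions made for this sketch.

```latex
\begin{aligned}
&-\nabla^2 u = f \ \text{in } \Omega, \qquad u = 0 \ \text{on } \partial\Omega, \\
&\mathcal{A} : f \mapsto u \quad \text{(solution operator)}, \\
&\text{data: } \{(f_j, u_j)\}_{j=1}^{N}, \quad u_j = \mathcal{A}(f_j), \\
&\text{goal: find } \hat{\mathcal{A}} \text{ such that } \hat{\mathcal{A}}(f) \approx \mathcal{A}(f) \text{ for new forcings } f.
\end{aligned}
```

In this setting, operator learning never needs a closed-form expression for the operator; it only needs enough input and output pairs to approximate it.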

Operator learning extracts information about a partial differential equation (PDE) or dynamical system from available data. It faces several challenges, including designing a suitable neural operator, solving the resulting optimization problem efficiently, and ensuring that the learned operator generalizes to new data.

In a recent study, researchers from the University of Cambridge and Cornell University present a step-by-step mathematical guide to operator learning. The study covers the selection of suitable PDEs, the comparison of neural network architectures, the use of numerical PDE solvers, the management of training datasets, and efficient optimization techniques.

Operator learning is particularly useful when the goal is to understand the properties of a dynamical system or PDE. It is well suited to complex or nonlinear interactions that are computationally expensive for conventional methods. The research team stresses the importance of choosing neural network architectures designed to take functions, rather than discrete vectors, as both inputs and outputs. The choice of activation functions, number of layers, and weight matrices matters, because these elements strongly influence how well the network captures the behavior of the underlying system.
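What it means for a layer to take functions rather than vectors can be sketched in a few lines of NumPy. The example below is an illustration under stated assumptions, not the study's code: the Gaussian `kernel` is a hypothetical fixed stand-in for a learned kernel, and `integral_layer` is a made-up helper that approximates an integral transform with the trapezoidal rule. Because the layer is defined on the function itself, it can be evaluated on grids of different resolution and should give nearly the same values at shared points.

```python
import numpy as np

def kernel(x, y):
    # Hypothetical fixed kernel; in a neural operator this would be learned.
    return np.exp(-10.0 * (x - y) ** 2)

def f(x):
    # Example input function (an assumption for this sketch).
    return np.sin(2.0 * np.pi * x)

def integral_layer(func, n):
    """Evaluate u(x) = tanh(integral over [0, 1] of k(x, y) func(y) dy)
    on an n-point uniform grid using trapezoidal quadrature."""
    x = np.linspace(0.0, 1.0, n)
    w = np.full(n, 1.0 / (n - 1))      # trapezoidal weights
    w[0] *= 0.5
    w[-1] *= 0.5
    K = kernel(x[:, None], x[None, :])  # kernel sampled on the grid, (n, n)
    return x, np.tanh(K @ (w * func(x)))

# Same layer, two discretizations of the same input function.
x_coarse, u_coarse = integral_layer(f, 65)
x_fine, u_fine = integral_layer(f, 513)

# Every 8th fine-grid point coincides with a coarse-grid point.
gap = np.max(np.abs(u_coarse - u_fine[::8]))
print(f"max difference at shared points: {gap:.2e}")
```

The difference between the two outputs comes only from quadrature error, which shrinks as the grids are refined. A standard fully connected layer, by contrast, has a weight matrix tied to one fixed input length.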

The study also stresses that operator learning is closely tied to numerical PDE solvers, which supply accurate approximations of PDE solutions and help speed up the learning process. Integrating these solvers well is essential for obtaining accurate results quickly. The quality and quantity of training data strongly affect how well operator learning performs, and the choice of boundary conditions and numerical PDE solver plays a central role in producing reliable training datasets. The process also requires formulating an optimization problem to find the best neural network parameters. Choosing a loss function that measures the discrepancy between predicted and actual outputs is essential. This step involves selecting an optimization method, managing computational cost, and evaluating the results carefully.
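The pipeline described above (solver, training data, loss, optimization, evaluation) can be sketched on a toy problem. The example is a simplification under stated assumptions, not the study's method: the PDE is the 1D Poisson equation with zero boundary values, the "solver" is a finite-difference matrix solve, the model is a plain matrix `A` instead of a neural operator, and the optimizer is closed-form least squares instead of gradient-based training. All names (`sample_forcing`, `solve_pde`, `relative_l2`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 63                                   # number of interior grid points
h = 1.0 / (n + 1)
x = np.linspace(h, 1.0 - h, n)

# Finite-difference matrix for -u'' with u(0) = u(1) = 0.
L = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2

def sample_forcing(num, modes=8):
    """Random smooth forcings: combinations of the first few sine modes."""
    coeffs = rng.standard_normal((num, modes))
    basis = np.sin(np.pi * np.arange(1, modes + 1)[:, None] * x[None, :])
    return coeffs @ basis                # shape (num, n)

def solve_pde(F):
    """Numerical solver that labels the data: solves L u = f for each row."""
    return np.linalg.solve(L, F.T).T     # shape (num, n)

def relative_l2(pred, true):
    """Loss: relative L2 discrepancy between predicted and actual outputs."""
    return np.linalg.norm(pred - true) / np.linalg.norm(true)

# Training and held-out data come from the same solver and forcing family.
F_train, F_test = sample_forcing(200), sample_forcing(50)
U_train, U_test = solve_pde(F_train), solve_pde(F_test)

# Optimization: least-squares fit of A minimizing ||F_train @ A - U_train||.
# rcond discards directions not covered by the training forcings.
A, *_ = np.linalg.lstsq(F_train, U_train, rcond=1e-10)

# Evaluation on forcings the model has not seen.
test_error = relative_l2(F_test @ A, U_test)
print(f"relative test error: {test_error:.2e}")
```

The fit generalizes here only because the test forcings are drawn from the same family as the training forcings. That is one reason the choice of training data matters: a forcing outside the span of the sampled sine modes would not be covered by the learned matrix.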

The researchers also describe neural operators, a central concept in operator learning. These operators are analogous to neural networks but act on infinite-dimensional inputs. They learn mappings between function spaces by extending the foundations of conventional deep learning. Neural operators are defined as compositions of integral operators and nonlinear functions, which makes it possible to work with functions instead of vectors. Several architectures, including DeepONets and Fourier neural operators, have been proposed to address the computational cost of evaluating integral operators or approximating their kernels.
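A rough sketch of how a Fourier-type layer sidesteps the cost of evaluating an integral operator is shown below. It is a simplified illustration in NumPy, not a reference implementation of Fourier neural operators: the function `fourier_layer`, the random untrained weights `R`, `W`, and `b`, and the two-channel test input are all assumptions, and the lifting and projection layers used in practice are left out. The integral operator is applied as a multiplication on the lowest Fourier modes, followed by a pointwise linear term and a ReLU nonlinearity.

```python
import numpy as np

def fourier_layer(v, R, W, b):
    """One Fourier-type layer on a periodic 1D grid.

    v: (n, c) values of c channel functions at n grid points
    R: (k, c, c) complex weights acting on the k lowest Fourier modes
    W: (c, c) pointwise linear weights, b: (c,) bias
    """
    n, k = v.shape[0], R.shape[0]
    v_hat = np.fft.rfft(v, axis=0)                  # (n // 2 + 1, c)
    out_hat = np.zeros_like(v_hat)
    # Mix channels mode by mode; higher modes are truncated to zero.
    out_hat[:k] = np.einsum("kio,ki->ko", R, v_hat[:k])
    spectral = np.fft.irfft(out_hat, n=n, axis=0)   # back to the grid, (n, c)
    return np.maximum(spectral + v @ W + b, 0.0)    # ReLU nonlinearity

def sample(n):
    """Two band-limited channel functions sampled on an n-point periodic grid."""
    x = np.arange(n) / n
    return np.stack([np.sin(2 * np.pi * x), np.cos(4 * np.pi * x)], axis=1)

rng = np.random.default_rng(0)
k, c = 4, 2
R = rng.standard_normal((k, c, c)) + 1j * rng.standard_normal((k, c, c))
W = rng.standard_normal((c, c))
b = rng.standard_normal(c)

# The same weights applied at two resolutions.
out_64 = fourier_layer(sample(64), R, W, b)
out_128 = fourier_layer(sample(128), R, W, b)
print(np.allclose(out_64, out_128[::2], atol=1e-10))
```

Because the weights live on Fourier modes rather than on grid points, the same parameters can be applied at either resolution, and for this band-limited input the two outputs agree at the shared grid points. The FFT also reduces the cost of the layer from quadratic to roughly n log n in the number of grid points.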

Conclusion:

The emergence of operator learning within Scientific Machine Learning (SciML) presents opportunities for businesses across many industries. By combining classical modeling with neural networks, SciML improves problem-solving capabilities for complex dynamical systems and partial differential equations. As organizations seek more efficient and accurate solutions in fields like healthcare, engineering, and finance, the insights from this research offer a roadmap for putting operator learning to use, with the potential for innovation and competitive advantage in the market.