TL;DR:

- Large Language Models (LLMs) perform well on complex reasoning tasks without explicit fine-tuning.

- Researchers examine how pre-training via next-token prediction contributes to reasoning ability.

- A Bayesian framework explains how LLMs acquire reasoning abilities through pre-training.

- Implicit reasoning paths seen during pre-training support inference and solution generation.

- Localized structures in the data enable reasoning paths between concepts.

- The analysis focuses on mathematical and logical reasoning and reports gains in both domains.

- An LM pre-trained on random walk paths from knowledge graphs (KGs) outperforms traditional path ranking algorithms.

- Using existing chain-of-thought (CoT) training data improves the LM's performance on math word problems.

Main AI News:

Large Language Models (LLMs) have recently shown strong performance on complex reasoning problems. These range from solving mathematical problems to applying logic, and even extend to tasks that require world knowledge, all without explicit fine-tuning. Researchers have set out to understand how pre-training via next-token prediction gives rise to these reasoning capabilities.

In a recent study, a team of researchers focused on the origin of reasoning ability, that is, the capacity to derive new conclusions from previously acquired knowledge. The emergent capabilities of LLMs are attributed largely to intensive pre-training. The study's main goal is to examine how pre-training data shapes the way language models reason.

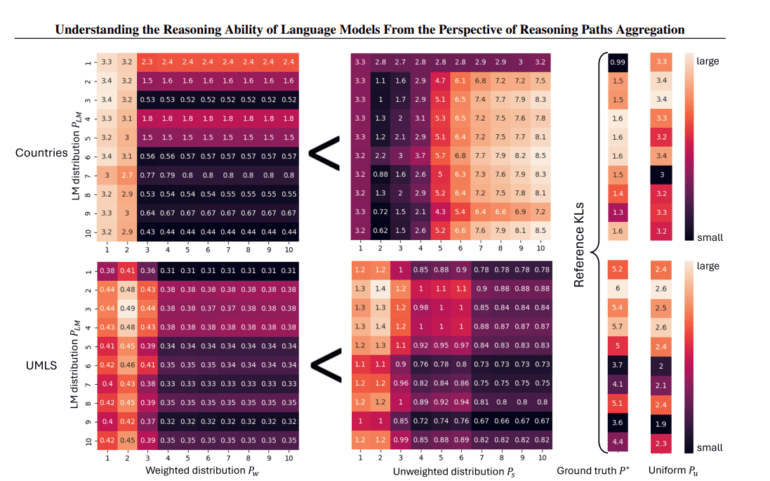

According to the research team, the investigation adopts a Bayesian framework to explain how pre-training on next-token prediction can give LLMs their reasoning abilities. The hypothesis is that LLMs use the next-token prediction objective to aggregate implicit reasoning paths seen during pre-training. In real-world data, a reasoning path can be understood as a span of text that connects two concepts. Under this hypothesis, an LLM may follow these paths to move from one concept to another at inference time, producing either explicit chain-of-thought (CoT) solutions or implicit reasoning with no visible intermediate output.
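To make the idea of aggregating reasoning paths concrete, here is a minimal sketch. It is not the study's formulation: it assumes, purely for illustration, that reasoning paths are random walks over a small concept graph with known transition probabilities, and it sums the probability of every path that reaches a target concept. The graph `EDGES` and the function `aggregate_paths` are hypothetical names.

```python
from collections import defaultdict

# Hypothetical transition probabilities between concepts (made up for illustration).
EDGES = {
    "A": {"B": 0.5, "C": 0.5},
    "B": {"D": 1.0},
    "C": {"D": 0.4, "E": 0.6},
}

def aggregate_paths(edges, start, target, max_hops):
    """Sum the probabilities of all walks from `start` that first reach
    `target` within `max_hops` hops."""
    total = 0.0
    frontier = {start: 1.0}  # probability mass on each node after k hops
    for _ in range(max_hops):
        next_frontier = defaultdict(float)
        for node, mass in frontier.items():
            for neighbor, prob in edges.get(node, {}).items():
                if neighbor == target:
                    total += mass * prob  # this path ends at the target
                else:
                    next_frontier[neighbor] += mass * prob
        frontier = next_frontier
    return total

# Two paths lead from A to D: A -> B -> D and A -> C -> D.
score = aggregate_paths(EDGES, "A", "D", max_hops=2)
```

In this toy graph, the score for reaching `D` from `A` combines both two-hop paths, so a concept pair linked by several paths scores higher than a pair linked by only one.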

Prior studies have highlighted the importance of localized structure in the dependencies between variables in training data, especially for CoT reasoning. This investigation argues further that when a reasoning path connects two concepts, their co-occurrence in the data produces a graph-like localized structure.

To test these hypotheses, the study concentrates on two common forms of reasoning: logical and mathematical. For logical reasoning, the analysis examines reasoning over knowledge graphs (KGs), with random walk paths on the graph serving as pre-training data. The findings indicate that an LM pre-trained on random walk paths derived from a KG can infer missing links more effectively than conventional path ranking algorithms.
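As a rough illustration of what random walk paths from a KG might look like as pre-training text, the sketch below samples walks over a toy graph and flattens each into a token sequence. The triples, the helper names `build_adjacency` and `sample_walk`, and the uniform choice of outgoing edges are all assumptions made for this example; the article does not describe the study's actual sampling procedure.

```python
import random

# Toy knowledge graph as (head, relation, tail) triples; all names are made up.
TRIPLES = [
    ("alice", "born_in", "paris"),
    ("alice", "works_in", "lyon"),
    ("paris", "capital_of", "france"),
    ("lyon", "located_in", "france"),
    ("france", "part_of", "europe"),
]

def build_adjacency(triples):
    """Map each head entity to its outgoing (relation, tail) edges."""
    adjacency = {}
    for head, relation, tail in triples:
        adjacency.setdefault(head, []).append((relation, tail))
    return adjacency

def sample_walk(adjacency, start, max_hops, rng):
    """Follow uniformly random outgoing edges from `start` for up to `max_hops` hops."""
    tokens = [start]
    node = start
    for _ in range(max_hops):
        edges = adjacency.get(node)
        if not edges:  # dead end: the node has no outgoing edges
            break
        relation, node = rng.choice(edges)
        tokens.extend([relation, node])
    return tokens

rng = random.Random(0)  # fixed seed so the sampled corpus is reproducible
adjacency = build_adjacency(TRIPLES)
# One walk per triple head; each walk becomes one line of pre-training text.
corpus = [" ".join(sample_walk(adjacency, head, 3, rng)) for head, _, _ in TRIPLES]
```

A model trained with next-token prediction on such lines sees multi-hop chains like entity, relation, entity, relation, entity, which is the kind of path it could later reuse to connect two entities that never appear together in a single triple.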

For mathematical reasoning, the study addresses math word problems (MWPs). Rather than pre-training an LM from scratch, the approach uses existing CoT training data to construct random walk reasoning paths. The LM is then further trained with next-token prediction along these paths. The research team reports that, across multiple MWP datasets, this approach consistently outperforms conventional supervised fine-tuning.
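The article does not say how the random walk paths are built from CoT data, so the following is only one possible sketch. It assumes each CoT step carries a hypothetical `state` label describing what has been established so far, and that a walk may continue with any step that follows the same state in any chain. The data, the labels, and the function names are invented for illustration.

```python
import random

# Hypothetical CoT chains: lists of (state, step_text) pairs. The `state`
# labels are an assumption of this sketch, not something given in the article.
COT_CHAINS = [
    [("given", "There are 3 boxes with 4 pens each."),
     ("total_known", "So there are 3 * 4 = 12 pens in total."),
     ("answer", "After giving away 2, 12 - 2 = 10 pens remain.")],
    [("given", "Each of 5 shelves holds 2 books."),
     ("total_known", "So there are 5 * 2 = 10 books in total."),
     ("answer", "After lending 4, 10 - 4 = 6 books remain.")],
]

def build_state_graph(chains):
    """Map each state to the (next_state, next_step_text) transitions seen in any chain."""
    graph = {}
    for chain in chains:
        for (state, _), (next_state, next_text) in zip(chain, chain[1:]):
            graph.setdefault(state, []).append((next_state, next_text))
    return graph

def sample_reasoning_path(chains, graph, max_steps, rng):
    """Start at the first step of a random chain, then walk the shared state graph."""
    state, text = rng.choice(chains)[0]
    steps = [text]
    for _ in range(max_steps):
        options = graph.get(state)
        if not options:  # terminal state: no recorded continuation
            break
        state, text = rng.choice(options)
        steps.append(text)
    return steps

rng = random.Random(0)
graph = build_state_graph(COT_CHAINS)
# Each sampled path would be one training sequence for next-token prediction.
training_text = " ".join(sample_reasoning_path(COT_CHAINS, graph, 4, rng))
```

Because the walk can hop between chains that share a state, the sampled sequences mix steps from different solutions. A practical version would need some way to keep the mixed steps consistent with each other; this sketch ignores that.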

Conclusion:

Understanding how language models reason, particularly through the aggregation of reasoning paths, has significant implications for the market. It points to potential performance gains in industries that rely on natural language processing, from customer service chatbots to data analysis tools. This deeper understanding could lead to more capable applications and drive innovation and competitiveness.