TL;DR:

- Large language models (LLMs) face challenges due to their immense size, demanding significant GPU memory and computational resources.

- TC-FPx, a comprehensive GPU kernel design scheme, addresses memory access and runtime issues associated with weight de-quantization.

- FP6-LLM, the result of TC-FPx integration, significantly improves LLM performance, enabling efficient inference with reduced memory requirements.

- FP6-LLM allows single-GPU inference of complex models, showcasing impressive improvements in throughput.

- This breakthrough presents new opportunities for the application of LLMs in various business domains.

Main AI News:

In the sphere of computational linguistics and artificial intelligence, the perpetual quest to optimize the efficiency of large language models (LLMs) continues unabated. These LLMs, celebrated for their multifaceted language-related capabilities, grapple with formidable challenges owing to their colossal scale. Case in point, GPT-3, with its staggering 175 billion parameters, imposes substantial demands on GPU memory, underscoring the imperative for more memory-efficient and high-performance computational methods.

The primary conundrum in deploying large language models lies in their sheer magnitude, necessitating copious GPU memory and computational resources. The memory wall exacerbates this challenge during token generation, where the speed of model inference hinges primarily on the time required to retrieve model weights from GPU DRAM. Therefore, the pressing need for efficient methodologies to alleviate memory and computational burdens while preserving model prowess remains paramount.

Traditional approaches to large language models frequently rely on quantization techniques, which employ fewer bits to represent each model weight, yielding a more streamlined representation. However, these methods have their limitations. For instance, while they reduce the model size, 4-bit and 8-bit quantizations inadequately support linear layer execution on modern GPUs, potentially compromising either model quality or inference speed.

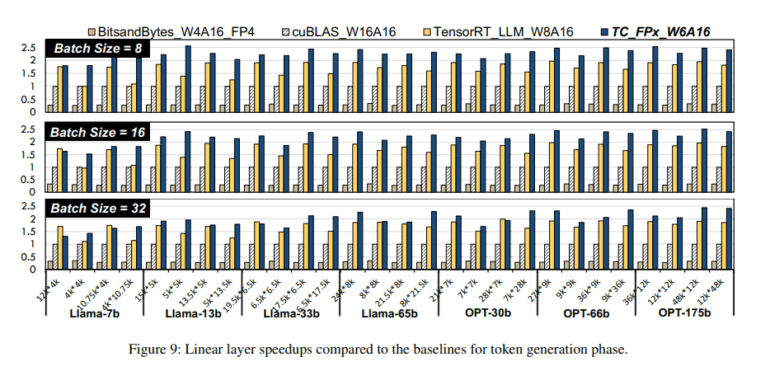

Enter TC-FPx, a groundbreaking innovation stemming from a collaboration between Microsoft, the University of Sydney, and Rutgers University. TC-FPx introduces a comprehensive GPU kernel design scheme with unified Tensor Core support for varying quantization bit-widths, encompassing 6-bit, 5-bit, and 3-bit. This pioneering design confronts the challenges of unwieldy memory access and excessive runtime overhead associated with weight de-quantization in large language models head-on. By seamlessly integrating TC-FPx into existing inference systems, they have given birth to FP6-LLM, a revolutionary end-to-end support system for quantized LLM inference.

TC-FPx employs ahead-of-time bit-level pre-packing and SIMT-efficient GPU runtime to optimize memory access and minimize the runtime overhead of weight de-quantization. This approach brings about a significant enhancement in the performance of large language models, enabling more efficient inference with reduced memory requirements. The research team’s demonstration underscores the prowess of FP6-LLM, allowing the inference of models like LLaMA-70b using just a single GPU, achieving substantially higher normalized inference throughput than the FP16 baseline.

The performance evaluation of FP6-LLM stands as a testament to its remarkable advancements in normalized inference throughput, outshining the FP16 baseline by a substantial margin. FP6-LLM’s breakthrough empowers the inference of intricate models with a single GPU, presenting a notable stride forward in the domain and opening up fresh possibilities for the application of large language models across diverse domains.

Conclusion:

The introduction of FP6-LLM, driven by TC-FPx innovation, heralds a promising era for large language models in the business landscape. By optimizing memory access and reducing runtime overhead, FP6-LLM empowers businesses to efficiently harness the capabilities of LLMs, potentially revolutionizing industries with its enhanced performance and cost-effectiveness.