- MIT CSAIL introduces MAIA (Multimodal Automated Interpretability Agent) to understand neural models, particularly in computer vision.

- MAIA uses a pre-trained vision-language model to automate interpretability tasks such as feature interpretation and bias identification.

- Traditional methods rely on manual effort, whereas MAIA pairs a modular framework with a pre-trained model and a set of experiment tools.

- MAIA outperforms baseline methods and approaches the quality of human expert labels when describing neural model behavior.

Main AI News:

MIT CSAIL researchers have unveiled MAIA (Multimodal Automated Interpretability Agent), a system designed to help explain how neural models work, particularly in computer vision. These models are complex, and understanding them is necessary to improve accuracy, ensure robustness, and pinpoint biases. Traditionally, that understanding has come from manual processes such as exploratory data analysis and controlled experimentation, which are both laborious and costly. MAIA instead uses neural networks to automate interpretability tasks, including feature interpretation and failure mode discovery.

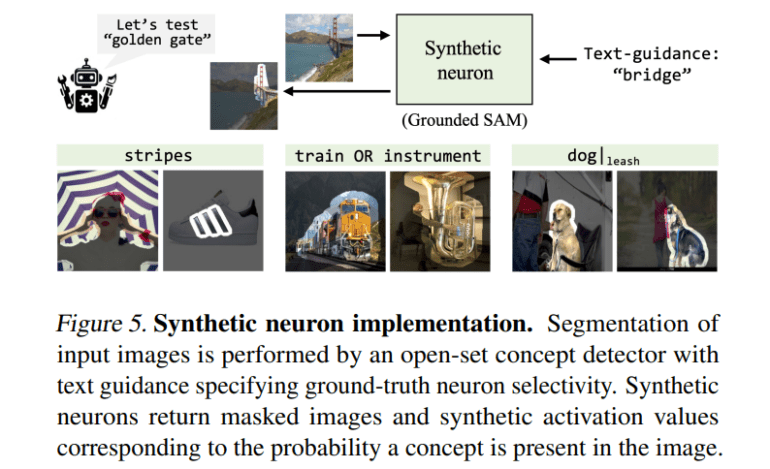

Existing methods for model interpretability often fall short in scalability and accuracy, so in practice they serve as hypothesis generators rather than sources of actionable insight. MAIA takes a different approach, using a modular framework to automate interpretability tasks. At its core is a pre-trained vision-language model, supported by a suite of tools that let the system run experiments on neural models iteratively. These tools cover synthesizing and editing inputs, computing exemplars from real-world datasets, and summarizing experimental findings.

MAIA's ability to explain neural model behavior has been measured against both baseline methods and human expert labels. Its framework is built for running experiments on neural systems, with interpretability tasks expressed as Python programs. Because it builds on a pre-trained multimodal model, MAIA can analyze images directly and design experiments that answer user queries about model behavior. The System class within MAIA’s API exposes individual components of a model so they can be interpreted and experimented on, while the Tools class provides a suite of functions for constructing modular programs that test hypotheses about system behavior.
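To make the System and Tools split more concrete, here is a minimal Python sketch of how such an interface might be organized. It is an illustration under stated assumptions, not MAIA's actual API: the method names (`activations`, `dataset_exemplars`, `edit_image`, `log_experiment`), the toy neuron, and the dictionary-based "image" format are all hypothetical stand-ins chosen so the example runs without a real vision model.

```python
from typing import Callable, Dict, List, Tuple

# Toy stand-in for an image: concept name -> strength in [0, 1].
# (Assumption for illustration; a real system would operate on pixels.)
Image = Dict[str, float]


class System:
    """Wraps one component of the model under study (here, a single 'neuron')."""

    def __init__(self, neuron_fn: Callable[[Image], float]):
        self._neuron_fn = neuron_fn

    def activations(self, images: List[Image]) -> List[float]:
        # Return the component's response to each input.
        return [self._neuron_fn(img) for img in images]


class Tools:
    """Hypothetical helpers for building experiments on a System."""

    def __init__(self, system: System, dataset: List[Image]):
        self.system = system
        self.dataset = dataset
        self.log: List[str] = []

    def dataset_exemplars(self, k: int) -> List[Tuple[Image, float]]:
        # The k dataset inputs that activate the component most strongly.
        acts = self.system.activations(self.dataset)
        ranked = sorted(zip(self.dataset, acts), key=lambda p: p[1], reverse=True)
        return ranked[:k]

    def edit_image(self, image: Image, concept: str, value: float) -> Image:
        # Return a copy of the input with one concept changed.
        edited = dict(image)
        edited[concept] = value
        return edited

    def log_experiment(self, note: str) -> None:
        # Record a finding so it can be summarized later.
        self.log.append(note)


def toy_neuron(img: Image) -> float:
    # Hypothetical unit: responds strongly to dogs, weakly to grass.
    return img.get("dog", 0.0) + 0.5 * img.get("grass", 0.0)


dataset = [
    {"dog": 1.0, "grass": 0.8},
    {"cat": 1.0, "sofa": 0.6},
    {"dog": 0.2, "grass": 1.0},
    {"car": 1.0},
]

system = System(toy_neuron)
tools = Tools(system, dataset)

# Step 1: look at the strongest exemplar to form a hypothesis ("dogs").
top_image, top_act = tools.dataset_exemplars(k=1)[0]

# Step 2: test the hypothesis by editing the dog out and re-measuring.
edited = tools.edit_image(top_image, "dog", 0.0)
before, after = system.activations([top_image, edited])
tools.log_experiment(
    f"Removing 'dog' changed activation from {before:.2f} to {after:.2f}"
)
```

The sketch follows the pattern the article describes: find exemplars, form a hypothesis, edit an input to test it, and log the result. In the real system, the vision-language model would decide which experiment to run next rather than following a fixed script.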

In evaluations on the black-box neuron description task, MAIA generated predictive explanations of vision system components; the researchers also show it pinpointing spurious features and autonomously identifying biases in classifiers. It surpasses baseline methods and approaches human expert labels in the quality of its descriptions of both real and synthetic neurons. The results suggest that automated interpretability agents can help explain the inner workings of neural models, in computer vision and potentially beyond.
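One way to read "predictive" here is that a good description should let you predict which inputs will activate the component. The Python sketch below illustrates that idea with a simple score: the mean activation on inputs that match a description minus the mean activation on neutral inputs. The `predictiveness` function, the toy neuron, and the example inputs are all assumptions made for illustration; this is not the metric or data used in the MAIA evaluation.

```python
from statistics import mean
from typing import Callable, Dict, List

# Toy stand-in for an image: concept name -> strength in [0, 1].
Image = Dict[str, float]


def predictiveness(
    neuron: Callable[[Image], float],
    positives: List[Image],
    neutrals: List[Image],
) -> float:
    """Mean activation on inputs matching a description minus mean on neutral inputs.

    A higher score means the description better predicts what activates the unit.
    """
    pos = mean([neuron(img) for img in positives])
    neu = mean([neuron(img) for img in neutrals])
    return pos - neu


def stripe_neuron(img: Image) -> float:
    # Hypothetical unit that responds only to striped textures.
    return img.get("stripes", 0.0)


# Inputs that two candidate descriptions predict should activate the unit.
striped_inputs = [{"stripes": 1.0}, {"stripes": 0.8}]  # "striped textures"
animal_inputs = [{"animal": 1.0, "stripes": 0.5}, {"animal": 1.0}]  # "animals"
neutral_inputs = [{"sky": 1.0}, {"road": 1.0}]

score_striped = predictiveness(stripe_neuron, striped_inputs, neutral_inputs)
score_animal = predictiveness(stripe_neuron, animal_inputs, neutral_inputs)

# The more specific description separates activating from neutral inputs better.
best = "striped textures" if score_striped > score_animal else "animals"
print(f"Better description: {best}")
```

Under this kind of score, descriptions from an automated agent, a baseline method, and a human expert can all be compared on the same footing.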

Conclusion:

MAIA’s introduction marks a notable advance in neural model comprehension, particularly in computer vision. By automating interpretability tasks and outperforming baseline methods, MAIA could support the development of more accurate, more robust, and less biased neural models, with potential applications in industries ranging from healthcare to autonomous vehicles. Businesses in these sectors should monitor developments closely and consider whether tools like MAIA fit into their workflows as AI adoption grows.