TL;DR:

- OLMo (Open Language Model) by AI2 promotes transparency in AI by providing access to model architecture, training data, and methodology.

- It offers a comprehensive framework for language model creation, analysis, and improvement.

- Built on AI2’s Dolma dataset, a sizable open corpus, OLMo enables robust model pretraining.

- OLMo facilitates further research by offering resources for understanding and replicating the model’s training process.

- Extensive evaluation tools ensure rigorous assessment of the model’s performance, enhancing scientific understanding.

- Available in multiple versions, OLMo’s scalability accommodates diverse applications, from basic language understanding to complex generative tasks.

- Meticulous evaluation, combining online and offline phases, demonstrates OLMo’s prowess in commonsense reasoning and language modeling.

Main AI News:

In the realm of Artificial Intelligence (AI), the advent of Large Language Models (LLMs) has marked a significant leap forward in various tasks such as text generation, language translation, and code completion. However, the most advanced models often remain shrouded in secrecy, with limited access to crucial details like architecture, training data, and methodology.

Transparency is paramount for understanding, evaluating, and improving these models, particularly in identifying biases and potential risks. Addressing this challenge, the Allen Institute for AI (AI2) has unveiled OLMo (Open Language Model), a framework designed to foster transparency in Natural Language Processing.

OLMo is meant to be a cornerstone of the push for openness in how language model technology evolves. It offers not just a language model but a comprehensive framework for model creation, analysis, and enhancement. Beyond granting access to model weights and inference capabilities, OLMo provides tools for training, evaluation, and documentation, promoting a culture of openness and collaboration.

Key Features of OLMo:

- Built on Dolma: Using AI2’s Dolma dataset, a sizable open corpus, OLMo enables robust model pretraining.

- Resource Accessibility: OLMo provides all necessary resources to understand and replicate the model’s training process, fostering openness and facilitating further research.

- Evaluation Tools: Extensive evaluation tools allow for rigorous assessment of the model’s performance, enhancing scientific understanding.

OLMo is available in various versions, including 1B and 7B parameter models, with a larger 65B version in development. Its scalability accommodates diverse applications, from basic language understanding to complex generative tasks requiring nuanced contextual knowledge.

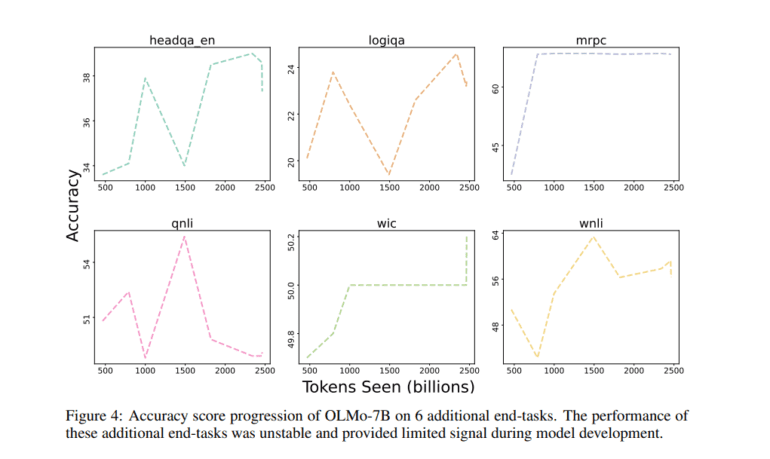

The evaluation process for OLMo has been meticulous, combining both online and offline phases. Offline evaluation, utilizing the Catwalk framework, includes intrinsic and downstream language modeling assessments. Online assessments during training inform decisions on initialization, architecture, and other aspects.

Zero-shot evaluation on nine core tasks demonstrates OLMo’s strength in commonsense reasoning. For intrinsic language modeling evaluation, OLMo is measured by perplexity on Paloma’s extensive benchmark, and OLMo-7B is the largest model included in these perplexity assessments, giving a broad picture of its language modeling capabilities.
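For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood a model assigns to each token given the tokens before it; lower is better. The sketch below computes it from a list of per-token log-probabilities. It is a minimal illustration, not Paloma’s or Catwalk’s code: the function name `perplexity` and the input values are hypothetical, and Paloma’s own aggregation (for example, how it averages across domains) may differ.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity from per-token natural-log probabilities log p(x_i | x_<i).

    Assumption: the log-probabilities come from a causal language model
    scoring one sequence; this is a generic definition, not Paloma's code.
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    # Average negative log-likelihood per token.
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    # Perplexity is exp of the mean negative log-likelihood.
    return math.exp(mean_nll)


# Hypothetical log-probabilities for a 4-token sequence (not real OLMo outputs).
logprobs = [math.log(0.5), math.log(0.25), math.log(0.5), math.log(0.125)]
print(round(perplexity(logprobs), 4))  # prints 3.3636, i.e. 2 ** 1.75
```

Intuitively, a perplexity of about 3.36 means the model is, on average, as uncertain as if it were choosing uniformly among roughly 3.36 tokens at each step.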

Conclusion:

The introduction of OLMo represents a significant step towards fostering transparency and collaboration in the Natural Language Processing field. By providing access to critical model details and evaluation tools, OLMo empowers researchers to advance AI technologies ethically and responsibly, driving innovation and trust in the industry.