- Chain-of-Thought (CoT) reasoning improves the performance of language models (LMs) on complex reasoning tasks.

- Large language models (LLMs) show logical reasoning capabilities even though they were not explicitly designed for it.

- “Iteration heads” in transformers facilitate multi-step reasoning.

- Controlled tasks like copying and polynomial iterations aid in understanding CoT reasoning.

- Transformers develop internal reasoning circuits influenced by training data.

- Executing iterative algorithms in LMs involves processing one step at a time, in sequence.

- CoT reasoning mechanisms emerge within transformer architectures.

- Data curation boosts skill transfer and learning efficiency in LMs.

- Pretraining on simpler tasks followed by fine-tuning on complex ones accelerates convergence.
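The curriculum idea in the last bullet can be sketched as a two-phase data schedule. This is a minimal illustration, not the researchers' training pipeline: the task choice (copying as the simpler task, running parity as a stand-in for a harder iterative task), the sequence length, and the example counts are all assumptions made here.

```python
import random


def copy_example(length, rng):
    # Simple task: the chain of thought just repeats the input bits.
    xs = [rng.randrange(2) for _ in range(length)]
    return xs, list(xs)


def parity_example(length, rng):
    # Harder stand-in task (assumed): each CoT token is the running XOR so far.
    xs = [rng.randrange(2) for _ in range(length)]
    states, s = [], 0
    for x in xs:
        s ^= x
        states.append(s)
    return xs, states


def two_phase_schedule(n_pretrain, n_finetune, length=8, seed=0):
    # Phase 1 ("pretraining"): simple examples only.
    # Phase 2 ("fine-tuning"): harder examples only, seen afterwards.
    rng = random.Random(seed)
    phase1 = [("copy", *copy_example(length, rng)) for _ in range(n_pretrain)]
    phase2 = [("parity", *parity_example(length, rng)) for _ in range(n_finetune)]
    return phase1 + phase2
```

A training loop would consume `two_phase_schedule(...)` in order, so the model sees every copying example before any parity example; mixing the two phases instead would be a different curation strategy.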

Main AI News:

In the fast-moving field of language models, Chain-of-Thought (CoT) reasoning stands out as a pivotal enhancement, enabling models to tackle complex reasoning tasks. Although they are trained only for next-token prediction, language models (LMs) have moved beyond their initial design and can articulate the intermediate steps of their reasoning. This semblance of logical reasoning, though never an explicit training objective, underscores how adaptable LMs are. Studies indicate that while LMs often fail when they must produce an answer in a single token prediction, they perform far better when they can generate a sequence of intermediate tokens, effectively using that sequence as a computational tape for working through the problem.
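The "computational tape" idea can be made concrete by serializing the same problem two ways. In this sketch (an illustration with assumed token names, not the paper's exact data format), an answer-only sequence asks for the final state in one prediction, while a CoT sequence writes out every intermediate state, so each new token is one application of the update rule `step` to the previous token and a single input token. The separators `"="` and `"->"` and the hypothetical `running_sum_mod10` rule are illustrative choices.

```python
def serialize(xs, step, s0=0, cot=True):
    """Build a training sequence for inputs xs under the rule s_t = step(s_{t-1}, x_t)."""
    states, s = [], s0
    for x in xs:
        s = step(s, x)
        states.append(s)
    if cot:
        # Chain of thought: every intermediate state is written onto the "tape".
        return list(xs) + ["->"] + states
    # Answer only: the final state must be produced in a single prediction.
    final = states[-1] if states else s0
    return list(xs) + ["="] + [final]


def running_sum_mod10(s, x):
    # Hypothetical update rule used only for this illustration.
    return (s + x) % 10


print(serialize([3, 4, 5], running_sum_mod10, cot=False))  # [3, 4, 5, '=', 2]
print(serialize([3, 4, 5], running_sum_mod10, cot=True))   # [3, 4, 5, '->', 3, 7, 2]
```

In the CoT form, predicting the token `7` only requires the previous state `3` and the input `4`; in the answer-only form, the single output token depends on all inputs at once.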

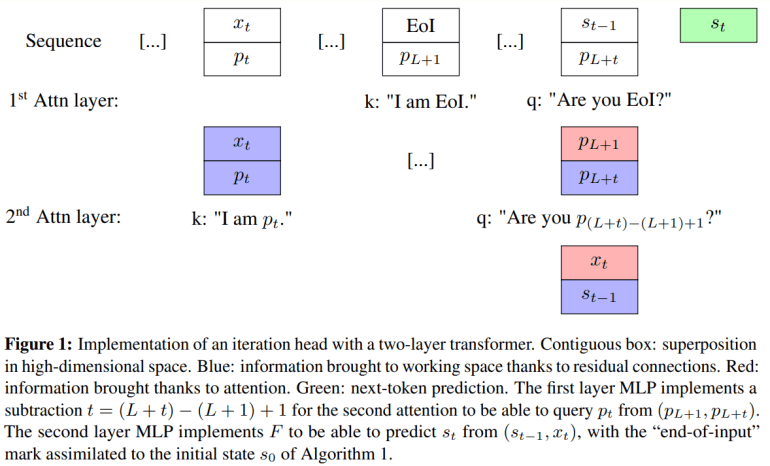

A consortium of researchers from FAIR, Meta AI, Datashape, INRIA, and other institutions investigates how CoT reasoning emerges in transformer architectures. They introduce “iteration heads,” specialized attention heads that are central to iterative reasoning, and trace how these heads develop and function inside the network. Their investigation shows how these heads enable transformers to solve problems through multi-step reasoning, focusing on simple, controlled tasks such as copying and polynomial iteration. Experiments show that these skills transfer across problems, suggesting that transformers develop internal reasoning circuits shaped by their training data, which helps explain the strong CoT capabilities observed in larger models.
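Both controlled tasks can be written as an iterative algorithm that updates a state with one input token at a time, `s_t = F(s_{t-1}, x_t)`, where the chain of thought is the sequence `s_1, ..., s_L`. The sketch below assumes this formulation; the polynomial `s^2 + x + 1` and the modulus `P_MOD = 7` are hypothetical choices for illustration, not values taken from the study.

```python
def iterate(xs, F, s0=0):
    """Return the chain of thought [s_1, ..., s_L] for s_t = F(s_{t-1}, x_t)."""
    states, s = [], s0
    for x in xs:
        s = F(s, x)
        states.append(s)
    return states


def copy_rule(s, x):
    # Copying ignores the previous state: s_t = x_t.
    return x


P_MOD = 7  # hypothetical prime modulus


def poly_rule(s, x):
    # Hypothetical polynomial iteration: s_t = (s_{t-1}^2 + x_t + 1) mod p.
    return (s * s + x + 1) % P_MOD
```

For example, `iterate([2, 5, 1], copy_rule)` returns `[2, 5, 1]`, and `iterate([2, 5, 1], poly_rule)` returns `[3, 1, 3]`. Copying needs only the current input token, whereas polynomial iteration also needs the previous state, which is why it makes a harder test of multi-step reasoning.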

Examining the Core: Deciphering the Mechanisms of Iterative Reasoning in Language Models

The study focuses on the mechanisms behind iterative reasoning in language models (LMs), particularly transformer architectures. The researchers propose a theoretical construct dubbed the “iteration head,” which describes how a two-layer transformer can solve iterative tasks through a specific arrangement of attention mechanisms. Experiments confirm that this construct emerges during training and that it holds up across different problems and model configurations. Ablation studies further reveal variations in the learned circuits, offering insight into the mechanisms that drive Chain-of-Thought (CoT) reasoning in transformers.
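To give a feel for what an iteration head does, here is a deliberately simplified hard-attention toy; it is not the learned two-layer circuit the researchers describe, which uses soft attention spread across layers. It assumes the sequence layout `x_1 ... x_L <EoI> s_1 ... s_L`, where the `<EoI>` end-of-input token name is an assumption. When producing `s_t`, the toy reads how far the current position is past `<EoI>`, uses that offset as a query matched against input positions to retrieve `x_t`, and combines it with the previous state stored at the current position. The helpers `hard_attend` and `generate_with_iteration_head` are hypothetical stand-ins.

```python
EOI = "<EoI>"  # assumed end-of-input marker


def hard_attend(query, keys, values):
    # Hard attention: return the value whose key is closest to the query
    # (score = negative distance, so an exact positional match wins).
    scores = [-abs(query - k) for k in keys]
    return values[scores.index(max(scores))]


def generate_with_iteration_head(xs, F, s0=0):
    """Toy decoder: emit s_1..s_L using one positional lookup per step."""
    seq = list(xs) + [EOI]
    eoi_pos = len(xs)                  # index of the end-of-input marker
    input_positions = list(range(len(xs)))
    for _ in xs:
        cur = len(seq) - 1             # current position: EoI, then s_1, s_2, ...
        # How far past EoI we are tells the head which step it is on.
        offset = cur - eoi_pos         # 0 when producing s_1, 1 for s_2, ...
        # Attend to the input position equal to that offset to fetch x_{offset+1}.
        x = hard_attend(offset, input_positions, xs)
        # The previous state is the token at the current position (s0 before s_1).
        prev = s0 if offset == 0 else seq[cur]
        seq.append(F(prev, x))
    return seq[eoi_pos + 1:]
```

With `F = lambda s, x: s ^ x` (running parity), the toy returns `[1, 1, 0, 1, 1]` for inputs `[1, 0, 1, 1, 0]`. The point of the design is that each step needs only one lookup into the input plus the immediately preceding token, which is the kind of locality that makes CoT tractable for a shallow transformer.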

Conclusion:

The insights from this analysis of iterative reasoning mechanisms in language models carry significant implications for the market. As businesses increasingly rely on sophisticated AI models for decision-making and problem-solving, understanding how these models acquire their capabilities becomes paramount. The emergence of Chain-of-Thought (CoT) reasoning highlights the adaptability and resilience of transformer architectures, pointing to potential avenues for improving performance and efficiency across industries. Moreover, careful data curation offers opportunities to optimize model training and accelerate innovation, helping organizations stay ahead in a rapidly evolving landscape of AI-driven solutions.