- UT Austin introduces PUTNAMBENCH, a new benchmark for neural theorem-provers.

- The benchmark uses problems from the William Lowell Putnam Mathematical Competition, known for its challenging college-level questions.

- PUTNAMBENCH includes 1,697 formalizations of 640 problems, available in Lean 4, Isabelle, and Coq.

- Evaluation results show current methods like GPT-4 and Sledgehammer struggle with the benchmark’s problems.

- The benchmark highlights challenges in synthesizing new lemmas and creating innovative proof strategies.

- PUTNAMBENCH’s multilingual approach ensures a thorough assessment across different theorem-proving environments.

Main AI News:

In the realm of artificial intelligence, automating mathematical reasoning has been a long-standing ambition. Prominent formal frameworks such as Lean 4, Isabelle, and Coq have significantly contributed to this field by enabling machine-verifiable proofs of complex mathematical theorems. To advance the development of neural theorem-provers, which aim to automate theorem proving, it is crucial to have robust benchmarks that test these systems rigorously.

Current benchmarks, including MINI F2F and FIMO, primarily address high-school-level mathematics, leaving a gap in evaluating neural theorem provers against more complex, undergraduate-level problems. Addressing this gap, researchers at the University of Texas at Austin have launched PUTNAMBENCH, a pioneering benchmark designed to assess neural theorem-provers with problems from the prestigious William Lowell Putnam Mathematical Competition. Known for its challenging college-level questions, the Putnam competition provides an ideal foundation for a rigorous benchmarking tool. PUTNAMBENCH features 1,697 formalizations of 640 problems, with resources available in Lean 4, Isabelle, and a notable subset in Coq. This multilingual framework ensures a thorough assessment across various theorem-proving environments.

The benchmark’s methodology involves meticulous formalizations of Putnam competition problems, ensuring thorough debugging and availability in multiple formal proof languages. The problems span various undergraduate mathematics topics such as algebra, analysis, number theory, and combinatorics, designed to evaluate both problem-solving skills and conceptual understanding. PUTNAMBENCH thus presents a significant challenge to neural theorem provers.

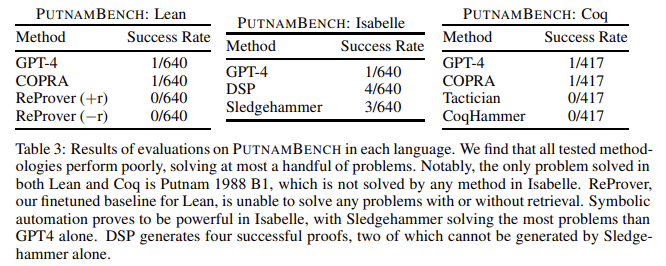

Evaluations using PUTNAMBENCH encompassed several neural and symbolic theorem-provers including Draft-Sketch-Prove, COPRA, GPT-4, Sledgehammer, and Coqhammer. Results indicated that current methods were only partially successful, with GPT-4 solving just one out of 640 problems in Lean 4 and Coq, and Sledgehammer addressing three out of 640 issues in Isabelle.

A notable challenge revealed by PUTNAMBENCH is the difficulty in synthesizing new lemmas and integrating them into complex proofs. Although current theorem provers can effectively handle standard proof steps well-represented in their training data, they struggle with generating innovative proof strategies. This highlights the necessity for advanced neural models capable of deeper mathematical reasoning and knowledge.

PUTNAMBENCH’s inclusion of multiple proof languages distinguishes it from earlier benchmarks. By covering Lean 4, Isabelle, and Coq, PUTNAMBENCH offers a comprehensive evaluation of theorem-proving methods, ensuring a detailed understanding of their strengths and limitations across different formal proof environments.

Conclusion:

PUTNAMBENCH represents a significant advancement in benchmarking neural theorem-provers by introducing more complex, undergraduate-level mathematical challenges. This development is likely to drive innovation in the field, as it reveals critical gaps in current AI capabilities and highlights the need for more sophisticated models. The inclusion of multiple formal proof languages also provides a more comprehensive evaluation, potentially influencing future research and development efforts. By addressing these advanced benchmarks, AI developers can better understand the limitations of existing systems and work towards creating more effective theorem-proving tools.