- Rutgers University introduces VCHAR, a novel AI framework for Complex Human Activity Recognition.

- VCHAR treats atomic activity outputs as distributions over specified intervals, eliminating the need for precise temporal labeling.

- The framework leverages generative methodologies to enhance interpretability and usability of activity classifications.

- VCHAR uses a variance-driven approach with Kullback-Leibler divergence for accurate recognition of activities in smart environments.

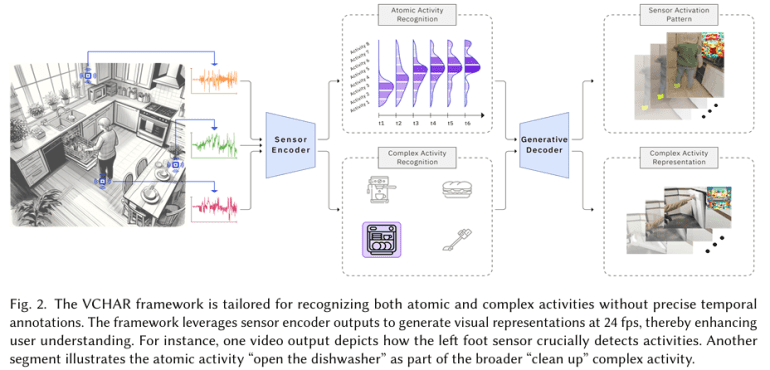

- Introduces a generative decoder framework transforming sensor-based outputs into visual narratives, integrating complex activities with sensor data.

- Demonstrates competitive performance on diverse datasets, emphasizing adaptability through pretrained models and one-shot tuning.

Main AI News:

The Variance-Driven Complex Human Activity Recognition (VCHAR) framework, developed by researchers at Rutgers University, rethinks Complex Human Activity Recognition (CHAR) by treating atomic activity outputs as distributions over specified intervals. This approach removes the need for precise temporal labeling, a common bottleneck in ubiquitous computing applications such as smart environments. By leveraging generative methodologies, VCHAR explains its complex activity classifications through video-based outputs, making them accessible to users without deep machine learning expertise.

Key to VCHAR’s methodology is its variance-driven strategy utilizing the Kullback-Leibler divergence to approximate distributions of atomic activity outputs within defined time intervals. This method enables accurate recognition of critical atomic activities while accommodating transient states and irrelevant data, thereby improving detection rates in the absence of detailed labeling.
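As a rough illustration of the interval-level idea described above, the following minimal sketch averages per-frame probabilities over atomic activities into one distribution for the interval and compares it with an interval-level target using Kullback-Leibler divergence. The function names, the averaging step, the direction of the divergence, and the example numbers are all assumptions made for illustration; they are not taken from VCHAR's implementation.

```python
import math

def interval_distribution(frame_probs):
    """Average per-frame probability vectors into one interval-level distribution."""
    n_frames = len(frame_probs)
    n_classes = len(frame_probs[0])
    return [sum(frame[c] for frame in frame_probs) / n_frames
            for c in range(n_classes)]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / max(qi, eps))
               for pi, qi in zip(p, q) if pi > 0)

# Hypothetical interval: 4 frames, 3 atomic activities (e.g. walk, sit, transient).
frame_probs = [
    [0.7, 0.2, 0.1],
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.8, 0.1],
]

# Assumed interval-level label: only the mix of activities in the interval is
# known, not the frame at which each one starts or ends.
target = [0.4, 0.5, 0.1]

predicted = interval_distribution(frame_probs)
loss = kl_divergence(target, predicted)
```

Because the loss depends only on the interval-level distribution, the frames can be reordered without changing it, which is one way to see why frame-by-frame temporal labels are not required in this kind of setup.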

Moreover, VCHAR introduces a novel generative decoder framework that transforms sensor-based model outputs into cohesive visual representations, integrating complex and atomic activities with relevant sensor data. This includes the use of a Language Model (LM) agent for data organization and a Vision-Language Model (VLM) for generating comprehensive visual narratives. The framework’s pretrained “sensor-based foundation model” and “one-shot tuning strategy” further enhance adaptability to specific scenarios, demonstrated through competitive performance on publicly available datasets.

By synthesizing comprehensive visual narratives, VCHAR not only enhances interpretability and usability of CHAR systems but also facilitates communication of findings to stakeholders without technical backgrounds. This capability bridges the gap between raw sensor data and actionable insights, positioning VCHAR as a promising solution for real-world smart environment applications requiring precise and contextually relevant activity recognition.

Conclusion:

The introduction of VCHAR marks a notable advance in Complex Human Activity Recognition, addressing a key challenge in smart environments by removing the dependence on precise temporal labeling. The approach not only improves the accuracy of activity detection but also makes AI-driven insights more accessible and interpretable. VCHAR’s adaptability and competitive performance underscore its potential across diverse applications in healthcare, elderly care, surveillance, and emergency response.