TL;DR:

- VidChapters-7M is a dataset of 7 million user-annotated chapters from 817,000 videos, built to address the scarcity of public data for video organization research.

- It defines three tasks: Video Chapter Generation, Video Chapter Generation with Predefined Boundaries, and Video Chapter Grounding, all aimed at making long videos easier to navigate.

- In evaluation, the dataset improves results on dense video captioning tasks, advancing the state of the art in both zero-shot and fine-tuning scenarios.

- VidChapters-7M is derived from YT-Temporal-180M and may carry that source's biases in video category distribution, so it should be used responsibly.

- Models trained on this dataset could inadvertently reflect biases from platforms like YouTube, necessitating ethical considerations in deployment and analysis.

Main AI News:

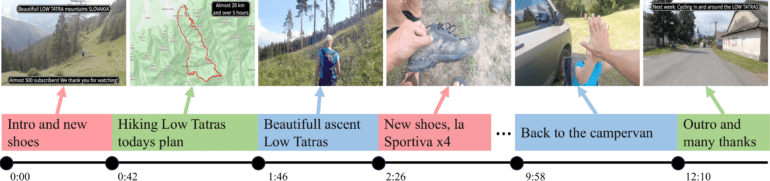

Users who want a specific piece of information inside a lengthy video depend on that video being well organized. Research on video organization, however, has long been held back by the scarcity of publicly available data. VidChapters-7M is a dataset built to fill that gap: it comprises 7 million chapters drawn from 817,000 videos, or roughly eight to nine chapters per video on average.

VidChapters-7M: The Data Revolution

VidChapters-7M was built to close the data gap in video organization research. Unlike previous efforts that required labor-intensive manual annotation, the dataset reuses chapters that users have already annotated on online videos, so no additional labeling is needed and the collection scales to hundreds of thousands of videos. Researchers now have a large public resource for studying video chaptering.
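To make the idea of reusing user-annotated chapters concrete, here is a minimal sketch of how timestamped lines could be turned into chapter segments. It rests on assumptions the article does not state: that chapters appear as lines such as `0:00 Intro` in a text field attached to the video, and that each chapter ends where the next one begins. The function name `parse_chapters` and the `duration` parameter are hypothetical and are not taken from the dataset's actual pipeline.

```python
import re
from typing import List, Tuple

# Assumed line format: "[H:]MM:SS Title", e.g. "0:00 Intro" or "1:02:30 Outro".
_LINE = re.compile(r"^\s*(?:(\d+):)?(\d{1,2}):(\d{2})\s+(.+?)\s*$")


def parse_chapters(description: str, duration: float) -> List[Tuple[float, float, str]]:
    """Return (start, end, title) triples parsed from timestamped lines.

    Hypothetical helper: a chapter is assumed to end at the next chapter's
    start, and the last chapter is assumed to end at the video duration.
    """
    starts = []
    for line in description.splitlines():
        match = _LINE.match(line)
        if match is None:
            continue  # skip lines that are not timestamped chapter entries
        hours, minutes, seconds, title = match.groups()
        start = int(hours or 0) * 3600 + int(minutes) * 60 + int(seconds)
        starts.append((float(start), title))

    chapters = []
    for i, (start, title) in enumerate(starts):
        end = starts[i + 1][0] if i + 1 < len(starts) else duration
        chapters.append((start, end, title))
    return chapters


example = "Welcome to the channel!\n0:00 Intro\n1:30 Ingredients\n12:45 Cooking\n"
parsed = parse_chapters(example, duration=900.0)
```

A real collection pipeline would also need to handle malformed timestamps, non-monotonic orderings, and other formats, all of which this sketch ignores.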

The Three Pioneering Tasks

VidChapters-7M defines three tasks, each covering a different part of the video organization problem:

- Video Chapter Generation: This task involves the temporal division of videos into segments, each accompanied by a descriptive title. It aims to enhance video accessibility by providing users with a clear structure for navigating content.

- Video Chapter Generation with Predefined Boundaries: Here, the segment boundaries are given as annotations and the model only has to generate a title for each segment. Because segmentation is already solved, this task isolates the title-generation part of the chaptering problem.

- Video Chapter Grounding: This task requires localizing a chapter’s temporal boundaries based on its annotated title. It ensures that users can seamlessly jump to the specific content they’re interested in.
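The three tasks differ mainly in what is given as input and what must be predicted. The sketch below illustrates that split on one annotated video. The `Chapter` class and the three helper functions are hypothetical names introduced for illustration only; they are not the dataset's actual schema or API, and the video itself (frames, speech) is left out.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Chapter:
    start: float  # chapter start time in seconds
    end: float    # chapter end time in seconds
    title: str    # chapter title


def generation_target(chapters: List[Chapter]) -> List[Chapter]:
    """Chapter generation: nothing but the video is given; predict boundaries and titles."""
    return list(chapters)


def titling_example(chapters: List[Chapter]) -> Tuple[List[Tuple[float, float]], List[str]]:
    """Generation with predefined boundaries: boundaries are input, titles are the target."""
    given_boundaries = [(c.start, c.end) for c in chapters]
    target_titles = [c.title for c in chapters]
    return given_boundaries, target_titles


def grounding_examples(chapters: List[Chapter]) -> List[Tuple[str, Tuple[float, float]]]:
    """Chapter grounding: a title is input, that chapter's time span is the target."""
    return [(c.title, (c.start, c.end)) for c in chapters]


video_chapters = [
    Chapter(0.0, 45.0, "Intro"),
    Chapter(45.0, 310.0, "Ingredients"),
    Chapter(310.0, 600.0, "Cooking"),
]

boundaries, titles = titling_example(video_chapters)
queries = grounding_examples(video_chapters)
```

Seen this way, the second and third tasks are the two halves of the first: one fixes the boundaries and asks for titles, the other fixes a title and asks for its boundaries.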

The Path to Excellence

To benchmark these tasks, the evaluation covers both simple baseline approaches and state-of-the-art video-language models. The dataset also proves useful beyond chaptering itself, improving results on dense video captioning. In both zero-shot and fine-tuning scenarios, it advances the state of the art on benchmark datasets such as YouCook2 and ViTT.

Beyond the Horizon

VidChapters-7M is derived from YT-Temporal-180M, which brings limitations associated with biases in video category distribution. As video chapter generation models advance, it is also important to recognize their potential societal impacts, especially in applications like video surveillance. Models trained on VidChapters-7M may inadvertently inherit biases present in videos from platforms like YouTube, so care is needed when deploying, analyzing, or building upon these models.

Conclusion:

VidChapters-7M gives video organization research a large, scalable source of training and evaluation data for segmenting lengthy videos into chapters. Its three tasks and its benefit to downstream applications such as dense video captioning show its potential to change how users interact with video content. At the same time, the biases inherited from its source data mean that work built on it should keep both progress and ethical use in view.