TL;DR:

- Vision and language research progresses, linking images and captions.

- Localized Narratives (ImLNs) use speech and cursor movement for visual grounding.

- ImLNs extended to videos through meticulous character-focused narratives.

- Videos offer complex interactions, necessitating refined annotation techniques.

- Video Localized Narratives (VidLNs) capture intricate scenarios with detailed annotations.

- VidLNs dataset empowers Video Narrative Grounding (VNG) and Video Question Answering (VideoQA).

- VNG challenge demands precise localization of nouns in videos.

- The proposed approach showcases progress, addressing intricate challenges.

Main AI News:

In the rapidly evolving landscape of vision and language research, recent strides have been made in connecting static images with corresponding captions. These datasets have ventured into associating specific words within captions with distinct regions in images, employing a range of methodologies. The latest innovation, Localized Narratives (ImLNs), takes a unique approach by having annotators verbally describe images while moving their mouse cursor across the mentioned regions. This dual-action process mimics natural communication, offering extensive visual grounding for each word. Yet, it’s crucial to acknowledge that still images freeze a solitary moment in time. The allure intensifies when considering video annotation, as videos unfold complete narratives, portraying dynamic interactions among multiple entities and objects.

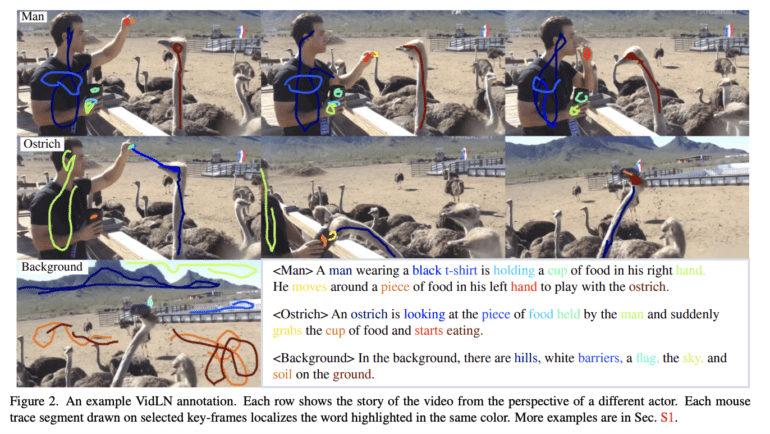

In response to the intricate and time-intensive task, an elevated annotation strategy has emerged, extending ImLNs to videos. This refined protocol empowers annotators to construct a video’s narrative within a controlled environment. The process initiates with a meticulous observation of the video, identifying key characters (like “man” or “ostrich”), and pinpointing pivotal key frames that encapsulate significant moments for each character.

Following this, individual character narratives are meticulously woven. Annotators verbalize the character’s involvement across various events, concurrently guiding the cursor over keyframes to accentuate pertinent actions and objects. These spoken depictions encompass not only the character’s name and attributes but also the actions they undertake, such as interactions with fellow characters (“playing with the ostrich”) and inanimate objects (“grabbing the cup of food”). To provide comprehensive context, a separate phase includes a brief background description.

By employing key frames, the temporal constraints are alleviated, and disentangling complex scenarios becomes feasible. This disentanglement aids in portraying intricate events involving multiple characters interacting amongst themselves and with numerous passive objects. Similar to ImLN, this protocol harnesses mouse trace segments to localize each word. The study further integrates several refinements to ensure precise localizations, surpassing the achievements of prior endeavors.

Researchers have applied this methodology to various datasets, introducing Video Localized Narratives (VidLNs). These videos intricately capture scenarios involving interactions among diverse characters and inanimate objects, leading to captivating narratives illuminated by meticulous annotations.

The depth of the VidLNs dataset establishes a robust groundwork for diverse tasks, including Video Narrative Grounding (VNG) and Video Question Answering (VideoQA). The pioneering VNG challenge necessitates a technique that can pinpoint nouns in an input narrative by generating segmentation masks on video frames. This task presents a formidable test, as the text often features identical nouns requiring contextual cues for disambiguation. While these benchmarks remain intricate challenges that are far from fully resolved, the proposed approach signifies meaningful strides in the right direction (for more details, refer to the published paper).

Conclusion:

The introduction of VidLNs marks a significant advancement in the convergence of vision and language. By extending the innovative ImLNs approach to videos, a comprehensive and refined annotation protocol emerges. This breakthrough not only enriches video descriptions but also enhances the grounding of words in complex narratives. The VidLNs dataset forms a solid foundation for business opportunities in Video Narrative Grounding (VNG) and Video Question Answering (VideoQA) applications, potentially revolutionizing how businesses interact with and utilize video content for enhanced communication and engagement.