- VisionGPT-3D emerges from collaborative efforts of leading institutions to merge cutting-edge vision models.

- The framework seamlessly integrates state-of-the-art models like SAM, YOLO, and DINO for streamlined model selection and refined outcomes.

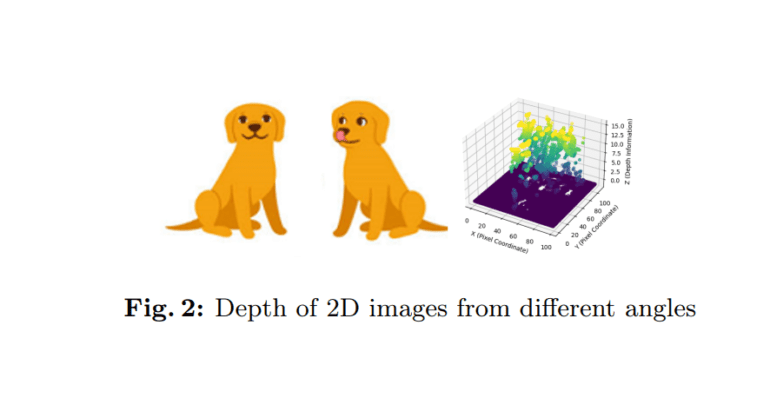

- VisionGPT-3D focuses on reconstructing 3D imagery from 2D representations through methodologies like multi-view stereo and depth estimation.

- Critical steps involve generating depth maps, transforming them into point clouds, and crafting meshes using advanced algorithms.

- Object segmentation within depth maps and rigorous validation protocols ensure the accuracy and fidelity of generated visuals.

Main AI News:

The convergence of textual and visual realms has revolutionized daily operations, amplifying capabilities from image and video generation to the identification of intricate components within. While earlier computer vision frameworks primarily targeted object recognition and categorization, recent strides, exemplified by OpenAI GPT-4, have forged connections between natural language processing and visual understanding. Despite these advancements, the translation of textual prompts into vibrant visual landscapes poses a persistent challenge for artificial intelligence. Notwithstanding the commendable milestones achieved by models like GPT-4 and counterparts such as SORA, the ever-evolving panorama of multimodal computer vision beckons forth a realm ripe with possibilities for innovation and finesse, particularly in the realm of generating three-dimensional visual constructs from two-dimensional imagery.

Unveiling VisionGPT-3D: A Synthesis of Cutting-Edge Vision Models

In a collaborative effort spanning institutions including Stanford University, Seeking AI, University of California, Los Angeles, Harvard University, Peking University, and the University of Washington, Seattle, researchers have unveiled VisionGPT-3D. This groundbreaking framework stands as a testament to the amalgamation of state-of-the-art vision models, including SAM, YOLO, and DINO, seamlessly weaving their capabilities to streamline model selection and refine outcomes across a spectrum of multimodal inputs. At its core, VisionGPT-3D is tailored for tasks such as the reconstruction of three-dimensional imagery from two-dimensional representations, harnessing methodologies encompassing multi-view stereo, structure from motion, depth from stereo, and photometric stereo. The implementation intricately navigates depth map extraction, point cloud generation, mesh formation, and video synthesis to offer a comprehensive solution.

Critical Steps Toward VisionGPT-3D Realization

The blueprint for VisionGPT-3D unfolds through a meticulously orchestrated sequence of steps. Initial strides encompass the derivation of depth maps, which are crucial for furnishing distance-related insights through mechanisms like disparity analysis or neural network-driven estimations akin to MiDas. Subsequent stages involve the transformation of these depth maps into point clouds, entailing a symphony of operations encompassing region identification, boundary delineation, noise filtration, and surface normal computation, all tailored to faithfully capture the scene’s geometry in a three-dimensional space. Emphasis is placed on object segmentation within depth maps, employing techniques such as thresholding, watershed segmentation, and mean-shift clustering to foster efficient delineation, enabling targeted manipulation and collision mitigation.

Advancing from point cloud generation, the journey progresses towards mesh creation, leveraging algorithms like Delaunay triangulation and surface reconstruction techniques to craft a tangible surface from the point cloud data. The selection of algorithms is guided by a nuanced analysis of scene attributes, ensuring adaptability to varying curvature profiles while preserving intricate features. Validation of the resultant mesh stands as a pivotal checkpoint, facilitated through methodologies like surface deviation analysis and volume conservation, assuring fidelity to the underlying geometrical constructs.

Culmination and Validation: Ensuring Integrity Across the Visual Spectrum

The culmination of the VisionGPT-3D framework culminates in the synthesis of videos from static frames, orchestrating elements such as object positioning, collision detection, and motion based on real-time analysis and adherence to physical principles. Rigorous validation protocols validate color accuracy, frame rate consistency, and overall fidelity to the intended visual representation, which is pivotal for seamless communication and user satisfaction. The framework not only automates the selection of state-of-the-art vision models but also discerns optimal mesh creation algorithms grounded in a meticulous analysis of two-dimensional depth maps. Moreover, an AI-driven approach guides the selection of object segmentation algorithms, refining segmentation efficiency and accuracy based on inherent image characteristics. Rigorous validation mechanisms, including 3D visualization tools and the VisionGPT-3D model itself, underscore the integrity and robustness of the generated outputs.

Conclusion:

The introduction of VisionGPT-3D marks a significant milestone in the realm of computer vision, promising enhanced capabilities in generating three-dimensional visuals from two-dimensional inputs. Its seamless integration of cutting-edge models and meticulous validation mechanisms sets a new standard for accuracy and fidelity. This advancement holds immense potential for various industries, including entertainment, manufacturing, and healthcare, where precise visualization plays a pivotal role in decision-making and innovation. As VisionGPT-3D continues to evolve, its impact on the market is poised to catalyze advancements in AI-driven visual processing and reshape industries reliant on accurate 3D reconstruction.