TL;DR:

- Human multisensory perception vs. robotic spatial awareness challenge.

- “Localization” and “Mapping” in robot cognition.

- Localization and mapping depend on each other, a “chicken-and-egg” problem.

- Deep learning empowers SLAM (Simultaneous Localization and Mapping) systems.

- Three deep learning properties: robust perception, cognitive capabilities and experiential learning.

- Deep learning’s versatile applications: pose estimation, association problem solving, feature discovery.

- Challenges: dependence on large labeled datasets, limited generalization, model opacity, and high computational cost.

- Future prospects: refined robotic spatial understanding through deep learning integration.

Main AI News:

In artificial intelligence and robotics, human perception has long served as a benchmark for progress. Asked “Where are you now?” or “What do your surroundings look like?”, you can answer precisely, thanks to the multisensory perception that gives humans a rich sense of spatial awareness and lets us navigate and understand the world with little effort. But how would a robot answer the same questions?

The crux of the problem is that without a map, a robot cannot determine where it is, much like a traveler lost in a vast expanse without coordinates. Yet without knowing where it is, the robot cannot build a coherent map of its surroundings either. This circular dependency mirrors the classic chicken-and-egg paradox, and in robotics it is known as the localization and mapping problem.

In robotics, “Localization” refers to a robot estimating its own state from its motion and sensor data: its position, orientation, and velocity. “Mapping”, by contrast, refers to perceiving and modeling the external environment, including its geometry, visual appearance, and semantics. The two tasks can be tackled separately, one focusing on the robot’s internal state and the other on its surroundings, or solved jointly as Simultaneous Localization and Mapping (SLAM).
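To make the two roles concrete, here is a minimal 2D sketch. It is an illustration, not code from the survey, and the names `Pose2D`, `compose`, and `to_world` are hypothetical. Localization is modeled as composing motion increments, expressed in the robot’s own frame, into a world-frame pose (x, y, heading); mapping is modeled as placing a landmark observed in the robot’s frame into world coordinates using that pose. Real systems estimate these quantities probabilistically from noisy sensors, which this toy version ignores.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose2D:
    """Hypothetical robot pose in the world frame: position (x, y) in metres, heading theta in radians."""
    x: float
    y: float
    theta: float


def compose(pose: Pose2D, dx: float, dy: float, dtheta: float) -> Pose2D:
    """Localization step: move by (dx, dy) in the robot frame, then turn by dtheta."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    new_theta = (pose.theta + dtheta + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
    return Pose2D(pose.x + c * dx - s * dy, pose.y + s * dx + c * dy, new_theta)


def to_world(pose: Pose2D, lx: float, ly: float) -> Tuple[float, float]:
    """Mapping step: convert a landmark seen at (lx, ly) in the robot frame into world coordinates."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return (pose.x + c * lx - s * ly, pose.y + s * lx + c * ly)


# Drive 1 m forward and turn left 90 degrees, then drive another 1 m.
pose = Pose2D(0.0, 0.0, 0.0)
pose = compose(pose, 1.0, 0.0, math.pi / 2)
pose = compose(pose, 1.0, 0.0, 0.0)

# A landmark observed 2 m straight ahead lands at roughly (1, 3) on the map.
landmark = to_world(pose, 2.0, 0.0)
print(pose, landmark)
```

Because `to_world` relies on the current pose estimate, any localization error is carried straight into the map, which is the coupling that motivates solving both problems jointly.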

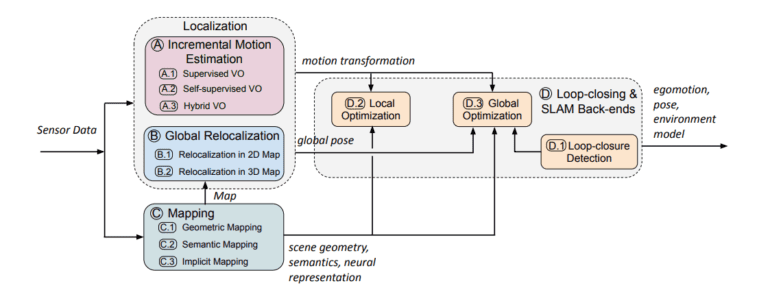

However, building effective SLAM systems is far from easy. Existing algorithms for image-based relocalization, visual odometry, and SLAM all struggle with imperfect sensor measurements, dynamic scenes, poor lighting, and other real-world constraints. The diagram above shows how discrete modules can be integrated into a deep learning-based SLAM framework. The underlying research presents a comprehensive survey of how deep learning-based and traditional approaches are converging, and addresses two central questions:

Is deep learning poised to reshape visual localization and mapping? Many researchers believe that deep learning has three distinct properties that could make it a driving force behind general-purpose SLAM systems in the foreseeable future.

- First, deep learning offers robust perception tools that can be integrated into the visual SLAM front-end, extracting features in complex scenes that are difficult for conventional odometry estimation and relocalization. It also enriches mapping by supplying dense depth information.

- Second, deep learning gives robots more advanced cognitive and interactive capabilities. Neural networks are good at linking raw sensory data to abstract, human-understandable concepts, which makes it possible to label semantic elements in mapping or SLAM frameworks, a task that is hard to formulate with rigorous mathematical models.

- Finally, learning-based methods allow SLAM systems, or standalone localization and mapping algorithms, to learn from experience and continually incorporate new information to improve themselves.
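As an illustration of how predicted depth can feed a map, the sketch below lifts a depth image into a 3D point cloud using the standard pinhole camera model. It assumes the depth map comes from some depth-prediction network (not shown) and that the camera intrinsics `fx`, `fy`, `cx`, `cy` are known; `backproject` is a hypothetical helper name, and the 2x2 depth values and intrinsics are made up for the example.

```python
import numpy as np


def backproject(depth: np.ndarray, fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Lift an H x W depth map (metres) to an (H*W) x 3 point cloud in the camera frame.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, Z = depth[v, u].
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u = column index, v = row index, both H x W
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1).reshape(-1, 3)


# Made-up 2x2 "predicted" depth map and intrinsics, for illustration only.
depth = np.array([[2.0, 2.0],
                  [4.0, 4.0]])
points = backproject(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(points)  # one (X, Y, Z) row per pixel, in row-major pixel order
```

Transforming such point clouds by the estimated camera poses and accumulating them over frames is one simple way to build a dense map; learned depth matters here because a single camera does not measure depth directly.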

Channeling the Potential: Applying Deep Learning to Address the Visual Localization and Mapping Conundrum

- Deep learning is a versatile tool for modeling many aspects of SLAM and of individual localization and mapping algorithms. A prime example is end-to-end neural networks that infer pose directly from images. Such models are especially valuable under challenging conditions, such as featureless regions, changing lighting, and motion blur, where conventional hand-crafted models tend to fail.

- Deep learning also helps solve association problems in SLAM, including relocalization, semantic mapping, and loop-closure detection: it can relate images to maps, assign semantic labels to pixels, and recognize relevant scenes from earlier observations.

- Another strength of deep learning is discovering task-relevant features automatically. By exploiting prior knowledge such as geometric constraints, a self-learning (self-supervised) framework can be built that lets a SLAM system adapt its parameters to the input images.
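Loop-closure detection is one of the association problems listed above. A common learning-based approach compares a global descriptor of the current keyframe against those of earlier keyframes. The sketch below assumes such descriptors already exist (in practice they would come from a place-recognition network; here they are tiny hand-made vectors) and scores candidates by cosine similarity. The helper `detect_loop` and its `min_gap` and `threshold` parameters are hypothetical.

```python
from typing import Optional

import numpy as np


def detect_loop(descriptors: np.ndarray, query_idx: int,
                min_gap: int = 2, threshold: float = 0.9) -> Optional[int]:
    """Return the earlier keyframe that best matches keyframe `query_idx`, or None.

    descriptors: (N, D) array, one global image descriptor per keyframe.
    min_gap: keyframes fewer than this many steps before the query are skipped,
             since consecutive frames trivially look alike.
    threshold: minimum cosine similarity required to accept a loop closure.
    """
    last = query_idx - min_gap  # latest keyframe index eligible for matching
    if last < 0:
        return None
    unit = descriptors / np.linalg.norm(descriptors, axis=1, keepdims=True)
    sims = unit[: last + 1] @ unit[query_idx]  # cosine similarity to each eligible keyframe
    best = int(np.argmax(sims))
    return best if sims[best] >= threshold else None


# Hand-made descriptors for five keyframes; keyframe 4 revisits the place seen in keyframe 0.
descriptors = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 1.0, 1.0],
    [0.95, 0.05, 0.0],
])
print(detect_loop(descriptors, 4))  # matches keyframe 0
print(detect_loop(descriptors, 3))  # no match above the threshold
```

In a full system, a candidate found this way would typically be verified geometrically before being added to the map as a loop-closure constraint.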

These techniques also have limitations. Deep learning models depend on large, carefully annotated datasets to learn meaningful patterns, and they often struggle to generalize to unfamiliar environments. They also lack interpretability, behaving largely as black boxes. Finally, deep models can make localization and mapping systems computationally expensive; although the workload is highly parallelizable, the cost can be prohibitive unless it is reduced with model compression techniques.

Conclusion:

The integration of deep learning into visual localization and mapping marks a significant paradigm shift. Robotic systems stand to gain enhanced spatial cognition, bridging the gap between human-like perception and machine-driven awareness. The convergence of these technologies opens doors to transformative advancements in various sectors, including autonomous navigation, industrial automation, and environmental monitoring. As the capabilities of deep learning continue to evolve, the market can anticipate a surge in innovative solutions that redefine how robots perceive and interact with their surroundings, revolutionizing industries and offering new avenues for growth and efficiency.