TL;DR:

- VMamba combines the strengths of CNNs and ViTs while overcoming their limitations.

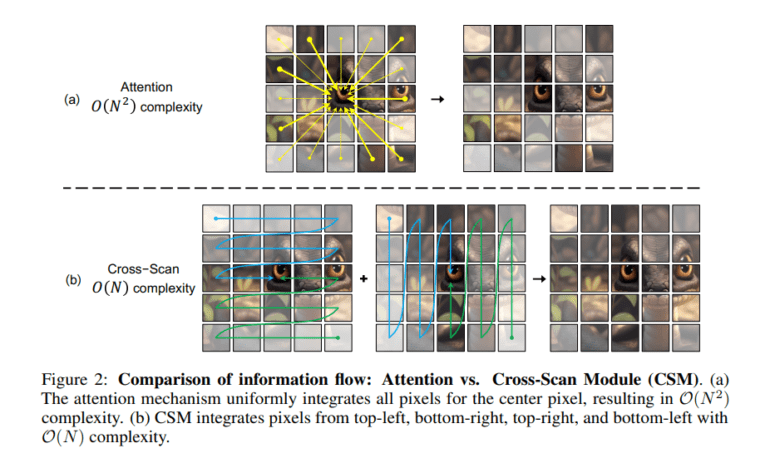

- Introduces Cross-Scan Module (CSM) for efficient spatial traversal.

- Achieves exceptional results in object detection and semantic segmentation benchmarks.

- Demonstrates superior accuracy and global effective receptive fields.

- VMamba presents a groundbreaking tool in computer vision.

Main AI News:

In the ever-evolving landscape of visual representation learning, two prominent challenges have long persisted: the computational inefficiency plaguing Vision Transformers (ViTs) and the limited capacity inherent in Convolutional Neural Networks (CNNs) to grasp global contextual information. While ViTs excel in fitting capabilities and international receptive fields, they suffer from quadratic computational complexity. On the other hand, CNNs offer scalability and linear complexity concerning image resolution but fall short in dynamic weighting and global perspective. The need for a model that harmoniously combines the strengths of both CNNs and ViTs, without inheriting their respective computational and representational limitations, becomes abundantly clear.

Extensive research has been dedicated to advancing machine visual perception. CNNs and ViTs have solidified their roles as foundational models in processing visual information, each possessing unique strengths. Meanwhile, State Space Models (SSMs) have gained acclaim for their efficiency in modeling long sequences, exerting influence across both Natural Language Processing (NLP) and computer vision domains.

Enter the Visual State Space Model (VMamba), a groundbreaking architectural innovation in visual representation learning, developed through collaborative efforts between UCAS, Huawei Inc., and Pengcheng Lab. VMamba draws inspiration from the state space model and aspires to address the computational inefficiencies associated with ViTs while retaining their unparalleled advantages, including global receptive fields and dynamic weights. The crux of this research lies in VMamba’s ingenious approach to tackling the direction-sensitive challenge within visual data processing, embodied by the Cross-Scan Module (CSM), meticulously designed for efficient spatial traversal.

The CSM, a pivotal component of VMamba, adeptly transforms visual images into patch sequences, leveraging a 2D state space model as its core framework. The model’s selective scan mechanism and discretization process synergize to augment its capabilities, setting it apart from conventional approaches. Extensive experiments validate VMamba’s prowess, demonstrating its superior effective receptive fields when compared to benchmark models like ResNet50 and ConvNeXt-T. Moreover, its performance in semantic segmentation on the ADE20K dataset reinforces its status as a formidable contender in the field.

Delving into specifics, VMamba has achieved remarkable results in various benchmarks. Notably, it boasts an impressive 48.5-49.7 mAP in object detection and 43.2-44.0 mIoU in instance segmentation on the COCO dataset, surpassing established models. On the ADE20K dataset, the VMamba-T model shines with a 47.3 mIoU, further bolstered to 48.3 mIoU with multi-scale inputs in semantic segmentation, consistently outperforming competitors such as ResNet, DeiT, Swin, and ConvNeXt, as mentioned previously. Its unwavering accuracy in semantic segmentation across various input resolutions reinforces VMamba’s global effective receptive fields as a defining feature, setting it apart from models with localized Effective Receptive Fields (ERFs).

The research on VMamba signifies a monumental leap forward in the realm of visual representation learning. It successfully amalgamates the strengths of CNNs and ViTs, offering a transformative solution to their inherent limitations. The ingenious CSM elevates VMamba’s efficiency to new heights, rendering it a versatile powerhouse for an array of visual tasks, all while maintaining superior computational effectiveness. VMamba’s ability to retain global receptive fields within a linear complexity framework underscores its potential as a groundbreaking tool poised to reshape the future of computer vision.

Conclusion:

The emergence of VMamba as a hybrid model blending the capabilities of CNNs and ViTs while addressing their inefficiencies signifies a significant breakthrough in the field of visual representation learning. The innovative Cross-Scan Module and impressive benchmark results position VMamba as a transformative tool with the potential to reshape the computer vision market by offering enhanced computational efficiency and superior accuracy in a range of visual tasks.