TL;DR:

- LabelFormer, developed by Waabi and the University of Toronto, is a transformer-based AI model.

- It efficiently refines object trajectories for auto-labeling, addressing the need for precisely labeled datasets in self-driving technology.

- Auto-labeling methods are gaining attention for their potential to provide larger datasets at a lower cost than human annotation.

- LabelFormer operates in the offboard perception setting, leveraging temporal context for superior results.

- It avoids redundant computation by refining each trajectory in a single shot, which keeps it computationally efficient.

- LabelFormer’s simplicity sets it apart from complex pipeline-based approaches, making it a cost-effective solution.

- Experiments show that LabelFormer is both faster and more accurate than window-based methods.

- Detectors trained on its auto-labels produce more accurate detections, making it a potential game-changer for the self-driving technology market.

Main AI News:

Modern self-driving systems rely heavily on large-scale, manually annotated datasets to train the object detectors that identify traffic participants in a scene. The growing demand for larger and more precise datasets has driven the emergence of auto-labeling methods, which are drawing significant attention from the industry.

Auto-labeling, if executed efficiently, promises larger datasets at a fraction of the expense of human annotation. The key is to produce labels at a computational cost below that of human annotation while matching or even exceeding human quality. The potential benefit is clear: more accurate and robust perception models trained on these auto-labeled datasets.

LiDAR is the primary sensor of choice for many self-driving platforms, and it plays a pivotal role in this context. Researchers at Waabi and the University of Toronto have introduced LabelFormer, a transformer-based AI model that refines object trajectories for auto-labeling, bringing cost-effective, high-quality labeling on a massive scale closer to reality.

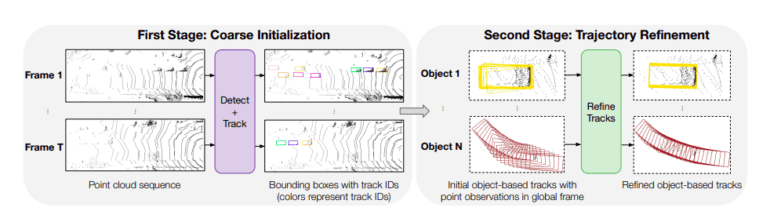

LabelFormer operates in the offboard perception setting, which has no real-time constraints and allows access to future observations. Fig. 1 illustrates the typical approach to the offboard perception problem. Drawing inspiration from the human annotation process, this approach follows a two-stage “detect-then-track” framework: it first obtains objects and their coarse bounding box trajectories, and then refines each object track independently.

The first stage of this process is dedicated to maximizing recall by tracking as many objects in the scene as possible. This is essential to ensure comprehensive coverage. The second stage, referred to as “trajectory refinement,” is where LabelFormer truly shines. It focuses on generating higher-quality bounding boxes, a critical component of precise object recognition.
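The two-stage structure described above can be sketched in a few lines. This is only an illustrative outline, not LabelFormer's implementation: the `Box` fields, the `refine_tracks` helper, and the `median_size_refiner` placeholder are all hypothetical names chosen for this example, and the placeholder refiner simply shares one median size across a track in place of a learned network.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Box:
    """Hypothetical bird's-eye-view bounding box for one object at one frame."""
    frame: int
    x: float
    y: float
    length: float
    width: float
    yaw: float


Track = List[Box]


def refine_tracks(
    coarse_tracks: Dict[int, Track],
    refine_fn: Callable[[Track], Track],
) -> Dict[int, Track]:
    """Second stage: refine every coarse track independently of the others."""
    return {track_id: refine_fn(track) for track_id, track in coarse_tracks.items()}


def median_size_refiner(track: Track) -> Track:
    """Placeholder refiner (an assumption, not the learned model).

    It replaces each box's size with the track-wide median size and leaves
    position and heading untouched.
    """
    lengths = sorted(box.length for box in track)
    widths = sorted(box.width for box in track)
    mid = len(track) // 2
    return [replace(box, length=lengths[mid], width=widths[mid]) for box in track]
```

Calling `refine_tracks(coarse_tracks, median_size_refiner)` on the first stage's output returns one refined track per object ID. In a real system, the placeholder would be swapped for a trained refinement model.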

Object occlusions, observations that grow sparse with range, and the diverse sizes and motion patterns of objects all make trajectory refinement difficult. LabelFormer addresses these challenges by efficiently exploiting the temporal context of the complete object trajectory. Current techniques, by contrast, rely on suboptimal sliding-window methods that apply a neural network independently at each time step within a limited temporal context.
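To make the redundancy of sliding windows concrete, the sketch below counts how many per-frame encodings each strategy performs for one trajectory. It rests on simplifying assumptions that do not come from the paper: an odd window size centered on each time step, a stride of one, windows clipped at the trajectory boundaries, and a window-based method that re-encodes every frame in every window. Both function names are hypothetical.

```python
def sliding_window_encodings(num_frames: int, window: int) -> int:
    """Count frame encodings when a centered window is applied at every time step.

    Assumes an odd `window`, stride one, and clipping at the trajectory ends.
    """
    half = window // 2
    total = 0
    for t in range(num_frames):
        start = max(0, t - half)
        end = min(num_frames, t + half + 1)
        total += end - start  # every frame inside the window is encoded again
    return total


def single_shot_encodings(num_frames: int) -> int:
    """Count frame encodings when each frame is encoded once for the whole track."""
    return num_frames
```

Under these assumptions, a 10-frame trajectory with a window of 5 requires 44 frame encodings with the sliding window and 10 with single-shot refinement. The gap widens as the window grows, which is why a larger temporal context is expensive for window-based methods.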

By using self-attention blocks in a transformer-based architecture, LabelFormer captures dependencies over time. It encodes the initial bounding box parameters and the LiDAR observations at each time step once, which eliminates the redundancy of repeatedly extracting features from the same frame. As a result, it can use ample temporal context while staying within computational constraints.
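As a rough illustration of how self-attention lets every time step draw on the whole trajectory, here is a minimal single-head scaled dot-product attention over per-frame feature vectors. It is a sketch under stated assumptions rather than LabelFormer's architecture: the feature vectors stand in for already-encoded box and LiDAR features, and queries, keys, and values are the raw features with no learned projections.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention across time steps.

    `features[t]` is the (hypothetical) encoded feature of time step t.
    Queries, keys, and values are the features themselves (an assumption
    made to keep the example free of learned weights).
    """
    dim = len(features[0])
    scale = math.sqrt(dim)
    outputs = []
    for query in features:
        # Similarity of this time step to every time step in the trajectory.
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in features]
        weights = softmax(scores)
        # Weighted average of all time steps' features.
        outputs.append(
            [sum(w * value[d] for w, value in zip(weights, features)) for d in range(dim)]
        )
    return outputs
```

Each output vector is a weighted average over all time steps, so every frame's feature is computed once and then reused by every other frame's attention rather than being re-extracted per window.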

LabelFormer stands out for its simplicity as well as its efficiency. Unlike previous complex pipelines that used multiple distinct networks to handle static and dynamic objects differently, LabelFormer offers a straightforward and cost-effective trajectory refinement technique. It delivers more precise bounding boxes and is more computationally efficient than existing window-based approaches, which strengthens the case for auto-labeling over human annotation.

In a comprehensive experimental assessment on highway and urban datasets, the researchers at Waabi and the University of Toronto demonstrate LabelFormer’s advantages in speed and performance. The approach is faster than window-based methods and also produces higher-quality results. Furthermore, using LabelFormer to auto-label larger datasets for training downstream object detectors yields more accurate detections than relying solely on human-annotated data or on other auto-labeling solutions.

Conclusion:

LabelFormer’s introduction into the self-driving technology market signifies a major advancement. Its ability to refine object trajectories efficiently and accurately for auto-labeling not only reduces costs but also improves the quality of labeled datasets. The technology has the potential to accelerate the development and deployment of self-driving systems, offering a competitive edge to companies in the rapidly evolving autonomous vehicle industry.