TL;DR:

- Machine learning (ML) is revolutionizing weather forecasting.

- WeatherBench 2 was introduced by Google, DeepMind, and the European Centre for Medium-Range Weather Forecasts (ECMWF).

- Benchmarking framework for weather prediction models.

- Features open-source evaluation code and optimized datasets.

- Focus on global, medium-range forecasting (1-15 days).

- Includes a diverse set of evaluation metrics.

- Aligns with meteorological agency standards.

- WeatherBench 2 (WB2) is positioned as the gold standard for data-driven weather prediction.

- Bridges experimental methods with operational benchmarks.

- Aims to make ML work more efficient and results reproducible.

Main AI News:

Weather forecasting is changing quickly as machine learning (ML) techniques mature. ML models now rival conventional physics-based models in predictive accuracy, which opens the door to more precise global weather predictions. Realizing that potential depends on objective evaluation using well-established metrics.

Recent collaborative research led by Google, DeepMind, and the European Centre for Medium-Range Weather Forecasts (ECMWF) introduces WeatherBench 2, a framework for benchmarking and comparing weather prediction models. Beyond providing a comprehensive copy of the ERA5 dataset, which is used to train most ML models, it includes open-source evaluation code and a set of optimized ground-truth and baseline datasets that are accessible on cloud platforms.

WeatherBench 2 is designed for global, medium-range forecasting, covering lead times of 1 to 15 days. The team plans to extend it to other forecasting tasks, including nowcasting and short-term prediction (0-24 hours) as well as long-range prediction (15+ days).

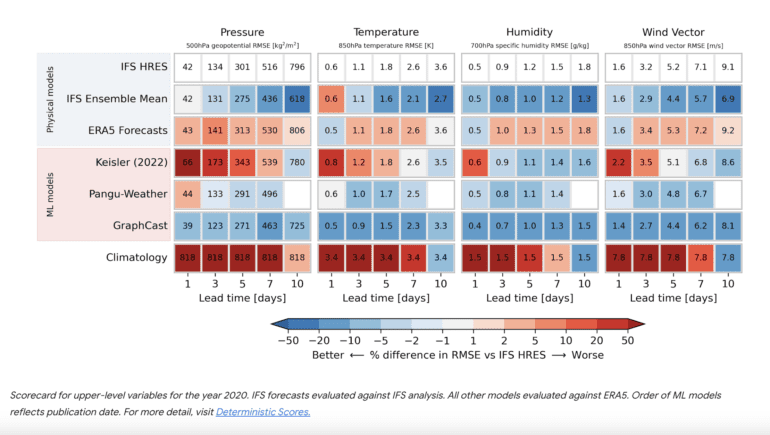

Evaluating a weather forecast is complicated and cannot be reduced to a single score. Different users care about different things: for some, average temperature matters most, while others care more about the frequency and intensity of wind gusts. WeatherBench 2 therefore provides a range of metrics. A small set of key ones, called “headline” metrics, summarizes the results. They were chosen to align with the evaluation practices of meteorological agencies and the World Meteorological Organization, so that study results are reported consistently.
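To make the idea of a forecast metric concrete, here is a minimal sketch of a latitude-weighted root-mean-square error (RMSE), a score commonly used for global gridded forecasts. The article does not name specific metrics, so the choice of RMSE, the cosine-of-latitude weighting, and the function names `latitude_weights` and `lat_weighted_rmse` are all assumptions made for illustration; the benchmark's own code may weight grid cells differently.

```python
import math


def latitude_weights(lats_deg):
    """Return weights proportional to cos(latitude), normalized to mean 1.

    Assumption: a simple cosine weighting stands in for grid-cell area.
    """
    raw = [math.cos(math.radians(lat)) for lat in lats_deg]
    mean = sum(raw) / len(raw)
    return [w / mean for w in raw]


def lat_weighted_rmse(forecast, truth, lats_deg):
    """Latitude-weighted RMSE between two gridded fields.

    forecast, truth: lists of rows, one row per latitude,
    each row holding one value per longitude.
    """
    weights = latitude_weights(lats_deg)
    total = 0.0
    count = 0
    for w, f_row, t_row in zip(weights, forecast, truth):
        for f, t in zip(f_row, t_row):
            total += w * (f - t) ** 2
            count += 1
    return math.sqrt(total / count)


# Toy example: a 3 x 2 temperature grid (kelvin) with an error only at the equator.
lats = [-60.0, 0.0, 60.0]
truth = [[280.0, 280.0], [300.0, 300.0], [280.0, 280.0]]
forecast = [[280.0, 280.0], [302.0, 302.0], [280.0, 280.0]]
print(lat_weighted_rmse(forecast, truth, lats))
```

On a regular latitude-longitude grid, cells near the poles cover less area than cells near the equator, so an unweighted average would overstate polar errors. In this toy example the equatorial error counts for more than it would in a plain RMSE.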

WeatherBench 2 (WB2) is positioned as a gold standard for data-driven global weather prediction. It reflects the AI techniques that have emerged since the first WeatherBench benchmark was released. WB2 is modeled on the operational forecast evaluation used by leading weather centers, which makes it possible to compare experimental methods directly against operational baselines.

The project has two goals: to make ML work more efficient and to promote reproducibility by openly sharing evaluation code and data. The authors expect WB2 to grow according to the needs of the community. The paper points to possible extensions, such as a closer focus on evaluating extremes and impact-relevant variables at finer resolutions, potentially using station observations.

Conclusion:

WeatherBench 2 marks an important step for weather forecasting. With ML models reaching parity with traditional models in accuracy, the framework offers a common basis for measuring further progress. Its open-source tools, comprehensive datasets, and diverse evaluation metrics position it as the gold standard for data-driven global weather prediction. By making ML work more efficient and results more reproducible, it could change how weather forecasting models are evaluated and compared across the field.