TL;DR:

- Large language models (LLMs) have advanced language comprehension and production.

- LLMs have improved question answering, but struggle with specialized knowledge.

- WebGPT integrates online browsing and comprehensive answers, but it depends on costly expert annotations and responds slowly.

- Researchers introduce WebGLM, a web-enhanced QA system based on GLM-10B.

- WebGLM is effective, affordable, and sensitive to human preferences, with performance comparable to WebGPT.

- WebGLM incorporates novel approaches like LLM-augmented retrieval and bootstrapped generation.

- WebGLM addresses the high resource demands and slow response times of WebGPT-style systems.

- It enhances user experience and aligns with human preferences.

Main AI News:

In language processing, large language models (LLMs) have raised the bar for what computers can comprehend and generate. Models such as GPT-3, PaLM, OPT, BLOOM, and GLM-130B have made remarkable strides in advancing language capabilities. Question answering (QA) is among the applications that have benefited most: recent studies show that LLMs perform on par with supervised models in both closed-book QA and in-context learning QA, evidence of their impressive capacity to memorize knowledge. That capacity is finite, however, and LLMs fall short of human expectations on questions that demand substantial specialized knowledge. Consequently, recent work has focused on augmenting LLMs with external knowledge through retrieval and online search.

One noteworthy innovation in this domain is WebGPT, a system that combines online browsing with comprehensive answers to intricate queries and useful references. Despite the attention it has received, the original WebGPT approach has not been widely adopted. First, it relies heavily on expert-level annotations of browsing trajectories, carefully written responses, and answer preference labels, all of which are expensive and time-consuming to collect and require extensively trained annotators. Second, its behavior cloning approach, which teaches the model to operate a web browser by issuing commands such as “Search,” “Read,” and “Quote” and to extract relevant information from online sources, requires its base model, GPT-3, to behave much like a human expert.

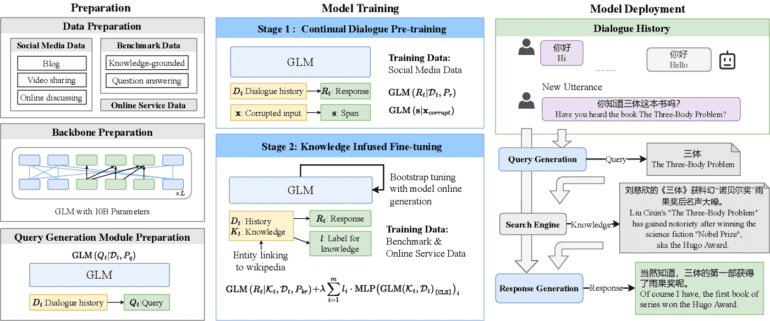

Furthermore, the multi-turn structure of web browsing demands substantial computational resources and can make for a poor user experience. For instance, WebGPT-13B takes approximately 31 seconds to respond to a 500-token query. To address these challenges, a team of researchers from Tsinghua University, Beihang University, and Zhipu.AI introduced WebGLM, a web-enhanced question-answering system built on the 10-billion-parameter General Language Model (GLM-10B). Figure 1 illustrates the system's architecture. WebGLM stands out for its effectiveness, affordability, and sensitivity to human preferences and, most significantly, for performance on par with WebGPT.

To achieve this performance, WebGLM introduces several novel components. The first is the LLM-augmented Retriever, a two-stage retriever that combines coarse-grained web search with fine-grained, LLM-distilled retrieval. It builds on the observation that LLMs such as GPT-3 can readily identify relevant references, and it distills that ability into a smaller dense retriever. The second is the bootstrapped generator, a response generator based on GLM-10B and trained on long-form QA samples with extensive quotations, which are bootstrapped through LLM in-context learning. Because these samples are filtered by the quality of their citations rather than written by human experts, LLMs can supply high-quality training data, and the system performs well without substantial annotation costs.
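The two ideas in the paragraph above can be made concrete with a minimal Python sketch, built under stated assumptions rather than taken from WebGLM's code. `web_search` and `embed` are hypothetical callables standing in for a search engine and a small dense retriever (in WebGLM, the retriever is distilled from LLM relevance judgments). The token-overlap check in `keep_sample` is an assumed, simplified stand-in for citation-based filtering, not WebGLM's exact criterion, and the 0.5 threshold is arbitrary.

```python
import math
import re
from typing import Callable

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def two_stage_retrieve(
    query: str,
    web_search: Callable[[str], list[str]],  # hypothetical: returns candidate passages
    embed: Callable[[str], list[float]],     # hypothetical: small dense retriever
    k: int = 3,
) -> list[str]:
    # Stage 1 (coarse-grained): a web search engine narrows the web to a few candidates.
    candidates = web_search(query)
    # Stage 2 (fine-grained): the dense retriever reranks candidates by similarity to the query.
    q = embed(query)
    ranked = sorted(candidates, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:k]

CITATION = re.compile(r"\[(\d+)\]")

def overlap_precision(sentence: str, reference: str) -> float:
    # Share of the sentence's tokens that also occur in the reference (assumed metric).
    tokens = re.findall(r"\w+", sentence.lower())
    ref_tokens = set(re.findall(r"\w+", reference.lower()))
    return sum(t in ref_tokens for t in tokens) / len(tokens) if tokens else 0.0

def keep_sample(answer: str, references: list[str], threshold: float = 0.5) -> bool:
    # Keep a bootstrapped answer only if it cites something and every cited
    # sentence is supported by the references it cites.
    cited_any = False
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        ids = [int(i) for i in CITATION.findall(sentence)]
        if not ids:
            continue
        cited_any = True
        if any(not 1 <= i <= len(references) for i in ids):
            return False  # citation points to a reference that does not exist
        support = " ".join(references[i - 1] for i in ids)
        if overlap_precision(CITATION.sub("", sentence), support) < threshold:
            return False
    return cited_any
```

For example, with a toy bag-of-words `embed`, the query "capital of France" ranks "the capital of France is Paris" above unrelated passages, and `keep_sample` rejects an answer such as "Paris has ten million cats [1]." whose cited sentence shares little vocabulary with its reference. Letting search do the coarse narrowing keeps the dense retriever's job small, which is part of what makes a smaller model sufficient.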

Finally, WebGLM employs a scorer that learns from users' thumbs-up signals on online QA forums. From these signals, the scorer learns which responses the majority of users prefer. By accounting for the human factor, WebGLM aligns its answers with users' expectations and preferences, improving the overall user experience.
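The paragraph above does not spell out how thumbs-up counts become a training signal. One common approach, and an assumption in this sketch rather than a description of WebGLM's implementation, is to turn answers to the same question into (preferred, rejected) pairs by vote count and train the scorer with a pairwise logistic (Bradley-Terry) loss. The sketch uses a linear model over hypothetical hand-made feature vectors; a real scorer would be a neural model reading the full answer text.

```python
import math
from itertools import combinations

Features = list[float]

def make_pairs(answers: list[tuple[Features, int]]) -> list[tuple[Features, Features]]:
    """Turn (features, thumbs_up) answers to ONE question into (preferred, rejected) pairs."""
    pairs = []
    for (fa, va), (fb, vb) in combinations(answers, 2):
        if va > vb:
            pairs.append((fa, fb))
        elif vb > va:
            pairs.append((fb, fa))
        # Ties carry no preference signal and are skipped.
    return pairs

def score(w: Features, x: Features) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))

def train_scorer(pairs, dim: int, lr: float = 0.5, epochs: int = 200) -> Features:
    """Minimize the pairwise loss -log(sigmoid(score(good) - score(bad))) by SGD."""
    w = [0.0] * dim
    for _ in range(epochs):
        for good, bad in pairs:
            margin = score(w, good) - score(w, bad)
            # Negative gradient of the loss w.r.t. the margin is 1 - sigmoid(margin).
            step = lr / (1.0 + math.exp(margin))
            for i in range(dim):
                w[i] += step * (good[i] - bad[i])
    return w

# Hypothetical features: [cites a source (0/1), length in thousands of words].
forum_answers = [([1.0, 0.3], 40), ([0.0, 0.3], 2), ([1.0, 0.9], 25), ([0.0, 0.9], 1)]
pairs = make_pairs(forum_answers)
weights = train_scorer(pairs, dim=2)
print(weights)
```

On this toy data the learned weights favor answers that cite a source, and every preferred answer in `pairs` scores above its rejected counterpart. At inference time, such a scorer can rank several candidate answers and return the highest-scoring one.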

Conclusion:

The introduction of WebGLM, a web-enhanced question-answering system based on GLM-10B, is a significant advance for the market. Its effectiveness, affordability, and sensitivity to user preferences make it a strong competitor to existing systems such as WebGPT. Its novel components show how LLMs can strengthen smaller models: LLM-augmented retrieval refines a smaller dense retriever, and bootstrapped generation supplies high-quality training data without expert annotation. By reducing resource demands and response times, WebGLM improves the user experience. As a result, the market can expect a more efficient web-enhanced QA solution that aligns with human preferences and expands the capabilities of language models.