TL;DR:

- Wolfram Language version 13.3 introduces comprehensive support for Large Language Models (LLMs).

- A new LLM subsystem lets developers use LLMs directly from within the Wolfram Language.

- The LLM integration combines natural language programming with the Wolfram Language’s symbolic programming.

- LLM-powered computation enhances the language’s mathematical and logical problem-solving capabilities.

- The update includes Chat Notebooks, enabling intuitive interaction with LLMs.

- The integrated LLM can correct errors in the code it produces and offers different personas, including ones for code assistance.

- Wolfram also launched a prompt repository that extends LLM functionality with a wide range of prompts.

- The prompt repository serves as a community-driven platform for extending Wolfram Language capabilities.

- Its ready-made, callable prompts reduce the need for extensive “LLM wrangling.”

- The Wolfram Language is becoming an indispensable tool for AI enthusiasts and developers.

Main AI News:

Wolfram has taken a major step into generative AI with version 13.3 of the Wolfram Language. The release adds comprehensive support for Large Language Models (LLMs) and integrates an AI model into the Wolfram Cloud.

The update is the culmination of Wolfram’s ongoing effort to equip the language with the tools it needs to work with LLMs. Its new LLM subsystem lets developers call LLMs directly from the language. It builds on the LLM functions technology introduced in May, which packaged AI capabilities as callable functions. With the subsystem now available to users, developers can bring LLM technology into their own projects.
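The idea of packaging an LLM capability as a callable function can be sketched in a few lines. The Python below is a minimal, hypothetical illustration of the pattern and not Wolfram’s API (in the Wolfram Language itself, `LLMFunction` fills this role): a prompt template with named slots is wrapped into an ordinary function, and each call fills the slots and passes the finished prompt to a model backend. The backend `fake_model` is an assumed stand-in that echoes its input, so the example runs without any LLM service.

```python
from string import Template
from typing import Callable


# Hypothetical model backend: any function that maps a prompt string to a reply.
# A real system would call an LLM service here; this stub only echoes the prompt
# so the example runs offline.
def fake_model(prompt: str) -> str:
    return f"<model reply to: {prompt}>"


def llm_function(template: str, model: Callable[[str], str] = fake_model) -> Callable[..., str]:
    """Wrap a prompt template with $-style slots into an ordinary callable."""
    tmpl = Template(template)

    def call(**slots: str) -> str:
        # Fill the named slots (KeyError if one is missing), then send the
        # finished prompt to the model backend.
        prompt = tmpl.substitute(**slots)
        return model(prompt)

    return call


# Usage: the prompt is written once and then called like any other function.
to_french = llm_function("Translate into French: $text")
print(to_french(text="good morning"))
```

Writing the prompt once and reusing it as a function is what makes it easy to drop into larger programs; replacing `fake_model` with a call to a real model client would be the main change needed.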

These advances open up new ways for developers to work with data. The update combines Stephen Wolfram’s vision of natural language programming with the Wolfram Language’s symbolic programming. Coupled with the Wolfram Language API, this integration can also be built into larger systems, giving them a natural language interface backed by symbolic computation.

The Wolfram Language is well suited to LLM-powered computation because of its symbolic programming capabilities. In code, the language already handles complex mathematics, including algebra, matrix manipulation, and differential equations. To strengthen its logical problem-solving further, Stephen Wolfram, the language’s creator, decided to integrate LLM capabilities into it.

Stephen Wolfram’s work with LLMs began with the Wolfram ChatGPT plugin, which gave the chatbot access to the language’s symbolic programming abilities. The plugin encouraged Stephen to push symbolic language further. Speaking to AIM, he said, “We’re on the cusp of using the symbolic language [Wolfram] to incorporate LLM as a component in a larger software stack. It’s a beautifully synergistic approach.”

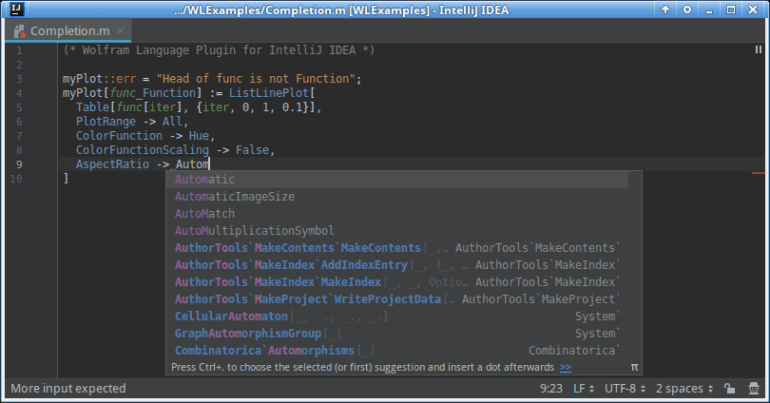

Version 13.3 moves in that direction by integrating LLMs into the Wolfram Language through a dedicated subsystem. After the ChatGPT plugin and LLM functions, Stephen introduced Chat Notebooks. This feature lets users interact with LLMs inside a Wolfram Notebook through a simple text box, so they can generate Wolfram Language code without detailed knowledge of its syntax. Stephen described the interface as an example of “using an LLM as a linguistic interface with common sense,” because it lets users work with the language intuitively.

Stephen believes that this integration represents a natural progression of the language’s existing capabilities, stating, “When you’re working on something familiar, it’s usually faster and better to think directly in Wolfram Language and input the computational code you need. However, if you’re exploring new territories or just starting out, the LLM can be an invaluable tool to guide you towards your initial code.”

The integrated LLM can also correct itself, identifying and fixing errors in the code snippets it produces. It can likewise help debug existing Wolfram Language code by analyzing stack traces and error documentation. In addition, it offers several personas, each suited to a specific purpose: the code assistant persona generates and explains code, the code writer persona only generates code, and personas such as Wolfie and Birdnado respond to users “with attitude.”
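Self-correction of this kind is commonly built as a loop: generate code, run it, and if it fails, feed the error message back to the model for another attempt. The Python below is a sketch of that generic pattern under stated assumptions, not Wolfram’s implementation. `fake_generator` is a hypothetical stand-in for an LLM whose first answer contains a bug and whose next answer, after it has seen the traceback, is correct.

```python
import traceback
from typing import Callable, Optional


def run_with_self_correction(
    generate: Callable[[str, Optional[str]], str],
    task: str,
    max_attempts: int = 3,
) -> dict:
    """Generate code for `task`, execute it, and retry with the error text on failure."""
    error: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        source = generate(task, error)
        namespace: dict = {}
        try:
            exec(source, namespace)
            return {"attempts": attempt, "result": namespace.get("result")}
        except Exception:
            # Keep the full traceback so the next generation step can "read" it,
            # much as the text describes the LLM analyzing stack traces.
            error = traceback.format_exc()
    raise RuntimeError(f"no working code after {max_attempts} attempts:\n{error}")


# Hypothetical stand-in for an LLM: its first answer has a bug; once it is
# shown an error message, it returns a corrected version.
def fake_generator(task: str, error: Optional[str]) -> str:
    if error is None:
        return "result = sum(range(1, 11)) / zero"  # NameError: 'zero' is undefined
    return "result = sum(range(1, 11))"


outcome = run_with_self_correction(fake_generator, "add the numbers 1 to 10")
print(outcome)
```

Running generated code with `exec` is only acceptable in a toy example like this one; a real assistant would execute candidate code in an isolated sandbox before trusting it.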

To extend LLM functionality further, Wolfram launched a prompt repository that provides additional function prompts and modifier prompts for the language’s AI tools. Stephen emphasized that the Wolfram Language will keep deepening its integration with LLMs; for now, the prompt repository shows much of what the new AI tools can do.

The prompt repository is a community-driven platform offering a range of prompts that let the Wolfram Language’s LLM features adopt different personas, each with its own use cases. The community has also contributed many functions that extend the language beyond its already extensive set of built-in functions.

The repository’s prompts fall into three main groups: personas, functions, and modifiers. Personas set the style of interaction with users, functions generate output from existing text, and modifiers apply specific effects to the output an LLM produces. Each prompt can be invoked from code, so developers can build them into existing projects or use them to extend their programs.
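To make the three categories concrete, here is a hypothetical Python sketch of how a persona, a function prompt, and modifiers could be combined into a single chat request. The `PromptRequest` class, the message layout, and the `system`/`user` roles (borrowed from common chat-completion APIs) are all assumptions for illustration; they are not the prompt repository’s actual format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptRequest:
    """Hypothetical container for one LLM call assembled from repository-style prompts."""

    persona: Optional[str] = None  # style of interaction with the user
    function: str = ""  # task applied to the input text
    modifiers: List[str] = field(default_factory=list)  # effects applied to the output

    def build(self, text: str) -> List[dict]:
        messages = []
        if self.persona:
            # The persona becomes a system-style instruction that frames the whole exchange.
            messages.append({"role": "system", "content": self.persona})
        # The function prompt states the task; modifiers follow as extra output instructions.
        instructions = [self.function] + self.modifiers
        user_content = "\n".join(instructions) + "\n\nText:\n" + text
        messages.append({"role": "user", "content": user_content})
        return messages


req = PromptRequest(
    persona="You are a concise technical assistant.",
    function="Summarize the following text in one sentence.",
    modifiers=["Answer in French."],
)
messages = req.build("Wolfram Language 13.3 adds an LLM subsystem.")
for m in messages:
    print(m["role"], "->", m["content"])
```

Keeping the three kinds of prompt separate means any persona can be paired with any function and any stack of modifiers, which is what makes the categories reusable building blocks.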

The repository streamlines workflows by reducing the need for extensive “LLM wrangling.” As Stephen Wolfram explains, “While using just the prompt’s description might work in some cases, oftentimes further clarification is needed. Sometimes, there’s a certain amount of ‘LLM wrangling’ required. This necessitates some degree of ‘prompt engineering’ for almost any prompt.”

Because the repository’s prompts have already been engineered, users can skip most of that prompt engineering and draw on a database of ready-to-use, callable prompts. Each prompt can be called from Wolfram Language code like a function, with the LLM doing the work behind the call, so it can be dropped into any program to add LLM capabilities. Combined with the language’s own text-processing capabilities, this makes it straightforward to work with large pieces of text. With this expanded LLM functionality, the Wolfram Language is poised to become an indispensable tool for AI enthusiasts and developers alike.

Conclusion:

The latest update to the Wolfram Language, version 13.3, brings generative AI capabilities to the language by integrating Large Language Models (LLMs). Developers can use LLMs directly within the Wolfram Language, combining natural language programming with symbolic programming. The expanded LLM functionality, together with Chat Notebooks and the prompt repository, streamlines workflows and extends what the language can do. The release positions the Wolfram Language as an indispensable tool for AI enthusiasts and developers seeking powerful generative AI capabilities.