TL;DR:

- “Woodpecker” is a tool developed by researchers at the University of Science and Technology of China (USTC) and Tencent’s YouTu Lab to correct hallucinations in multimodal AI models.

- Hallucination is the tendency of AI models to produce confident outputs that are not supported by the facts. In multimodal models, it means generated text that contradicts the content of the input image.

- The tool targets multimodal large language models (MLLMs) such as GPT-4V, which combine vision and text processing.

- Woodpecker uses three AI models, GPT-3.5 Turbo, Grounding DINO, and BLIP-2-FlanT5, to identify and correct hallucinations.

- It employs a five-stage process, including key concept extraction and visual knowledge validation.

- The researchers report accuracy improvements of up to 30.66% over the baseline models MiniGPT-4 and mPLUG-Owl.

- According to the researchers, Woodpecker can be applied to a variety of off-the-shelf MLLMs.

Main AI News:

Researchers from the University of Science and Technology of China (USTC) and Tencent’s YouTu Lab have taken aim at one of the most persistent problems in artificial intelligence: hallucination. Their tool, called “Woodpecker,” is designed to detect and correct hallucinated content in the output of multimodal AI models.

Hallucination, in the context of AI, refers to the tendency of models to generate outputs with unwarranted confidence that are not grounded in fact or in the input they were given. The problem has been studied extensively in large language models (LLMs) and affects well-known systems such as OpenAI’s ChatGPT and Anthropic’s Claude.

Woodpecker focuses on hallucinations in multimodal large language models (MLLMs): models such as GPT-4V, the vision-capable version of GPT-4, that combine image understanding and other capabilities with text-based language modeling. For these models, a hallucination typically means describing objects or details that are not actually present in the image.

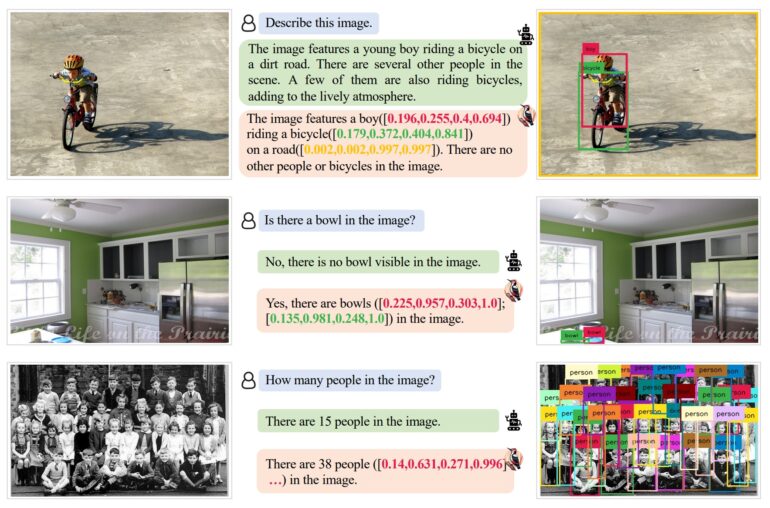

Woodpecker does not rely on a single model. It combines three existing AI models: GPT-3.5 Turbo, a large language model that handles the text-processing steps; Grounding DINO, an open-set object detector that locates objects in an image; and BLIP-2-FlanT5, a vision-language model that can answer questions about an image. Together they act as evaluators, checking an MLLM’s response against the image to pinpoint hallucinated content. Rather than retraining the model under correction, Woodpecker revises the response it has already produced so that it agrees with the visual evidence.

The correction proceeds in five stages. First, key concept extraction identifies the main objects mentioned in the response. Second, question formulation generates questions about those objects, such as whether they are present and what attributes they have. Third, visual knowledge validation answers those questions using the vision models. Fourth, visual claim generation turns the question-answer pairs into a structured set of claims about the image. Finally, hallucination correction rewrites the original response so that it is consistent with those claims.
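The five stages can be illustrated with a toy Python sketch. It is not Woodpecker’s implementation, only an illustration under heavy simplifying assumptions: the hypothetical `ToyImage` class stands in for both the image and the vision models that inspect it, a fixed `VOCAB` word list replaces LLM-based concept extraction, only existence questions are asked, and the final stage simply drops sentences that mention absent objects instead of having an LLM rewrite the response. All function names are illustrative.

```python
from dataclasses import dataclass

# Hypothetical stand-in for an image plus the vision models that inspect it:
# just the objects present and their counts. Woodpecker uses real models here.
@dataclass
class ToyImage:
    object_counts: dict[str, int]

# Hypothetical fixed vocabulary, replacing LLM-based key concept extraction.
VOCAB = {"dog", "cat", "frisbee", "bench", "car"}

def words_of(text: str) -> set[str]:
    """Lower-cased words with trailing punctuation removed."""
    return {w.strip(".,!?").lower() for w in text.split()}

def extract_key_concepts(response: str) -> list[str]:
    """Stage 1: find the objects the response mentions."""
    return sorted(words_of(response) & VOCAB)

def formulate_questions(concepts: list[str]) -> list[tuple[str, str]]:
    """Stage 2: turn each concept into a question to check against the image."""
    return [(c, f"Is there a {c} in the image?") for c in concepts]

def validate(questions: list[tuple[str, str]], image: ToyImage) -> dict[str, bool]:
    """Stage 3: answer each question from the visual evidence (here, a lookup)."""
    return {c: image.object_counts.get(c, 0) > 0 for c, _ in questions}

def generate_claims(answers: dict[str, bool]) -> list[str]:
    """Stage 4: restate the answers as explicit claims about the image."""
    return [f"There is a {c}." if present else f"There is no {c}."
            for c, present in answers.items()]

def correct(response: str, claims: list[str]) -> str:
    """Stage 5: drop sentences mentioning objects the claims rule out.
    A crude rule standing in for an LLM rewrite guided by the claims."""
    absent = {c.removeprefix("There is no ").rstrip(".")
              for c in claims if c.startswith("There is no ")}
    sentences = [s.strip() for s in response.split(".") if s.strip()]
    kept = [s for s in sentences if not (words_of(s) & absent)]
    return " ".join(s + "." for s in kept)

def run_pipeline(response: str, image: ToyImage) -> tuple[str, list[str]]:
    """Chain the five stages: concepts -> questions -> answers -> claims -> fix."""
    concepts = extract_key_concepts(response)
    questions = formulate_questions(concepts)
    answers = validate(questions, image)
    claims = generate_claims(answers)
    return correct(response, claims), claims

image = ToyImage({"dog": 1, "frisbee": 1, "bench": 1})
response = "A dog is catching a frisbee. A cat sits on the bench."
fixed, claims = run_pipeline(response, image)
print(claims)
print(fixed)  # A dog is catching a frisbee.
```

The sentence about the cat is removed because the only negative claim concerns a cat. A real correction step would rewrite that sentence (keeping, for example, the bench) rather than delete it, and would also check counts and attributes, not just presence.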

The researchers argue that this step-by-step design makes the correction process more transparent and robust: because each intermediate question, answer, and claim is explicit, it can be inspected. They report accuracy improvements of up to 30.66% over the baseline models MiniGPT-4 and mPLUG-Owl. They also suggest that Woodpecker can be readily applied to a variety of “off the shelf” MLLMs, which could make it broadly useful across multimodal AI systems.
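If the correction operates only on a model’s finished response, it can in principle be layered onto an existing MLLM without changing the model itself. The minimal Python sketch below assumes that framing. `with_correction`, `fake_mllm`, and `toy_corrector` are hypothetical names, the “model” returns a fixed string, an image is reduced to a set of object labels, and the corrector is a single string replacement standing in for a full correction pipeline.

```python
from typing import Callable

# Hypothetical simplification: an image is reduced to the labels it contains.
Image = set[str]
# Hypothetical signatures: an MLLM maps (image, prompt) to text, and a
# post-hoc corrector maps (image, response) to a revised response.
Generate = Callable[[Image, str], str]
Corrector = Callable[[Image, str], str]

def with_correction(generate: Generate, corrector: Corrector) -> Generate:
    """Wrap an existing model so every answer is passed through a corrector.
    Only the model's output is used; the model itself is left unchanged."""
    def wrapped(image: Image, prompt: str) -> str:
        draft = generate(image, prompt)
        return corrector(image, draft)
    return wrapped

# Stand-in for an off-the-shelf MLLM that hallucinates a cat.
def fake_mllm(image: Image, prompt: str) -> str:
    return "A dog and a cat are on the grass."

# Stand-in corrector: a fixed string edit applied when no cat is in the image.
def toy_corrector(image: Image, response: str) -> str:
    if "cat" not in image:
        response = response.replace(" and a cat are", " is")
    return response

model = with_correction(fake_mllm, toy_corrector)
answer = model({"dog"}, "Describe the image.")
print(answer)  # A dog is on the grass.
```

Keeping the generator and the corrector as separate callables is what makes the wrapper model-agnostic: switching to a different MLLM only changes the `generate` argument.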

Conclusion:

Woodpecker represents a notable step toward addressing hallucination in AI models. Its reported accuracy gains of up to 30.66% suggest it could improve the reliability of existing AI models and open new opportunities for innovation in the AI market. More reliable outputs could, in turn, increase trust in and adoption of AI technologies across industries.