TL;DR:

- Researchers from UK universities introduced X-Raydar, an open-source AI system for chest X-ray anomaly detection.

- X-Raydar pairs a neural network for image classification with a natural language processing (NLP) model, X-Raydar-NLP, for classifying free-text reports.

- Built on a dataset of 2,513,546 chest X-ray studies and 1,940,508 free-text radiological reports collected over 13 years.

- The X-Raydar-NLP algorithm labels chest X-rays by extracting findings from their reports, using a 37-finding taxonomy.

- Achieved a mean AUC of 0.919 on an auto-labeled test set and outperformed historical radiologist reporters on 27 of 37 findings in an expert consensus set.

- Matches trained radiologists on critical findings such as pneumothorax, parenchymal opacification, and parenchymal mass or nodules.

- Uses a radiological taxonomy spanning eight anatomical areas plus non-anatomical structures for comprehensive labeling.

- Offers a public web portal that automatically pre-processes and classifies uploaded chest X-ray images.

- The researchers have open-sourced their trained network architectures for further research.

Main AI News:

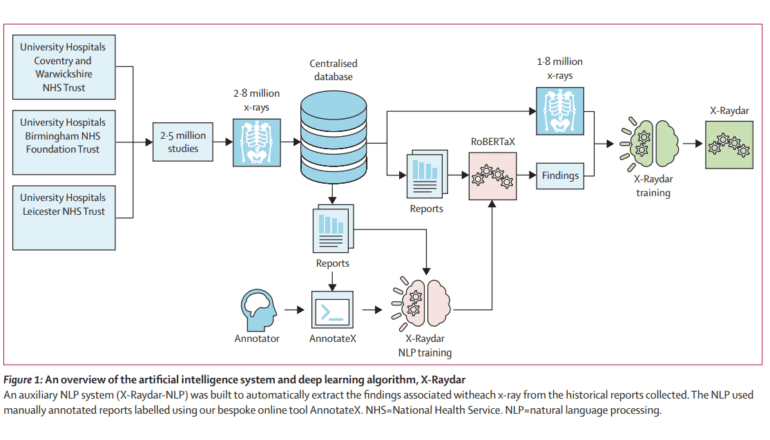

A team of researchers from universities in the United Kingdom has unveiled X-Raydar, an open-source artificial intelligence (AI) system for comprehensive detection of chest X-ray abnormalities. The system uses two neural networks, X-Raydar for images and X-Raydar-NLP for text, to classify common chest X-ray abnormalities from both the images and their accompanying free-text reports. The underlying dataset spans 13 years and comprises 2,513,546 chest X-ray studies and 1,940,508 free-text radiological reports.

The key component is a custom-trained natural language processing (NLP) algorithm, X-Raydar-NLP, which labeled the chest X-rays using a taxonomy of 37 findings extracted directly from the reports. The AI algorithms were evaluated on three retrospective datasets and performed at a level comparable to historical clinical radiologist reporters across a range of clinically significant findings.
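
To make the labeling step concrete: once findings have been extracted from a report, each study's labels can be stored as a multi-hot vector with one slot per taxonomy entry, which is the usual target format for multi-label image classifiers. The sketch below assumes that representation. The five-finding `TAXONOMY` and the `to_multi_hot` helper are hypothetical illustrations, not the published 37-finding taxonomy or the X-Raydar-NLP model itself.

```python
# Hypothetical five-finding subset; the real taxonomy has 37 findings and is not reproduced here.
TAXONOMY = [
    "pneumothorax",
    "parenchymal_opacification",
    "parenchymal_mass_or_nodule",
    "pleural_effusion",
    "cardiomegaly",
]


def to_multi_hot(findings, taxonomy=TAXONOMY):
    """Turn the set of findings extracted from one report into a 0/1 target vector,
    one position per taxonomy entry, in taxonomy order."""
    unknown = set(findings) - set(taxonomy)
    if unknown:
        raise ValueError(f"findings not in taxonomy: {sorted(unknown)}")
    present = set(findings)
    return [1 if label in present else 0 for label in taxonomy]


if __name__ == "__main__":
    print(to_multi_hot({"pneumothorax", "pleural_effusion"}))  # [1, 0, 0, 1, 0]
```

Rejecting unknown findings, rather than silently dropping them, keeps a mismatch between the labeler's output and the taxonomy from quietly corrupting training targets.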

X-Raydar achieved a mean AUC (area under the receiver operating characteristic curve) of 0.919 on the auto-labeled set, 0.864 on the consensus set, and 0.842 on the MIMIC-CXR test set. On the consensus set, it outperformed historical reporters on 27 of the 37 findings, performed comparably on nine, and was inferior on only one, an average improvement of 13.3% over the reporters. For critical findings, including pneumothorax, parenchymal opacification, and parenchymal mass or nodules, its performance matched that of trained radiologists.
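
A mean AUC over many findings is typically computed by scoring each finding separately and then averaging. The sketch below assumes an unweighted (macro) mean, which the article does not specify, and computes each per-finding AUC from its probabilistic definition: the chance that a randomly chosen positive case receives a higher score than a randomly chosen negative one. The function names and toy data are illustrative.

```python
def roc_auc(labels, scores):
    """ROC AUC: probability that a randomly chosen positive case scores higher
    than a randomly chosen negative case (ties count as half a win)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:  # O(P * N) pairwise comparison; fine for a small illustration
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def mean_auc(label_matrix, score_matrix):
    """Unweighted mean of per-finding AUCs.
    Rows are studies, columns are findings (one column per taxonomy entry)."""
    n_findings = len(label_matrix[0])
    aucs = [
        roc_auc([row[j] for row in label_matrix], [row[j] for row in score_matrix])
        for j in range(n_findings)
    ]
    return sum(aucs) / len(aucs), aucs


if __name__ == "__main__":
    labels = [[1, 0], [0, 1], [1, 1], [0, 0]]
    scores = [[0.9, 0.2], [0.3, 0.8], [0.6, 0.4], [0.1, 0.5]]
    print(mean_auc(labels, scores))  # (0.875, [1.0, 0.75])
```

The pairwise loop keeps the definition visible; for test sets as large as those described here, a rank-based or library implementation such as scikit-learn's `roc_auc_score` would be the practical choice.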

Beyond the detection models, the team built an extensive radiological taxonomy covering eight anatomical areas and non-anatomical structures, enabling comprehensive labeling. The NLP algorithm, X-Raydar-NLP, was trained on 23,230 manually annotated reports to extract these labels. The computer vision algorithm, X-Raydar, uses InceptionV3 for feature extraction and was optimized with a custom loss function and class weighting factors.
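
The article does not describe X-Raydar's custom loss or how its class weighting factors were chosen. As a stand-in, the sketch below uses a common technique for imbalanced multi-label data: binary cross-entropy with a per-finding weight on the positive term, where each weight is the ratio of negative to positive training examples so that rare findings count for more. The function names and the weighting rule are assumptions, and the InceptionV3 feature extractor that would produce the per-finding probabilities is omitted.

```python
import math


def positive_class_weights(label_matrix):
    """Hypothetical weighting rule: negatives / positives for each finding,
    so rarer findings receive larger weights (1.0 if a finding never occurs)."""
    n = len(label_matrix)
    weights = []
    for j in range(len(label_matrix[0])):
        pos = sum(row[j] for row in label_matrix)
        neg = n - pos
        weights.append(neg / pos if pos else 1.0)
    return weights


def weighted_bce(labels, probs, pos_weights, eps=1e-7):
    """Mean multi-label binary cross-entropy for one study,
    with the positive-class term scaled by a per-finding weight."""
    total = 0.0
    for y, p, w in zip(labels, probs, pos_weights):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(w * y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


if __name__ == "__main__":
    train_labels = [[1, 0], [1, 0], [0, 1], [0, 0]]
    weights = positive_class_weights(train_labels)
    print(weights)  # [1.0, 3.0]
    print(weighted_bce([1, 0], [0.5, 0.5], weights))  # ~0.693 (= ln 2)
```

With these weights, missing a positive case of the rarer second finding costs three times as much as an equally confident miss on a negative case, which pushes the model to pay attention to under-represented findings.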

Evaluation used three datasets: a consensus set of 1,427 images annotated by expert radiologists, an auto-labeled set (n = 103,328), and the independent MIMIC-CXR dataset of 252,374 cases. X-Raydar-NLP detected clinically relevant findings in free-text reports with a mean sensitivity of 0.921 and specificity of 0.994. On the consensus set, X-Raydar achieved a mean AUC of 0.864 across critical, urgent, and non-urgent findings.
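
Sensitivity and specificity for a report labeler come from comparing its output with manual annotations, one finding at a time: sensitivity is TP / (TP + FN) and specificity is TN / (TN + FP). The article does not say how per-finding values were averaged, so this sketch assumes an unweighted mean across findings; the function names and toy data are illustrative.

```python
def sensitivity_specificity(truth, predicted):
    """Per-finding sensitivity TP/(TP+FN) and specificity TN/(TN+FP)."""
    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative annotation")
    return tp / (tp + fn), tn / (tn + fp)


def mean_sens_spec(truth_matrix, pred_matrix):
    """Unweighted means over findings; rows are reports, columns are findings."""
    n_findings = len(truth_matrix[0])
    sens, spec = [], []
    for j in range(n_findings):
        s, c = sensitivity_specificity(
            [row[j] for row in truth_matrix], [row[j] for row in pred_matrix]
        )
        sens.append(s)
        spec.append(c)
    return sum(sens) / n_findings, sum(spec) / n_findings


if __name__ == "__main__":
    manual = [[1, 0], [1, 1], [0, 0], [0, 1]]   # annotator labels
    labeler = [[1, 0], [0, 1], [0, 1], [0, 1]]  # labeler output
    print(mean_sens_spec(manual, labeler))  # (0.75, 0.75)
```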

To make the work accessible, the research team released web-based tools for public use. The X-Raydar online portal lets users upload DICOM images for automatic pre-processing and classification. The team has also open-sourced its trained network architectures, providing a foundation model for future research and adaptation. This open approach lets others build on X-Raydar when developing AI applications for radiology.

Conclusion:

X-Raydar marks a significant milestone in chest X-ray abnormality detection. Its open-source availability and performance on par with trained radiologists could disrupt the radiology market by giving medical professionals a powerful, accessible tool, with the potential to improve patient care and diagnostic accuracy.