TL;DR:

- Large Language Models (LLMs) struggle with numerical calculations involving large numbers.

- xVal introduces a groundbreaking [NUM] token that can represent any number, so each number costs just one token.

- It stores numerical values in a separate vector, scales the [NUM] embedding by each value, and predicts values with a dedicated number head.

- xVal outperforms other methods in multi-operand tasks and complex calculations.

- In temperature data analysis, xVal excels with minimal training time.

- In planetary simulations, xVal’s interpolation abilities surpass all other encoding schemes.

Main AI News:

In the vast landscape of Large Language Models (LLMs), one challenge has persistently confounded researchers and scientists alike: incorporating numerical calculations, particularly those involving large numbers. Despite their remarkable proficiency across a multitude of language-based tasks, these models fall short of the same competence in numerical operations. When asked to multiply two four-digit numbers, for instance, their success rate barely breaches the 90% mark, leaving ample room for improvement.

The crux of this issue lies in the fundamental disparity between numbers and the conventional building blocks of language. Unlike letters or words, which are discrete and well defined, numbers span a continuous range of values and obey strict rules governing their manipulation. This mismatch between language models and numerical data has sparked an active search for a viable solution.

However, existing solutions remain scarce and far from flawless. LLMs, celebrated for their prowess with language, struggle to accommodate the continuous and infinitely variable nature of numerical data. The prevailing approaches rely on tokenization schemes that fragment each number into multiple tokens, which lengthens input sequences, increases model complexity, and imposes heavy memory requirements.
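To make the cost of fragmentation concrete, here is a minimal sketch comparing two hypothetical tokenizers: `digit_tokens` splits every number into individual characters, while `single_token` collapses each number into one placeholder token (the idea behind xVal's [NUM]). Both functions are illustrative assumptions, not the encoding schemes evaluated in the xVal experiments.

```python
import re

# Matches signed integers and decimals (scientific notation is not handled).
NUMBER = re.compile(r"[-+]?\d+(?:\.\d+)?")

def digit_tokens(text: str) -> list[str]:
    """Hypothetical digit-level tokenizer: one token per character of a number."""
    tokens = []
    for word in text.split():
        if NUMBER.fullmatch(word):
            tokens.extend(list(word))  # digits, sign and decimal point each become a token
        else:
            tokens.append(word)        # ordinary words stay whole
    return tokens

def single_token(text: str) -> list[str]:
    """Hypothetical tokenizer that maps every number to a single [NUM] token."""
    return ["[NUM]" if NUMBER.fullmatch(w) else w for w in text.split()]

sample = "temperature 273.15 pressure 101325"
print(len(digit_tokens(sample)), len(single_token(sample)))
```

Even in this short sample, the two numbers alone expand into twelve tokens under the digit-level scheme, whereas the single-token scheme spends one token per number regardless of how many digits it has.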

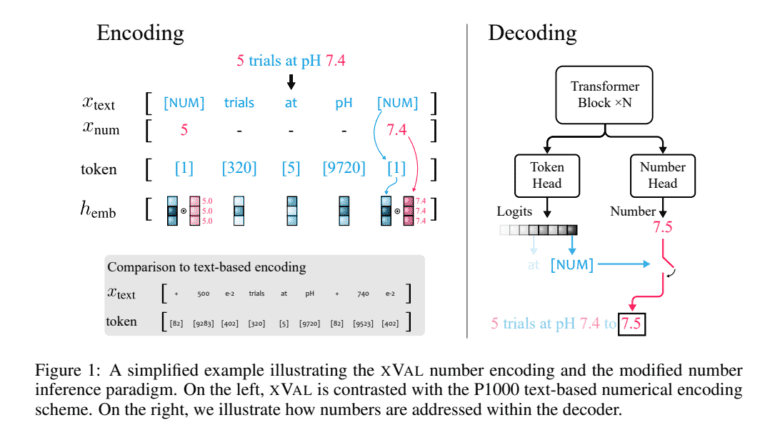

Enter the realm of innovation: researchers at Polymathic AI have unveiled a potential game-changer, the xVal encoding strategy. This approach offers a fresh perspective on numerical encoding within LLMs and is tailored particularly to scientific applications. xVal introduces a single token, labeled [NUM], that serves as an all-purpose representation for any numerical value.
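The text-side preprocessing can be sketched in a few lines of Python. This is a simplified illustration, assuming a regular expression that only recognizes signed integers and decimals; the function name `encode` is hypothetical, and any normalization of the values that xVal's actual preprocessing applies is omitted here.

```python
import re

# Simplifying assumption: only signed integers and decimals count as numbers.
NUMBER = re.compile(r"[-+]?\d+(?:\.\d+)?")

def encode(text: str) -> tuple[str, list[float]]:
    """Replace each number with [NUM] and collect the values, in order, in a separate list."""
    values = [float(m.group()) for m in NUMBER.finditer(text)]
    masked = NUMBER.sub("[NUM]", text)
    return masked, values

masked, values = encode("Mass 5.97 orbits at radius 149.6 with period 365")
print(masked)
print(values)
```

The masked string goes through the ordinary tokenizer, while the list of values travels alongside it, so the model sees one [NUM] token per number no matter how many digits that number has.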

The xVal strategy accomplishes this by treating numbers as distinct entities within the language model's architecture. Instead of spreading a number across many tokens, each numerical value is pre-processed and stored in a separate vector, while the number itself is replaced in the text by the [NUM] token. At the input, the learned embedding of [NUM] is multiplied by the corresponding value, so a single token carries the number's magnitude. During decoding, a dedicated number head added to the transformer predicts the actual value behind each [NUM] token, and this head is trained with a Mean Squared Error (MSE) loss between the predicted and true values.
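The mechanism described in the paragraph above can be illustrated with a small NumPy sketch. Everything here is an assumption for illustration: the vocabulary size, the id `NUM_ID`, the random embeddings, and the linear `number_head` are hypothetical, and the transformer layers (along with any masking or next-token shifting a real training setup would use) are skipped so that the embeddings stand in for the final hidden states.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, d_model = 8, 16
NUM_ID = 3  # hypothetical vocabulary id reserved for the [NUM] token

embedding = rng.normal(size=(vocab_size, d_model))

def embed(token_ids: np.ndarray, num_values: np.ndarray) -> np.ndarray:
    """Look up embeddings and scale each row by its entry in num_values.

    num_values holds the number at [NUM] positions and 1.0 elsewhere,
    so ordinary tokens keep their unscaled embeddings.
    """
    return embedding[token_ids] * num_values[:, None]

# Hypothetical linear number head: maps a hidden state to a single scalar.
w_num = rng.normal(size=d_model) / np.sqrt(d_model)

def number_head(hidden: np.ndarray) -> np.ndarray:
    return hidden @ w_num

def mse(pred: np.ndarray, target: np.ndarray) -> float:
    return float(np.mean((pred - target) ** 2))

token_ids = np.array([1, NUM_ID, 5, NUM_ID])
num_values = np.array([1.0, 0.42, 1.0, -1.3])
x = embed(token_ids, num_values)

# Only [NUM] positions contribute to the MSE term.
is_num = token_ids == NUM_ID
loss = mse(number_head(x[is_num]), num_values[is_num])
print(x.shape, loss)
```

Because every number shares one embedding row and differs only by a scalar factor, the vocabulary does not grow with the range of numbers the model must handle.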

A series of rigorous experiments compared xVal against four alternative numerical encoding strategies, with intriguing results. xVal outperformed its counterparts on multi-operand tasks, demonstrating strong competence in complex calculations, including the multiplication of large multi-digit integers.

The most telling test of xVal's prowess came with temperature data from the ERA5 global climate dataset. Here, xVal's inherent bias toward continuity let it pull ahead, achieving the best performance of the schemes tested while requiring minimal training time.
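One way to see where this continuity bias comes from: if, as xVal does, a number is encoded by scaling a single [NUM] embedding by its value, then the distance between two encoded numbers is exactly proportional to the difference between them. The sketch below checks this with a random stand-in vector `e_num` and a hypothetical helper `num_embedding`; it illustrates the input encoding only, not the trained model.

```python
import numpy as np

# Hypothetical [NUM] embedding; any fixed nonzero vector works for this check.
rng = np.random.default_rng(1)
e_num = rng.normal(size=16)

def num_embedding(value: float) -> np.ndarray:
    # xVal-style input encoding: the shared [NUM] embedding scaled by the value
    return value * e_num

for a, b in [(20.0, 20.5), (20.0, 25.0)]:
    dist = np.linalg.norm(num_embedding(a) - num_embedding(b))
    # dist equals |a - b| * ||e_num||, so the ratio recovers the gap between values
    print(a, b, round(dist / np.linalg.norm(e_num), 6))
```

Nearby temperatures therefore start out with nearby representations, whereas under a digit-level tokenization, values such as 19.9 and 20.0 share almost no tokens.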

Furthermore, in simulations of planets orbiting a central mass, xVal's interpolation abilities took the spotlight: it consistently outperformed all other encoding schemes when making predictions on out-of-distribution data.

Conclusion:

xVal's innovative approach to numerical encoding within language models marks a significant shift for scientific applications. By bridging the gap between language and numbers, xVal not only enhances the capabilities of Large Language Models but also opens the door to new possibilities in scientific fields. Its strong performance across diverse scenarios makes it a potential game-changer for how scientific data is processed and analyzed in the years ahead.